Video made using the PIC/FLIP solver we'll implement in this tutorial!

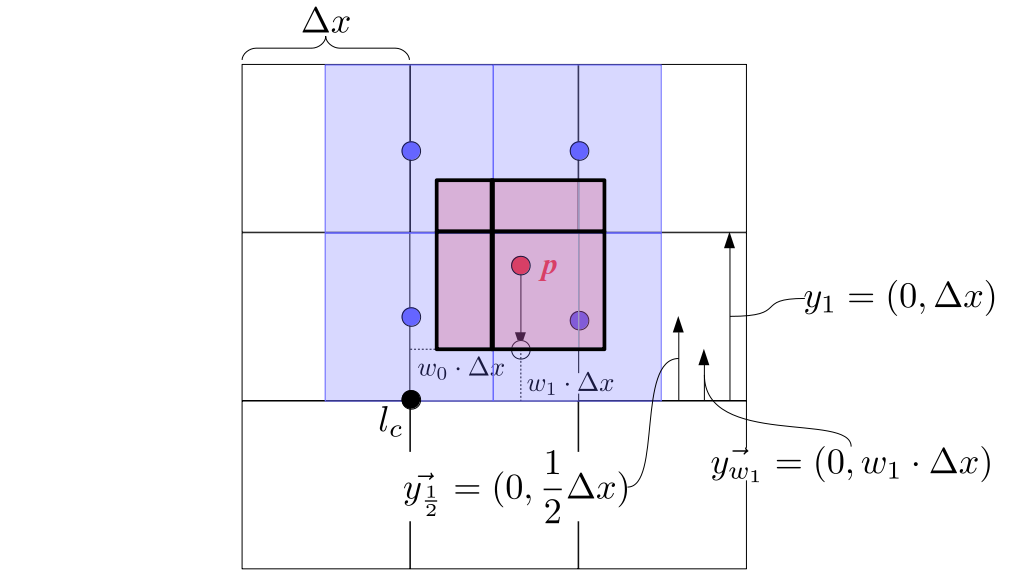

Physics-Based Simulation & Animation of Fluids

Have you ever wondered how special effects involving fluids like water are animated for movies? In this tutorial, we'll show you how to simulate fluids using the popular Particle-in-Cell / Fluid-Implicit-Particle (PIC/FLIP) method. Along the way, we will:

- write all the code, from scratch, for a physics-based fluid simulation,

- derive our code implementation from a widely used mathematical model of fluid physics,

- install minimal, widely available free software,

- not skip any steps, and

- follow professional code style and testing practices.

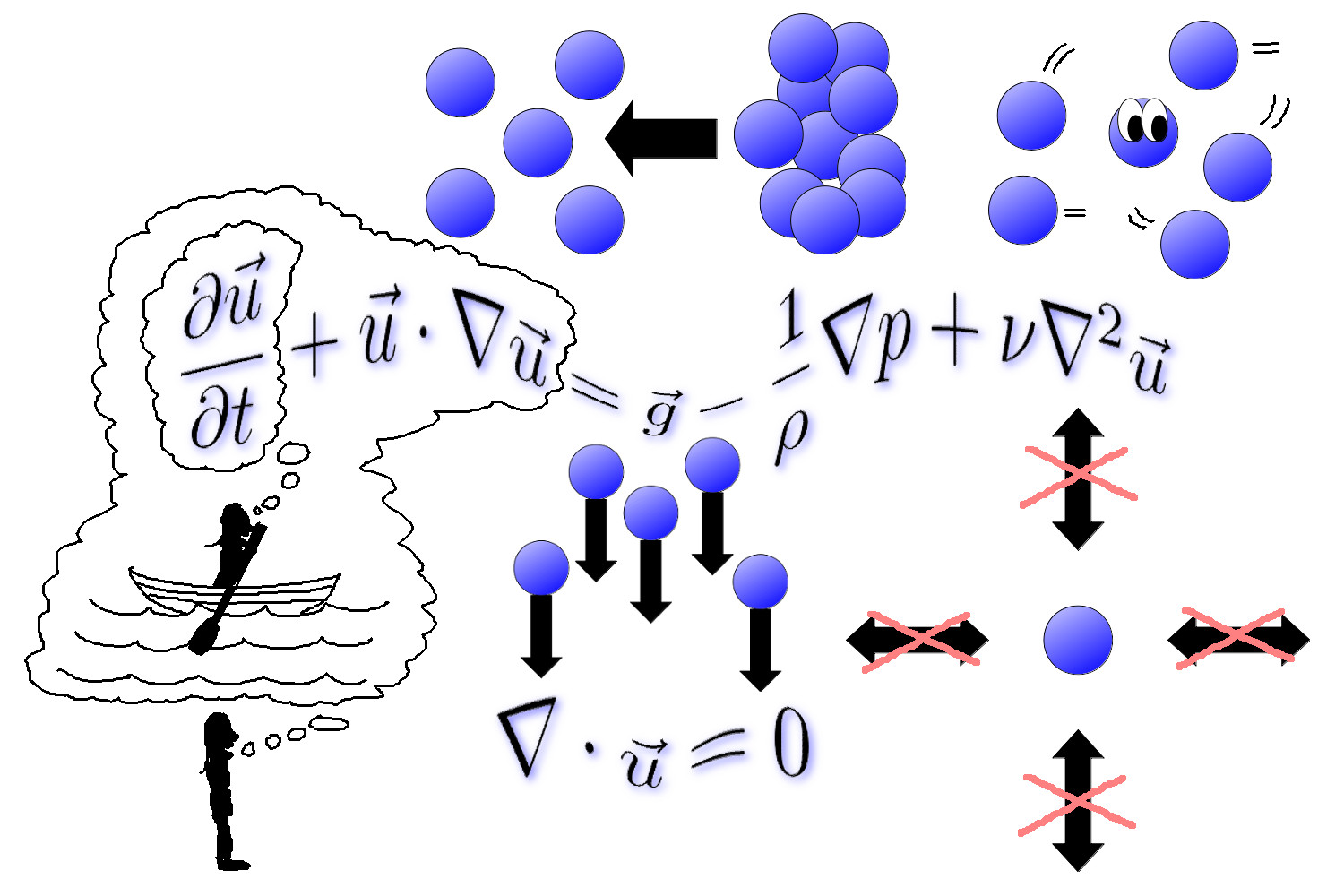

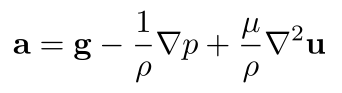

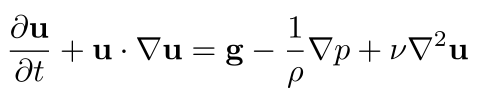

The mathematical model of fluid physics driving this tutorial is the set of Navier-Stokes equations. For now, we'll simply represent them with this image, without explanation. We'll dive into the details of these equations when we introduce fluid mechanics below.
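For readers who want to see the symbols now, here is one common way of writing the incompressible Navier-Stokes equations, the form typically used in computer graphics texts such as Dr. Bridson's book. We're assuming, rather than confirming, that this matches the image above. In it, u is the fluid velocity, p is the pressure, ρ is the density, g is gravitational acceleration, and ν is the kinematic viscosity.

```latex
\frac{\partial \mathbf{u}}{\partial t} + \mathbf{u} \cdot \nabla \mathbf{u} + \frac{1}{\rho} \nabla p = \mathbf{g} + \nu \nabla \cdot \nabla \mathbf{u},
\qquad
\nabla \cdot \mathbf{u} = 0
```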

Acknowledgments

This tutorial expands on the fluid simulation portion of the excellent SIGGRAPH course on physics-based animation created by Dr. Adam Bargteil and Dr. Tamar Shinar. It also references the excellent educational materials on fluid simulation put together by Dr. Robert Bridson and Dr. Matthias Müller-Fischer, as well as Dr. Bridson's book on fluid simulation. These resources are tremendously useful for making physics-based simulation and animation accessible to a wide audience, and I merely hope to add some details and illustrations on top of these awesome contributions by others. We will cite all of these materials frequently throughout this tutorial! I highly recommend going through them if you are at all curious about physics-based simulation for computer graphics.

Background

This tutorial is designed assuming a year or two of experience in or exposure to:

- Computer programming in C++

- Using a command-line terminal on a computer running Linux, e.g., the Ubuntu operating system

- Newtonian mechanics, e.g., F = ma, momentum, position, velocity, acceleration

- Calculus: differential, integral, and vector calculus

Some exposure to computer graphics helps but is certainly not required. If you're a second- or third-year computer science college student, you have more than enough background! If you haven't gone to college, haven't studied computer science, don't remember much math or physics, or this list just feels overwhelming in general, that's okay! Feel free to follow along and look up and/or skip lots of things! You don't have to understand everything, by any means, to gain something from this tutorial. Other than access to a computer (yes, this can be a challenge) and at least an occasional Internet connection, everything else this tutorial requires is freely available!

What to Install

This tutorial will use the C++ programming language. To display graphics on the computer screen, we will use the Open Graphics Library (OpenGL) and the associated OpenGL Utility Toolkit (GLUT), specifically freeglut, a free implementation of GLUT. Both are freely available and widely used for displaying graphics.

From this point onward, I'll assume you're using a computer running Ubuntu 16.04 or 18.04. I'll also assume your computer account has permission to install new software on that computer, or if not, that someone else can do it for you. If you're running another operating system, such as Windows or macOS, you should be able to follow along but with some small changes to commands and the code.

Open a Terminal (CTRL+ALT+T on Ubuntu). Type this command and press Enter:

g++ --version

You should see some text showing which version of g++ is installed on your computer. g++ is the program we'll use to compile our code. If you don't see a version, you'll need to install g++; on Ubuntu, running sudo apt install build-essential installs it along with other common build tools. For our purposes, the exact compiler version shouldn't matter much.

Next, in a Terminal (the same one as before is fine!), type this command and then press Enter to run it:

sudo apt install freeglut3-dev

If prompted, enter your password and press Enter. Once the computer finishes installing OpenGL and GLUT, figure out where the libraries were installed. I typed something like ls /usr/lib/x86_64-linux-gnu/libgl and pressed the TAB key twice. The Terminal then listed a whole bunch of files whose names start with libgl, including libglut.so. So, my OpenGL and GLUT libraries were installed in /usr/lib/x86_64-linux-gnu/. If you're not sure where they went on your computer, running dpkg -L freeglut3-dev lists every file that package installed. You can also search online.

This is not required, but I highly recommend installing clang-format, a program many software developers in industry use to format their code in a consistent style. We'll use it to format our code in Google's style. To install it, run this command in a Terminal and press Enter:

sudo apt install clang-format-10

It's okay to use another version of this program; I just happen to be using version 10 at the moment.

Now, create a directory (folder) somewhere on your computer where you want to store all the code you write as part of this tutorial. You can and will make multiple subdirectories in there as the tutorial proceeds, but it's good to have one parent directory that holds everything. cd to that parent directory in a Terminal window. Then type this command and press Enter:

clang-format-10 -style=Google -dump-config > .clang-format

This creates a "hidden" file in this directory containing default clang-format settings consistent with Google's style. A hidden file is one that ls -a shows but plain ls does not. Here is my .clang-format file. With -style=file, clang-format looks for a .clang-format file in the source file's directory and in each of its parent directories. So one copy in this parent directory covers all the subdirectories you create inside it. If for some reason you want to keep your code in several unrelated places on your computer, just re-run this command in each of those directories.

Why Physics?

Why do we use physics and math to derive code that simulates a fluid? It's actually quite hard to animate fluid motion by hand in a way that looks believable to movie viewers. Also, as computers have become more powerful, it has become more feasible to simulate accurate physics rather than fake it.

To make physics into something we can compute, though, we must use math. In physics, the terms "mechanics" or "dynamics" typically refer to the mathematical relationships between motion (kinematics) and the forces (kinetics) that generate that motion. You'll often hear the terms "fluid mechanics" and "fluid dynamics" used interchangeably. The whole point is to determine how forces lead to motion, mathematically, so that a computer can do that math and simulate that motion for animation. Fluid simulation is also very important in scientific applications, including weather prediction.

Viewing Geometry Using OpenGL

OpenGL is a general framework that allows us to do graphics programming. To use it to display geometry effectively, we need to set up some initial working code and understand a bit about how OpenGL code manages what we see on the screen.

Hello, Sphere!

Our first program will be a single file containing code in the C++ programming language. When we run this program, if everything is working, a window will pop up on the computer screen displaying a single, red, boring sphere. It's so boring, in fact, that it won't even look three-dimensional: it'll just look like a filled-in red circle.

Make a directory called basic inside the directory that'll house all your code for this tutorial. Okay, you can put this directory anywhere, but this will just make it less work for you to run clang-format on your code and keep it organized the same way as this tutorial's code. In that directory, create a file called HelloSphere.cpp. You can copy the code from my HelloSphere.cpp file and save it. Or you can just download the file by clicking on this link and then clicking the "Raw" button on that page. I put a lot of comments in the file explaining what the code does. If you want to learn more details about how OpenGL and GLUT work, you can find plenty of documentation via online search.

In a Terminal, cd to the directory containing this HelloSphere.cpp file. Then run this command:

clang-format-10 -style=file -i HelloSphere.cpp

Congratulations! Your C++ code is now formatted according to Google's standards! Well, at least it's formatted to the standards a computer can follow automatically. Now, let's compile your code with this command:

g++ HelloSphere.cpp -o HelloSphere -L/usr/lib/x86_64-linux-gnu/ -lGL -lglut -lGLU

If it compiled with no errors or warnings, let's run the program! Run it with this command:

./HelloSphere

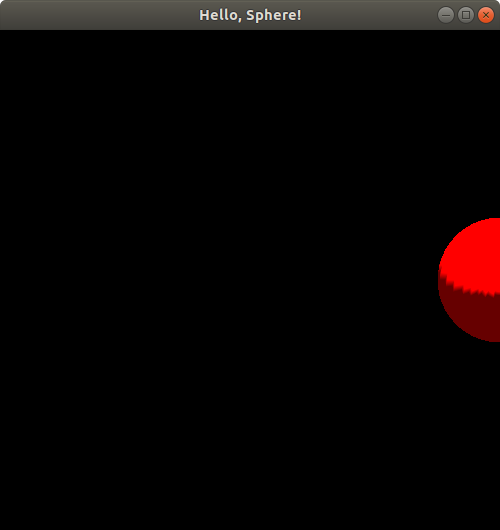

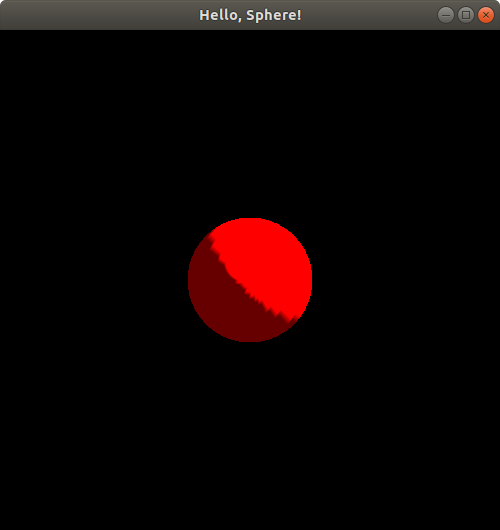

You should see a window pop up on your screen that looks something like this:

You can close the window to stop the program.

Here are a couple of sources that I think nicely illustrate how to create simple OpenGL programs from scratch:

- StackOverflow example on displaying a sphere in OpenGL

- First tutorial from the OpenGL Step by Step series

It's exciting to get our first program working. But the geometry I claimed was 3D just looks like a boring red disc (filled-in circle). How can we make it look 3D?

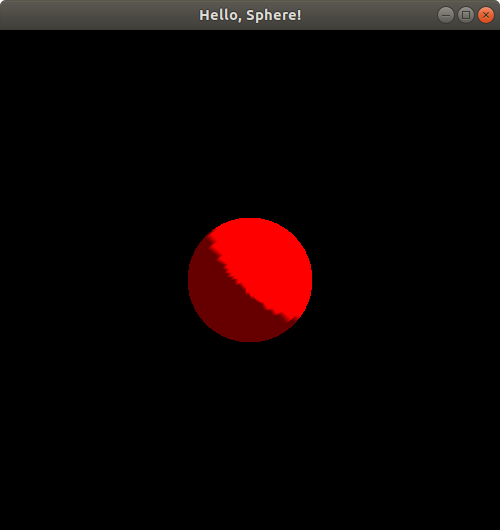

Hello, Shaded Sphere!

The 3D nature of shapes becomes more apparent when we add shading to our scene. In OpenGL, this is done by modeling the effects of light on the color of each vertex of any shape, assuming some material properties of the shape. By turning on a light, we can make our sphere "look" three-dimensional. In the same directory as the previous program (just for convenience, e.g., access to the same .clang-format file), create a new file called HelloSphereShaded.cpp. Here is my version, which you can copy or download: HelloSphereShaded.cpp.

Compile the program:

g++ HelloSphereShaded.cpp -o HelloSphereShaded -L/usr/lib/x86_64-linux-gnu/ -lGL -lglut -lGLU

Run it:

./HelloSphereShaded

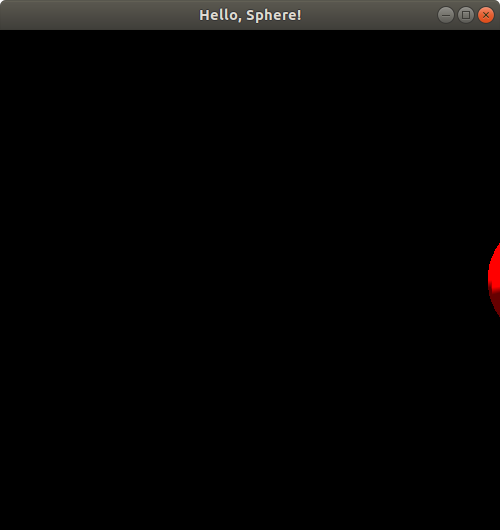

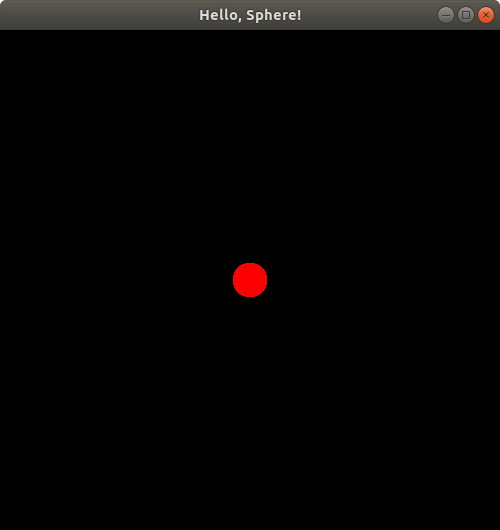

View the result:

And there you have it! Now there's some shading, revealing the 3D shape of our sphere!

To clarify exactly how the 3D coordinates of our shapes map to the 2D locations of shapes we see on the computer screen, let's do some experiments.

Positioning the Sphere

Make a new program called PositionSphere.cpp in the same directory (for convenience, you could put it in another directory if you really want). Here's my code: PositionSphere.cpp.

Compile this program:

g++ PositionSphere.cpp -o PositionSphere -L/usr/lib/x86_64-linux-gnu/ -lGL -lglut -lGLU

Run it:

./PositionSphere

The window that pops up should look exactly the same as before! Now let's change the call to putSphereCenteredAt to putSphereCenteredAt(1.0, 0.0, 0.0); and then compile and run the program using the same commands as before. Here's the result:

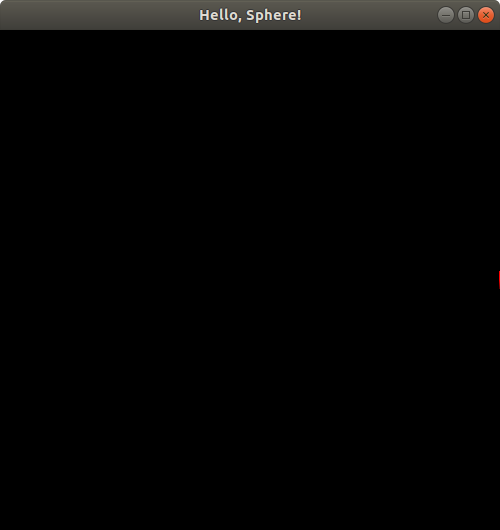

Hmm. It looks like the sphere is halfway out of our viewing window! Let's push the boundaries a bit further by changing that function call to putSphereCenteredAt(1.2, 0.0, 0.0);, compiling, and running again. Here's the result:

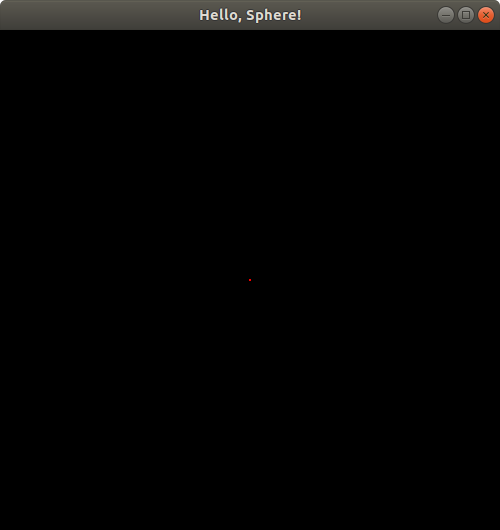

The sphere is almost gone: just a little sliver is left! Now, you might notice from the code above that the radius of this sphere is 0.25, and we have just moved the sphere to the right by 1.2. Suppose the viewing window shows x values up to x = 1, and the positive x direction points to the right of our window. Then we would expect the sphere to disappear completely once we shift it to the right (in the positive x direction) by 1.25 or more. Let's try putSphereCenteredAt(1.245, 0.0, 0.0);:

Sure enough, we see only a tiny sliver of the sphere remaining in our viewing window! If you're having trouble seeing it, it's just a few red pixels at the right edge of the image above, halfway up. Finally, let's try putSphereCenteredAt(1.25, 0.0, 0.0);. Sure enough, the resulting image shows no part of the sphere at all (I won't bother showing you its extremely boring, pitch-black appearance). So, it seems reasonable to guess that our viewing window extends up to x = 1 on the right side.

Similar experiments will reveal that the left end of our viewing window extends to x = -1, the top end goes to y = 1, and the bottom goes to y = -1. To verify this yourself, try putting 1.245 or -1.245 into the x or y coordinates of the putSphereCenteredAt function call one at a time and observe where the little sliver of the sphere appears.
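The arithmetic behind these experiments fits in a few lines. SphereOverlapsDefaultVolume and ClampToUnitRange below are hypothetical helpers, not part of the tutorial's code. The sketch makes three assumptions:

- The visible region is the open cube inferred from the experiments above.
- The sphere is a perfect sphere, not glutSolidSphere's triangle mesh.
- Back-face culling is ignored.

The helper finds the point of the cube nearest the sphere's center and checks whether that point lies strictly inside the sphere.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: clamps one coordinate to the default visible range
// [-1, 1] inferred from the experiments.
double ClampToUnitRange(double v) {
  return std::fmax(-1.0, std::fmin(1.0, v));
}

// Hypothetical helper: returns true if an idealized sphere centered at
// (cx, cy, cz) overlaps the cube (-1, 1)^3. Assumes a perfect sphere (not a
// triangle mesh) and ignores lighting and back-face culling.
bool SphereOverlapsDefaultVolume(double cx, double cy, double cz,
                                 double radius) {
  // Offset from the center to the nearest point of the cube, per axis.
  const double dx = cx - ClampToUnitRange(cx);
  const double dy = cy - ClampToUnitRange(cy);
  const double dz = cz - ClampToUnitRange(cz);
  // Visible only if that nearest point lies strictly inside the sphere.
  return dx * dx + dy * dy + dz * dz < radius * radius;
}
```

For example, a center at x = 1.245 with radius 0.25 gives true: the nearest visible point, x = 1, is only 0.245 from the center. A center at x = 1.25 gives false. These match the sliver and the blank window described above.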

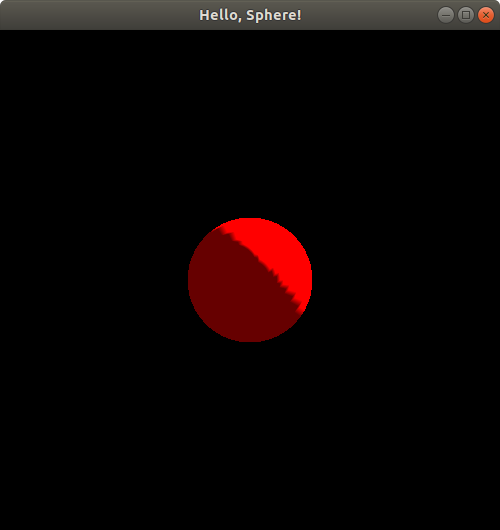

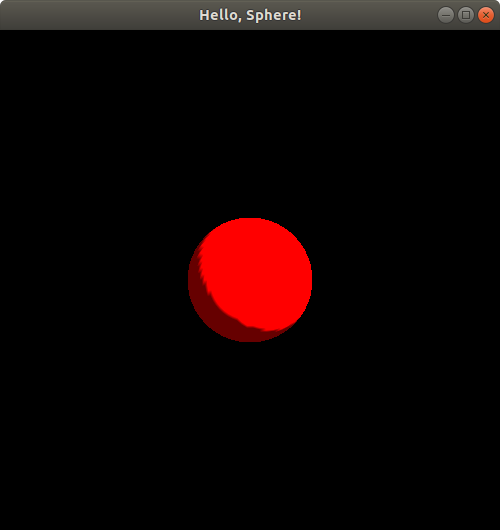

The z-coordinate is a bit odd in comparison. Let's try the same experiment, first with putSphereCenteredAt(0.0, 0.0, 0.75);:

If you compare this image to the original one from the first time we ran this program with no translation, or equivalently, the picture we got when we ran HelloSphereShaded.cpp, you may notice that the spheres look exactly the same size; the only difference between them seems to be the lighting and shading. This may seem a bit strange since the z-axis is presumably oriented somehow toward/away from us, i.e., perpendicular to the computer screen (since the x- and y-axes are both parallel to the computer screen), yet, unlike real life, bringing the sphere closer to us doesn't seem to be changing its size!

Let's continue experimenting. Let's now move the sphere to putSphereCenteredAt(0.0, 0.0, 1.0);. If you run this, you'll see a totally blank, black window again! What happened to the sphere? Why did it disappear? If the visible range of z is (-1, 1), just like that of x and y, shouldn't we still see half of the sphere when it is centered at z = 1?

Let's make one other change to the code, temporarily. Let's comment out these two lines in the drawBackground() function:

// glEnable(GL_CULL_FACE);
// glCullFace(GL_BACK);

Then, try running the program:

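Now we see the sphere again, although the lighting looks different from what we saw earlier. So the sphere wasn't entirely clipped away at z = 1. Instead, back-face culling was discarding the part still inside the visible range, because OpenGL classified those triangles as back-facing. Let's not analyze the lighting too much and keep moving the sphere farther along the z-axis. Let's move it now to putSphereCenteredAt(0.0, 0.0, 1.24);: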

Hmm. Looks like the sphere is starting to disappear! Let's try putSphereCenteredAt(0.0, 0.0, 1.2499);:

Just a tiny dot of the sphere is left in our viewing window! Change the z-coordinate to 1.25 to verify that the sphere does indeed disappear completely at that location. This strongly suggests that the visible range of z extends up to z = 1 on the positive side of the z-axis. The sphere has radius 0.25, so when it's centered at z = 1.25, the lowest z value on its surface is 1.25 - 0.25 = 1, which we can't see. When it's centered at z = 1.2499, its surface reaches down to z = 1.2499 - 0.25 = 0.9999, and we do see a tiny bit of it. But is the positive z direction pointing toward us, or away from us?

If you do the exact same experiments but with negative z values (and now you can uncomment those lines of code that mentioned something about culling), you'll notice the exact same pattern. When the sphere is centered at (0.0, 0.0, -1.0), it appears to have exactly the same size as it did at z = 0.75 and at z = 0, but with just a bit of a change in its lighting and shading:

If you continue the experiment and move the sphere's center to (0.0, 0.0, -1.2499), you'll see an image identical to the one with the center at (0.0, 0.0, 1.2499): just a tiny dot in the middle of the screen. If you move the center to (0.0, 0.0, -1.25), you'll see a totally blank black screen, just as with the center at (0.0, 0.0, 1.25). So, it appears that the visible range of z also extends down to z = -1.

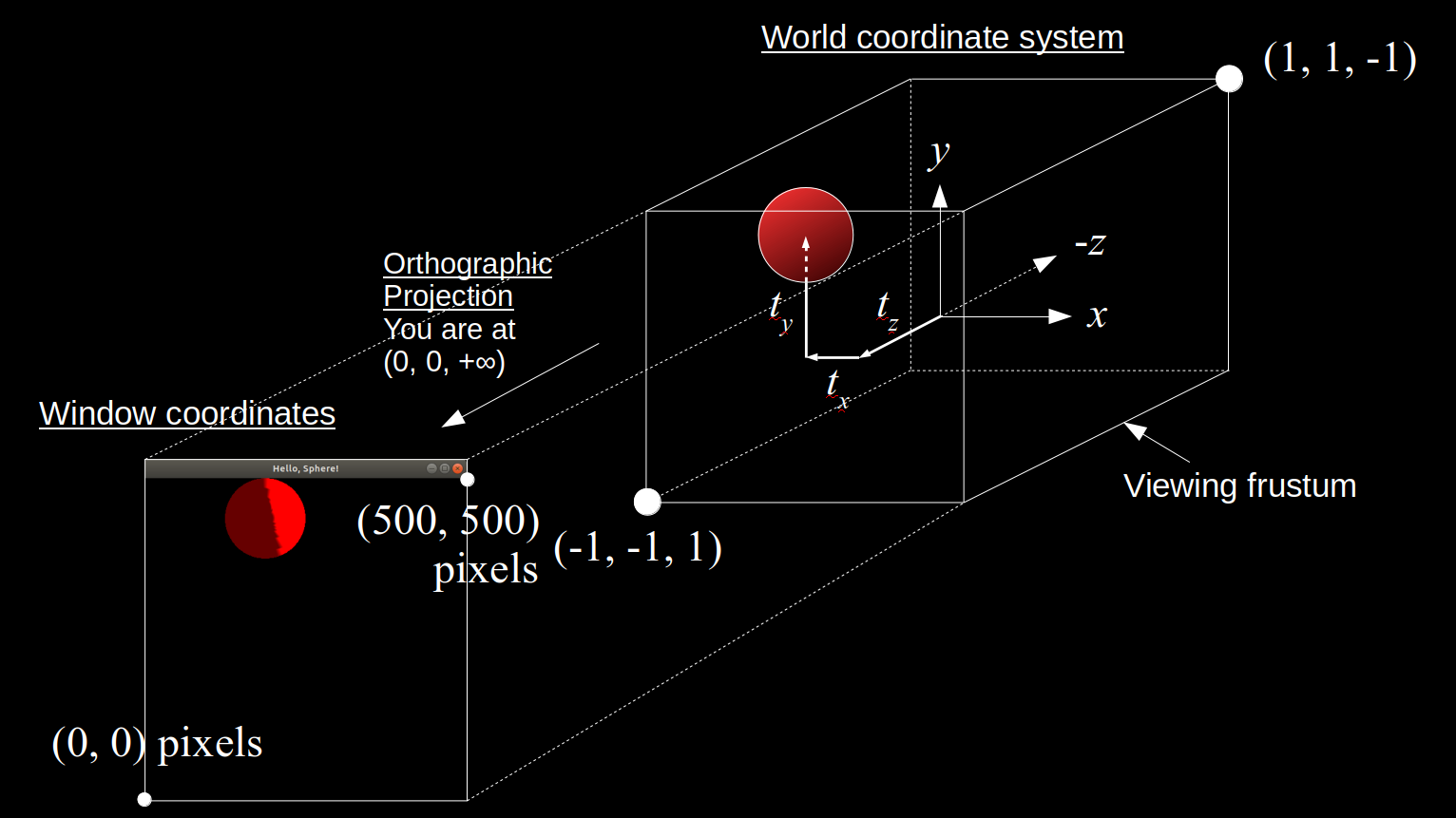

How OpenGL Maps from 3D to 2D

It still seems bizarre that, with all this experimenting, we can't tell whether z increases or decreases toward us! But at least we discovered that we can view any points within $(-1, 1)^3 \subset \mathbb{R}^3$, i.e., the box where $-1 < x, y, z < 1$. This box is OpenGL's default viewing frustum; with the default settings it is simply a cube. We also figured out that x increases to the right and y increases upward. Based on this, it might be reasonable to guess, by the right-hand rule, that z increases toward us. But how can we know for sure?

Instead of experimenting indefinitely, let's now get a more detailed understanding of exactly what OpenGL does to go from what appears to be 3D geometry, to a 2D image on our two-dimensional computer screen.

The glutSolidSphere function always generates a set of triangles that approximate the surface of a sphere that is centered at (0, 0, 0). To "move" a sphere to be centered at a location other than (0, 0, 0), we must adjust the coordinates of the vertices of the triangles approximating the sphere to be located in appropriate places such that the resulting sphere would be centered at the desired location. To handle this shifting, or translation, of the coordinates, our code calls the glTranslated function. But notice some other code around that function call: there is some pushing and popping of a matrix, and something called GL_MODELVIEW. What is all that?

OpenGL transforms the coordinates of every point it draws as follows. Let (x, y, z) be an arbitrary point, e.g., a vertex of one of the triangles making up the sphere created by a call to glutSolidSphere. OpenGL represents each point in homogeneous coordinates, so the point is represented by the coordinates p = (x, y, z, 1). These original coordinates of p are said to be in the object coordinate system, or local coordinate system. The terms "local" and "object" refer to a coordinate system whose origin is at the center of the sphere itself, regardless of where we're trying to center it. In other words, it is the coordinate system "local" to the "object," i.e., the sphere we want to draw.

OpenGL represents this 4D point as a column vector (a 4x1 matrix) and left-multiplies it by a 4x4 model matrix. The resulting 4x1 column vector represents the same point in OpenGL's world coordinate system. This is the default, universal coordinate system OpenGL uses, and all other coordinate systems are defined relative to it. Note that this coordinate system isn't explicitly defined anywhere; a universal coordinate system just exists theoretically. The only way for us to "see" it is to define at least one other coordinate system from which to view it.

Next, OpenGL left-multiplies the world-coordinate 4x1 column vector by a 4x4 view matrix. The result represents p's coordinates in the view coordinate system. Then OpenGL left-multiplies this vector by yet another 4x4 matrix, the projection matrix, yielding p's coordinates in the clip coordinate system. At this point, OpenGL clips away everything that lies outside the viewing frustum. Here, we're using OpenGL's default viewing frustum, in which the x, y, and z coordinates must lie within (-1, 1).

After clipping, OpenGL converts clip coordinates to normalized device coordinates (NDC) by dividing x, y, and z by the fourth homogeneous coordinate. Whatever projection produced the clip coordinates, every point that survives clipping ends up with NDC x, y, and z within (-1, 1). In our case, the fourth coordinate stays 1, so the NDC are the same as the clip coordinates. Finally, the NDC are mapped to window coordinates:

- $-1 < x_{\text{ndc}} < 1$ covers $0 < x_{\text{window}} < w$, where w is the window width in pixels specified in our code.
- $-1 < y_{\text{ndc}} < 1$ covers $0 < y_{\text{window}} < h$, where h is the window height.
- $-1 < z_{\text{ndc}} < 1$ covers $0 < z_{\text{window}} < 1$.

These ranges can be changed, for example with glViewport and glDepthRange, but what we described here is OpenGL's default behavior.

Let's denote the model matrix by $M_{\text{model}}$, the view matrix by $M_{\text{view}}$, and the projection matrix by $M_{\text{proj}}$. Then the clip coordinates of the point p, given in object coordinates, are $p_{\text{clip}} = M_{\text{proj}} \, M_{\text{view}} \, M_{\text{model}} \, p$. Let w = h = 500 pixels, the window size specified in our code.
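The matrix bookkeeping is easier to see in code. Below is a small sketch of this pipeline using plain arrays and no OpenGL calls; the names Identity, Translation, Multiply, ObjectToClip, and ClipToNdc are hypothetical. It assumes column vectors, as described above. It also assumes the model matrix is a pure translation, which is what glTranslated yields when applied to an identity modelview matrix.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column vectors and 4x4 matrices stored as m[row][col].
using Vec4 = std::array<double, 4>;
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 Identity() {
  Mat4 m{};  // value-initialization sets every entry to 0
  for (int i = 0; i < 4; ++i) m[i][i] = 1.0;
  return m;
}

// Translation by (tx, ty, tz); the offset sits in the last column.
Mat4 Translation(double tx, double ty, double tz) {
  Mat4 m = Identity();
  m[0][3] = tx;
  m[1][3] = ty;
  m[2][3] = tz;
  return m;
}

// Matrix product a * b.
Mat4 Multiply(const Mat4& a, const Mat4& b) {
  Mat4 c{};
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j)
      for (int k = 0; k < 4; ++k) c[i][j] += a[i][k] * b[k][j];
  return c;
}

// Left-multiplies the column vector v by m.
Vec4 Multiply(const Mat4& m, const Vec4& v) {
  Vec4 r{};
  for (int i = 0; i < 4; ++i)
    for (int k = 0; k < 4; ++k) r[i] += m[i][k] * v[k];
  return r;
}

// p_clip = M_proj * M_view * M_model * p.
Vec4 ObjectToClip(const Mat4& proj, const Mat4& view, const Mat4& model,
                  const Vec4& p) {
  return Multiply(Multiply(proj, Multiply(view, model)), p);
}

// Divides x, y, z by the fourth homogeneous coordinate to obtain NDC.
Vec4 ClipToNdc(const Vec4& clip) {
  return {clip[0] / clip[3], clip[1] / clip[3], clip[2] / clip[3], 1.0};
}
```

Consider identity view and projection matrices with Translation(0, 0, 1.2499) as the model matrix. The sphere's lowest point in object coordinates, (0, 0, -0.25, 1), then lands at clip (and NDC) z ≈ 0.9999, just inside the visible range.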

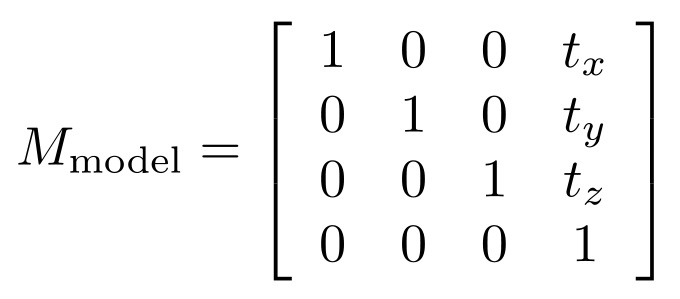

In our HelloSphereShaded.cpp and original PositionSphere.cpp programs, the sphere was centered at the origin of the world coordinate system, so our model matrix $M_{\text{model}}$ was the 4x4 identity matrix. Later, we started translating the sphere away from the origin by some amount $(t_x, t_y, t_z)$, e.g., $t_x = t_y = 0$ and $t_z = 1.2499$ in one experiment. That translation was included in the model matrix. That is:

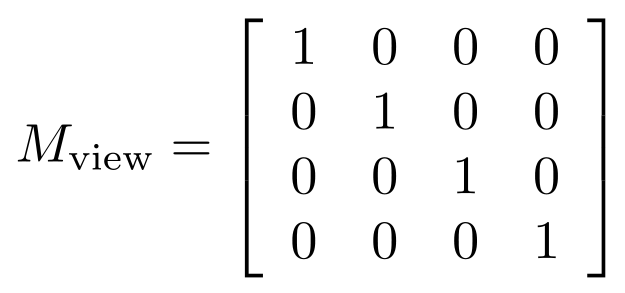

The view matrix is what represents the position and orientaton of the "camera" of OpenGL. By default, this is the identity matrix. This effectively makes us view all the objects in our OpenGL scene, by default, by having our camera eye located at (0, 0, +∞), while looking in the negative z direction. You'll see other sources saying the camera eye is effectively at the origin, (0, 0, 0), but the projection we describe below actually makes the concept of the eye being anywhere near the scene seem nonsensical. So, by default,

,

,

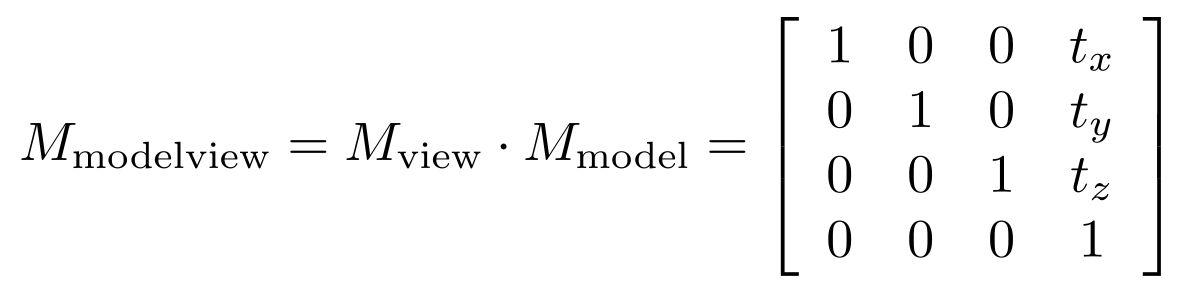

but if you look at our code above, you'll notice that the model matrix and any view matrix are all combined into a single stack of matrices OpenGL calls the GL_MODELVIEW matrix mode. So, OpenGL actually combines, at any point in the code, model and view matrices into a single matrix that gets applied to all object-space vertex coordinates to obtain view coordinates. In our case,

.

.

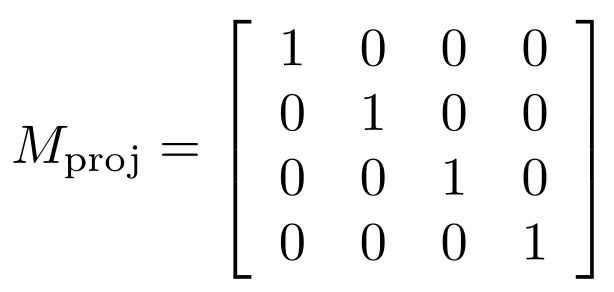

By default, in OpenGL, $M_{\text{proj}}$ is the 4x4 identity matrix, which represents what is called an orthographic projection. It's like having a camera located infinitely far from the origin, with no notion of perspective: everything looks just as close to us as everything else, since everything is, basically, infinitely far away. It's a bit like how we can't tell which stars in the sky are closer or farther just by looking at them, even though we can tell whether a basketball is 1 meter or 10 meters away. With OpenGL's default orthographic projection, it's as if everything is a star infinitely far away. OpenGL keeps the projection matrix on a separate matrix stack, GL_PROJECTION. Our code never mentions it, since we use the default projection matrix. If we wanted to change how the camera projects the scene, we could set the projection explicitly on the GL_PROJECTION stack in our code. So:

$$M_{\text{proj}} = I.$$

Combining all of this, we see that $p_{\text{clip}} = M_{\text{proj}} \, M_{\text{view}} \, M_{\text{model}} \, p = (x + t_x, \; y + t_y, \; z + t_z, \; 1)$. In other words, the projection and modelview matrices together simply translate the object-space coordinates of every point by $(t_x, t_y, t_z)$. After applying all of these transformations, OpenGL clips anything outside the viewing frustum. That is what cut off parts of the sphere in all the earlier examples.

The fourth (homogeneous) coordinate of $p_{\text{clip}}$ is 1, so dividing by it leaves $p_{\text{clip}}$ unchanged. Therefore $p_{\text{clip}}$ also gives the normalized device coordinates (NDC) of every point: $p_{\text{ndc}} = p_{\text{clip}}$. Finally, the NDC are scaled and shifted into window coordinates, so that x lies between 0 and w, y between 0 and h, and z between 0 and 1:

$$x_{\text{window}} = \frac{w}{2}\left(x_{\text{ndc}} + 1\right), \qquad y_{\text{window}} = \frac{h}{2}\left(y_{\text{ndc}} + 1\right), \qquad z_{\text{window}} = \frac{1}{2}\left(z_{\text{ndc}} + 1\right),$$

with $x_{\text{window}}$ and $y_{\text{window}}$ measured in pixels. You can verify that $x_{\text{ndc}} = -1$ maps to $x_{\text{window}} = 0$ and $x_{\text{ndc}} = 1$ maps to $x_{\text{window}} = w = 500$. This is how the 3D object coordinates end up mapped to 3D window coordinates.
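The three window-coordinate formulas translate directly into code. In this sketch, NdcToWindow and WindowCoords are hypothetical names. It assumes the viewport spans the entire window, as with glViewport(0, 0, w, h), and the default depth range of [0, 1].

```cpp
#include <cassert>

// Window coordinates: x and y in pixels, z as a depth value in [0, 1].
struct WindowCoords {
  double x, y, z;
};

// Default NDC-to-window mapping for a width-by-height pixel window, assuming
// the viewport covers the whole window and the depth range is [0, 1].
WindowCoords NdcToWindow(double x_ndc, double y_ndc, double z_ndc,
                         double width, double height) {
  return {0.5 * width * (x_ndc + 1.0), 0.5 * height * (y_ndc + 1.0),
          0.5 * (z_ndc + 1.0)};
}
```

For our 500x500 window, the NDC point (0.5, 0, 0) lands at pixel (375, 250) with depth 0.5.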

Finally, what we see on our 2D screen results from taking the 3D window coordinates and performing a depth test, which our code requests with the call glEnable(GL_DEPTH_TEST);. At each pixel, the depth test keeps the fragment with the smallest window depth $z_{\text{window}}$ seen so far (under OpenGL's default comparison, GL_LESS) and discards fragments behind it. This is how OpenGL makes sure our 2D screen shows whatever is nearest in the 3D scene we defined, much like a real camera's 2D image shows what the camera can see of a 3D scene.
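A toy model of that per-pixel comparison may help. The DepthBuffer class below is hypothetical and is not how OpenGL is implemented. It assumes OpenGL's default depth function (GL_LESS) and default clear depth (1.0). It stores an integer color label per pixel instead of real colors.

```cpp
#include <cassert>
#include <vector>

// Toy depth buffer illustrating the default depth test (GL_LESS): a fragment
// is kept only if its window depth is strictly less than the stored depth.
class DepthBuffer {
 public:
  // Every pixel starts at depth 1.0, OpenGL's default depth clear value.
  DepthBuffer(int width, int height)
      : width_(width), depth_(width * height, 1.0), color_(width * height, 0) {}

  // Tries to draw a fragment with a color label at pixel (x, y).
  // Returns true if it passed the depth test and was written.
  bool Submit(int x, int y, double z_window, int color) {
    const int i = y * width_ + x;
    if (z_window < depth_[i]) {
      depth_[i] = z_window;
      color_[i] = color;
      return true;
    }
    return false;
  }

  int ColorAt(int x, int y) const { return color_[y * width_ + x]; }
  double DepthAt(int x, int y) const { return depth_[y * width_ + x]; }

 private:
  int width_;
  std::vector<double> depth_;
  std::vector<int> color_;
};
```

Submit depths 0.6, then 0.8, then 0.3 at the same pixel, and the 0.3 fragment is what remains. Each fragment must be strictly nearer than what is already stored, so a fragment at exactly the clear depth of 1.0 is never drawn.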

All of these coordinate system shenanigans were to help us understand exactly what we see on the screen, and how what we see in pixels maps to the original 3D coordinates of the objects we constructed. This insight will come in handy as we start moving objects around on the screen during animations of physics-based simulations! Here is a diagram summarizing the overall default transformation from world coordinates to window coordinates in OpenGL:

For more details and insights on how OpenGL handles coordinate transformations, read these sources:

- Official OpenGL documentation on viewing and transformations

- LearnOpenGL article on coordinate transformations

- Detailed description and derivation of OpenGL transformations

- Stack Overflow post and answer on orthographic projections

- OpenGL matrix modes and order of projection and modelview matrix multiplications

Now that we understand, in detail, how our 3D world scene maps to our 2D window, we can proceed to implementing animations of physics-based simulations using OpenGL. Note that with our current setup, the 2D location of the center of a sphere in the window on our computer screen accurately shows the location of the sphere's center in the xy-plane in the world coordinate system, since the orthographic projection does not distort this 2D location in any way.

A Massless, Sizeless Particle

We'll go through a few steps to describe each physics-based simulation in each tutorial:

- Describe a mathematical model of the physical system we want to simulate.

- Design and implement that mathematical model in a computer program.

- Run the program and view the results.

Mathematical Model of a Massless, Sizeless Particle

Like Dr. Adam Bargteil and Dr. Tamar Shinar's course on physics-based animation from SIGGRAPH 2018 and 2019, we begin by considering a very simple object to simulate: a theoretical particle that is infinitely small and has no mass. You can't see it. You can't feel it. It's almost as if it isn't there at all. Oh, but it is there, if we define it to be: an infinitely small dot, moving around in space or sitting still in space, that defies all of our senses. In a nutshell, a particle p is nothing but a vector function $\mathbf{x}_p(t)$ of time t representing the position of the particle at any given time. We can think of a simulation of a massless, sizeless particle as us staring at a sequence of (x, y, z) coordinates changing over a period of time, scrolling by on our computer screens. Here is a more formal definition.

Definition. Let T be a closed interval in the set of real numbers, $\mathbb{R}$. Each element of T is called a time or instant of time. A particle, p, is a function $\mathbf{x}_p : T \to \mathbb{R}^3$ that assigns to every time $t \in T$ a position $\mathbf{x}_p(t) = (x(t), y(t), z(t))$ in 3D space. Here $x, y, z : T \to \mathbb{R}$ are functions giving the coordinates of the particle p at each time t.

We'll refrain from formally defining a simulation of a massless, sizeless particle, even though we basically do so here; the details of this "definition" will change as we deal with different systems in this tutorial. You can think of such a simulation simply as a discrete sampling of the position function $\mathbf{x}_p(t)$ at specific instants in time. That is, suppose we pick a strictly increasing sequence of times $t_0 < t_1 < \dots < t_N$ from our time interval T. Then a simulation stepping through those times is just a sequence of positions in 3D space, $\mathbf{x}_p(t_0), \mathbf{x}_p(t_1), \dots, \mathbf{x}_p(t_N)$, where $\mathbf{x}_p(t_i) = (x(t_i), y(t_i), z(t_i))$ is the position of the particle at time $t_i$. For systems other than a single, practically nonexistent particle, a simulation could be defined the same way, but the state of the system may include more than positions, such as velocities and other values. A simulation can thus generally be defined as a sequence of states, where the state must be defined explicitly for each system we simulate.
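In code, this view of a simulation is just a loop that evaluates the position function at each chosen time. SampleTrajectory is a hypothetical name, and the Vec3 struct is a minimal stand-in for a 3D vector. Any position function of time can be passed in.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Vec3 {
  double x, y, z;
};

// Samples a particle's position function x_p at strictly increasing times
// t_0 < t_1 < ... < t_N, returning the positions x_p(t_0), ..., x_p(t_N).
std::vector<Vec3> SampleTrajectory(
    const std::function<Vec3(double)>& position,
    const std::vector<double>& times) {
  std::vector<Vec3> states;
  states.reserve(times.size());
  for (std::size_t i = 0; i < times.size(); ++i) {
    // Enforce the strictly increasing requirement from the definition.
    assert(i == 0 || times[i] > times[i - 1]);
    states.push_back(position(times[i]));
  }
  return states;
}
```

For a richer system, the element type would become a state struct (positions plus velocities, for example) instead of Vec3, matching the generalization described above.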

Simulating a Massless, Sizeless Particle

Despite a massless, sizeless particle essentially being an invisible object, we will represent such a particle conceptually as a sphere with a nonzero radius so we can, well, actually see it as it moves around on the screen. Yes, the sphere is just a visual aid for us to see where the particle is located in space as it moves. The particle itself, according to our theoretical model here, is infinitely small. We don't really need to assume the particle is infinitely small, but I just do it anyway since we haven't yet defined a particle to be anything other than a point moving around in space over time. It'll make more sense to talk about particle-like objects that have some nonzero size when we start looking at objects interacting with other things in the scene.

Let's start by making a sphere move around the window. The infinitely small point at the center of the sphere will represent a theoretical massless, sizeless particle's position as it moves. Let's make a particle whose x and y coordinates change over time. Let the particle's position (i.e., the position of the center of the sphere representing the particle) be $\mathbf{x}(t) = (x(t), y(t), 0)$, where $x(t) = 0.5 \cos t$, $y(t) = 0.5 \sin t$, and t is the time, in seconds, that the program has been running. This makes the particle move counterclockwise along a circle of radius 0.5 about the origin of the coordinate system shown above. Here is the code, in a new file called MovingParticle.cpp.
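The position function itself is a one-liner. CircularPosition is a hypothetical name, and how MovingParticle.cpp measures t is an assumption here. In a GLUT program, one option is glutGet(GLUT_ELAPSED_TIME) / 1000.0, since GLUT_ELAPSED_TIME reports the milliseconds elapsed since glutInit was called.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
  double x, y, z;
};

// x(t) = (0.5 cos t, 0.5 sin t, 0): counterclockwise motion on a circle of
// radius 0.5 about the origin, with t in seconds.
Vec3 CircularPosition(double t) {
  return {0.5 * std::cos(t), 0.5 * std::sin(t), 0.0};
}
```

One trip around the circle takes 2π ≈ 6.28 seconds. That is not a whole number of seconds, so a recording with a whole-second duration cannot loop seamlessly.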

Compile the program:

g++ MovingParticle.cpp -o MovingParticle -L/usr/lib/x86_64-linux-gnu/ -lGL -lglut -lGLU

And run it:

./MovingParticle

Observe the resulting animation. You will see a red sphere moving around the window in a circle! Here is my animation. Ignore the slight jump as it loops: my animated GIF recording program only allows whole numbers of seconds as the recording duration, which causes the jump:

We just completed our first simulation! We specified a way for the particle to move as time passes and then implemented a program that animates a sphere following that movement!

Mathematical Model of a Massless, Sizeless Particle in a Velocity Field

But okay, this doesn't seem very "physics-based," does it? Let's step slightly further into physics by defining a simple concept.

Definition. If a particle's position function $\mathbf{x}(t)$ is differentiable with respect to time on an open interval of $\mathbb{R}$ that contains T, then the velocity of the particle is the time derivative $\mathbf{x}'(t)$ of the position function.
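For the circular motion from the previous section, the definition gives $\mathbf{x}'(t) = (-0.5 \sin t, 0.5 \cos t, 0)$. This velocity is tangent to the circle and has constant speed 0.5. The sketch below (all names hypothetical) checks that derivative against a central-difference approximation. Central differences are one standard way to estimate a derivative numerically when no formula is available.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
  double x, y, z;
};

// Position on the circle of radius 0.5 from the previous section.
Vec3 CircularPosition(double t) {
  return {0.5 * std::cos(t), 0.5 * std::sin(t), 0.0};
}

// Exact velocity x'(t), obtained by differentiating each coordinate.
Vec3 CircularVelocity(double t) {
  return {-0.5 * std::sin(t), 0.5 * std::cos(t), 0.0};
}

// Central-difference estimate (x(t + h) - x(t - h)) / (2h) of the velocity
// of any position function.
template <typename PositionFn>
Vec3 ApproximateVelocity(PositionFn position, double t, double h) {
  const Vec3 ahead = position(t + h);
  const Vec3 behind = position(t - h);
  return {(ahead.x - behind.x) / (2.0 * h), (ahead.y - behind.y) / (2.0 * h),
          (ahead.z - behind.z) / (2.0 * h)};
}
```

With h = 1e-5, the estimate at t = 1 agrees with the exact derivative to well within 1e-8.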

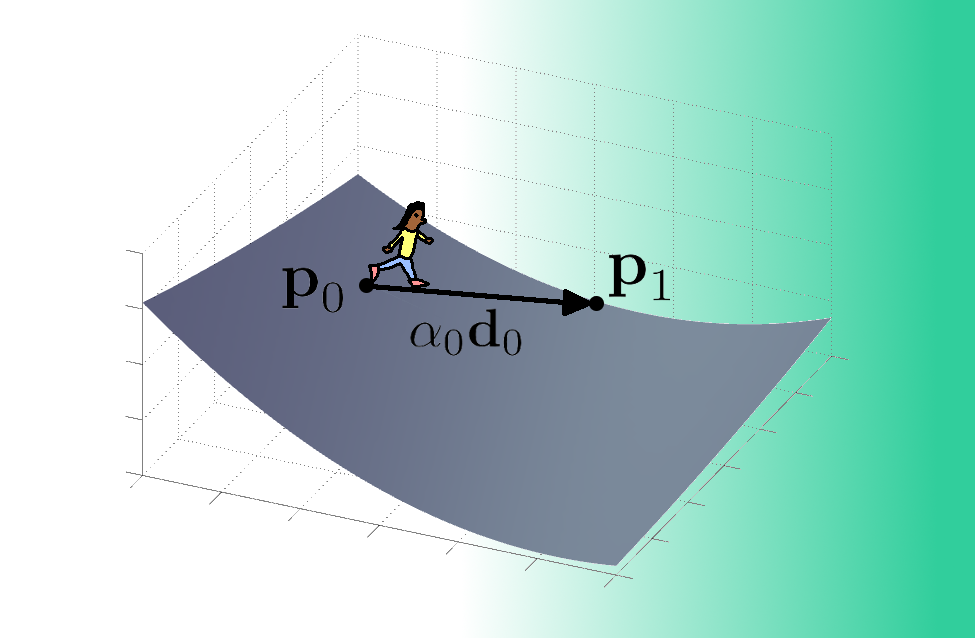

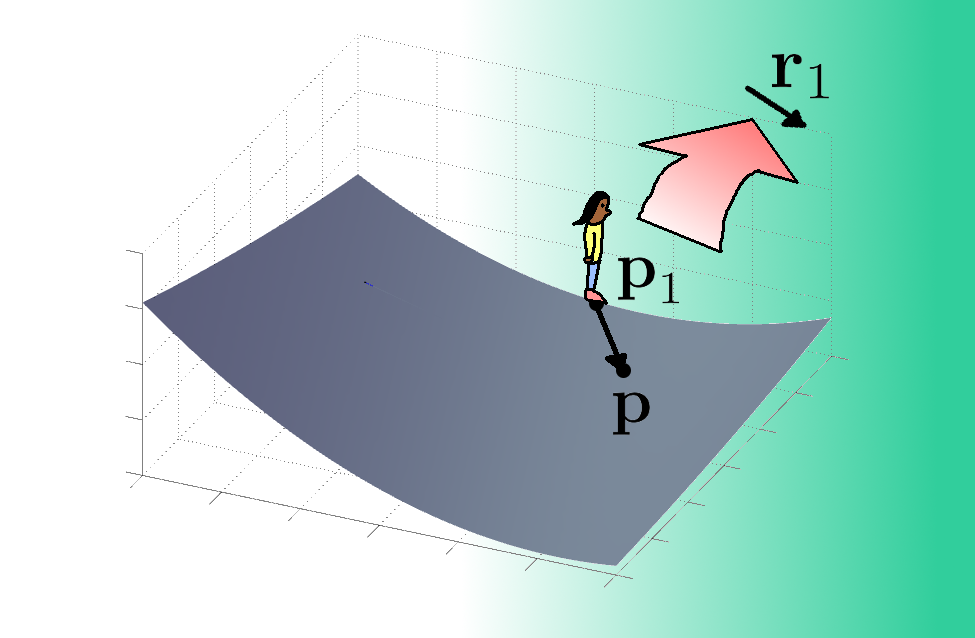

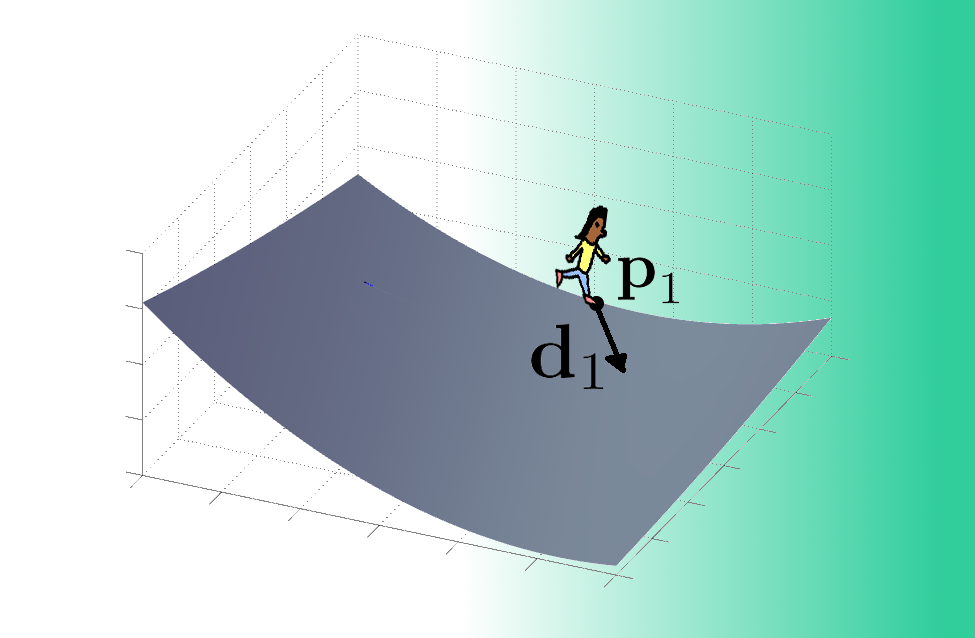

To step toward something more physically driven, let's now imagine our particle floating in the air, pushed around by a very simplistic mathematical model of wind. Specifically, let's imagine the wind is described by a velocity field: a wind velocity vector is given at every point in space and every instant of time. In this case, let our wind velocity be $\mathbf{u}(\mathbf{x}, t) = \mathbf{u}(x, y, z, t) = (u(x, y, z, t), v(x, y, z, t), w(x, y, z, t))$, where

$$u(x, y, z, t) = -\left(x - 0.5\cos(t^2)\cos t\right), \qquad v(x, y, z, t) = -\left(y - 0.5\cos(t^2)\sin t\right), \qquad w(x, y, z, t) = 0.$$

Following the lead of the Bargteil and Shinar course notes, our particle starts at a specified initial position $\mathbf{x}(0)$ at t = 0, in this case $\mathbf{x}(0) = (0, 0, 0)$. We then keep updating its position by Forward Euler integration:

$$\mathbf{x}(t + \Delta t) = \mathbf{x}(t) + \Delta t \, \mathbf{u}(\mathbf{x}(t), t).$$

That is, Δt is the time from one call to RenderScene() to the next. After stepping forward by Δt, the particle's new position is its current position plus Δt times the velocity at its current position and the current time. This time-stepping scheme is slightly more sophisticated than the simplest Forward Euler scheme, which uses a fixed Δt. We let the computer measure Δt each time it calls RenderScene(), and since the time between calls can vary, Δt can vary while the program runs. And sorry for mentioning a function name from the code in the mathematical modeling part of the text; I just wanted to clarify how the time step, also known as the time increment, relates to the code we've seen so far.
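Here is a minimal sketch of this update rule, independent of OpenGL; WindVelocity, ForwardEulerStep, and Integrate are hypothetical names. Writing the field out this way also helps explain the animation described in the next section. The velocity points from the particle toward the moving point 0.5 cos(t²)(cos t, sin t, 0), with magnitude equal to the distance to that point. Because cos(t²) oscillates faster as t grows, the direction of that pull changes faster and faster.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 {
  double x, y, z;
};

// Wind velocity field u(x, t): points from p toward the moving target
// 0.5 cos(t^2) (cos t, sin t, 0), with magnitude equal to the distance.
Vec3 WindVelocity(const Vec3& p, double t) {
  const double scale = 0.5 * std::cos(t * t);
  return {-(p.x - scale * std::cos(t)), -(p.y - scale * std::sin(t)), 0.0};
}

// One Forward Euler step: x(t + dt) = x(t) + dt * u(x(t), t).
Vec3 ForwardEulerStep(const Vec3& p, double t, double dt) {
  const Vec3 u = WindVelocity(p, t);
  return {p.x + dt * u.x, p.y + dt * u.y, p.z + dt * u.z};
}

// Integrates from x(0) = start over a list of (possibly unequal) time steps,
// mimicking a dt that varies between successive frames.
Vec3 Integrate(const Vec3& start, const std::vector<double>& steps) {
  Vec3 position = start;
  double t = 0.0;
  for (double dt : steps) {
    position = ForwardEulerStep(position, t, dt);
    t += dt;
  }
  return position;
}
```

Starting from (0, 0, 0) at t = 0, the velocity is (0.5, 0, 0), so a single step of 0.1 seconds moves the particle to (0.05, 0, 0).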

Simulating a Massless, Sizeless Particle in a Velocity Field

Here is the code, in a file I called VelocityParticle.cpp.

Compile:

g++ VelocityParticle.cpp -o VelocityParticle -L/usr/lib/x86_64-linux-gnu/ -lGL -lglut -lGLU

Run:

./VelocityParticle

Observe the resulting animation. It's a rather interesting animation since it looks like something "physics-based" is going on, though it's hard to tell exactly what. Rather than a particle "floating" in the wind, the animation looks more like a particle attached to a few rubber bands being pulled in various directions, eventually oscillating faster and faster as it is pulled toward the center of the window. Here is a looping animation of roughly the first 15 seconds of the animation, minus a little bit at the very beginning:

This draws our attention to the fact that it's pretty challenging and unintuitive to try to manually specify velocity fields, even with some cleverly crafted formulas, that actually produce a realistic, physics-based effect that matches our vision for the system we want to model and simulate. As Bargteil and Shinar do in their course notes, we shall now proceed to the next level of physical realism in our mathematical modeling of a particle.

Notice how the code above has the keyword static thrown around in a few places. This is not great programming style or structure! It suggests we're hacking our way through an important responsibility: keeping track of the state of our program, namely, the particle's position and the elapsed time. We need to delegate this responsibility to an object that will stay stored in memory between subsequent calls to RenderScene(). Let's make an improved version of this program in a file called VelocityParticleBetter.cpp.

While we got rid of the use of the static keyword, this code does still have a global ParticleSimulator* variable. To ensure it is used properly, I made a global RenderScene() function that checks that this variable has been initialized. Since GLUT requires that we use some static or global function as the display and idle functions, we have limited simple options for fully encapsulating all of our simulation and rendering into the classes. In this improved solution, we have better defined the jobs the program does by delegating them to different classes: the Particle encapsulates the storing and updating of a particle's position while the ParticleSimulator class does the job of advancing the simulation through time and directing its Particle to update and redraw itself as time elapses.
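The division of labor described above can be sketched without any GLUT code. In this minimal C++ sketch, the class names Particle and ParticleSimulator follow the text, but their members (Update, Advance, Time, GetParticle) and the Point2D type are hypothetical, not the author's VelocityParticleBetter.cpp. A GLUT display or idle callback would call Advance and then draw the particle's position.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 2D point type for this sketch.
struct Point2D { double x; double y; };

// Stores and updates one particle's position.
class Particle {
public:
    explicit Particle(Point2D start) : position_(start) {}
    const Point2D& Position() const { return position_; }

    // Forward Euler step through the wind field used earlier (z omitted).
    void Update(double t, double dt) {
        const double r = 0.5 * std::cos(t * t);
        const double u = -(position_.x - r * std::cos(t));
        const double v = -(position_.y - r * std::sin(t));
        position_.x += dt * u;
        position_.y += dt * v;
    }

private:
    Point2D position_;
};

// Owns the state that `static` locals used to hold: the particle and the
// elapsed simulation time. GLUT callbacks would reach it through one pointer.
class ParticleSimulator {
public:
    explicit ParticleSimulator(Point2D start) : particle_(start) {}

    // Advances the simulation by dt; a display callback could then draw
    // GetParticle().Position().
    void Advance(double dt) {
        assert(dt >= 0.0);
        particle_.Update(time_, dt);
        time_ += dt;
    }

    double Time() const { return time_; }
    const Particle& GetParticle() const { return particle_; }

private:
    Particle particle_;
    double time_ = 0.0;
};
```

Because the time lives inside ParticleSimulator, two simulators can run side by side without sharing hidden static state.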

A Particle with Mass

We will now step into Newtonian mechanics, where we add the concepts of mass, force, and acceleration to create more physically realistic particle simulations.

Mathematical Model of a Particle with Mass

Definition. A particle with mass is a particle together with a strictly positive real number, m, called its mass.

This definition may seem meaningless since we didn't really define what "mass" means. In fact, we won't define mass directly other than that it is a positive real number. We will make it useful though, by describing the governing axioms (assumptions or postulates) of Newtonian mechanics: namely, Newton's Laws of Motion. We will start with just Newton's Second Law. Later we'll add the Third Law. The First Law is actually a theorem that follows directly from the Second Law. Before stating Newton's Second Law, we need to define a concept and then make an assumption.

Definition. Given a nonempty set of vectors in R³, called the forces acting on a particle, their sum is called the net force acting on the particle.

And now the assumption.

Axiom. The position function of a particle, x(t), is assumed to be twice differentiable with respect to time at all times t in some open interval of R that contains T.

Now we can define the concept of acceleration.

Definition. The acceleration of a particle is the second time derivative of the particle's position. That is, if x(t) is the position of the particle at time t, then the acceleration of the particle at time t is x''(t).

Now that we assumed we can calculate an acceleration for any time value we encounter during a simulation, we can safely state Newton's Second Law.

Axiom (Newton's Second Law of Motion). The net force, f ∈ R³, acting on a particle is equal to the particle's mass, m, times the particle's acceleration. That is, f = mx''(t).

Newton's Second Law is based on a couple of particularly powerful realizations about nature:

- The motion of objects is governed by a concept called "force," and these forces are additive in nature; that is, any set of forces acting on an object, such as its weight and how hard we push it, can just be added together into a net force, which then governs how the object accelerates.

- The constant of proportionality relating the net force acting on an object to the object's acceleration, i.e., the object's mass, is itself a description of how much matter the object contains.

Newton's Second Law is the magic that makes physics what it is: it gives us the recipe for dynamics, relating the forces acting on objects (kinetics) to the motion of those objects (kinematics).

Newton's First Law, while commonly stated as another axiom of Newtonian mechanics, is actually a consequence of the Second Law.

Theorem (Newton's First Law of Motion). A particle with a net force of zero (0 ∈ R³) acting on it maintains a constant velocity at all times.

Proof: Since the net force f acting on the particle is zero, we know by Newton's Second Law that 0 = f = mx''(t). Since we defined the mass of a particle as being strictly positive, mx''(t) = 0 means that x''(t) = 0 for all times t ∈ T. Taking the antiderivative of this equation with respect to time yields x'(t) = C for some constant vector C ∈ R³ that doesn't vary with time, for all t ∈ T. Since x'(t) is the velocity of the particle, we have proved that the particle's velocity stays constant with time. This completes the proof. ■

Notably, if the initial velocity of a particle experiencing zero net force was zero, the particle will remain at velocity zero. Otherwise it will continue moving at the same constant velocity at all times. This explains a commonly stated version of Newton's First Law, that a particle at rest will remain at rest and a particle in motion will remain in motion at a constant velocity unless acted on by an outside force (which must result in a net nonzero force acting on the particle).

Simulating a Particle with Mass

Here is an implementation of the model described above, in a file I called MassParticleGravity.cpp.

The force is a constant gravitational force; it does not change with time or space. Our code has slightly changed its integration scheme from forward Euler to symplectic Euler, as described in Bargteil and Shinar's course notes, now that we are dealing with two levels of integration: one to go from force and acceleration to velocity and another to go from velocity to position.
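A minimal sketch of one symplectic Euler step with a constant force: the velocity is updated first, and the position is then advanced with the new velocity (forward Euler would use the old one). The function names MakeUpdatedVelocity and MakeUpdatedPosition and the types Vector2D and Point2D come from the text, but these signatures and members are assumptions, not the author's MassParticleGravity.cpp. The constant kMoonGravity uses the Moon's approximate surface gravity, about 1.62 m/s².

```cpp
#include <cassert>

// Hypothetical minimal types; the tutorial's own Vector2D/Point2D may differ.
struct Vector2D { double x, y; };
struct Point2D { double x, y; };

constexpr double kMoonGravity = 1.62;  // m/s^2, approximate surface gravity of the Moon

// Stateless velocity update: v_new = v + dt * f / m.
Vector2D MakeUpdatedVelocity(const Vector2D& velocity, const Vector2D& force,
                             double mass, double dt) {
    assert(mass > 0.0);  // a particle's mass is strictly positive
    return Vector2D{velocity.x + dt * force.x / mass,
                    velocity.y + dt * force.y / mass};
}

// Stateless position update: x_new = x + dt * v_new.
// Passing the *updated* velocity is what makes this symplectic Euler.
Point2D MakeUpdatedPosition(const Point2D& position, const Vector2D& new_velocity,
                            double dt) {
    return Point2D{position.x + dt * new_velocity.x,
                   position.y + dt * new_velocity.y};
}
```

For a 2 kg projectile starting at the origin with velocity (1, 2) m/s under lunar gravity and Δt = 0.5 s, one step gives v = (1, 1.19) m/s and x = (0.5, 0.595) m.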

You may find it a little odd that we structured our code to create and return new instances of Vector2D and Point2D from our MakeUpdatedVelocity and MakeUpdatedPosition functions, respectively. Isn't it inefficient to create and return new objects instead of just modifying one existing object directly? Well, it turns out that return value optimization, which we describe later in this tutorial, is a common optimization of code applied by C++ compilers. It eliminates some unnecessary copying, for example, of a value that is simply being moved from a function's return value into the variable receiving it. But, you may wonder, why rely on compiler optimizations? Why not just pass a Particle's velocity or position as pointers to the functions to alter their values without any copying? (Pass-by-reference is problematic here since, if the reference refers to an object that no longer exists in memory, the function has no way of verifying that.) It turns out there are advantages to stateless functions, i.e., functions that, in the function body, do not alter any value outside of the function's scope. We'll describe more on these advantages later. Notice that this stateless function approach also makes the code read more like mathematical equations: one line of code updates the velocity and another line of code uses that velocity to update the position.

That said, there are scenarios where return value optimization is not applied by C++ compilers. For instance, when returning a named variable, or returning different named objects from different code paths, compilers may or may not apply return value optimization by default, or at all.
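To make this distinction concrete, here is a small C++17 sketch (the Tracker type and both functions are hypothetical) that counts copy and move constructions. Since C++17, returning an unnamed temporary (a prvalue) is guaranteed to construct the result directly in the caller, so no copy or move happens; returning a named local relies on named return value optimization (NRVO), which compilers are permitted but not required to perform.

```cpp
#include <cassert>

// Counts copy and move constructions so elision can be observed.
struct Tracker {
    static inline int copies_or_moves = 0;  // C++17 inline static data member
    double value;

    explicit Tracker(double v) : value(v) {}
    Tracker(const Tracker& other) : value(other.value) { ++copies_or_moves; }
    Tracker(Tracker&& other) noexcept : value(other.value) { ++copies_or_moves; }
};

// Returns an unnamed temporary (a prvalue): since C++17 the object is built
// directly in the caller's storage, so neither constructor above runs.
Tracker MakeUnnamed(double v) { return Tracker(v); }

// Returns a named local: NRVO is permitted but not required, so this may
// perform zero or one move depending on the compiler and its flags.
Tracker MakeNamed(double v) {
    Tracker t(v);
    return t;
}
```

Return statements of the form return Vector2D{...}; therefore fall in the guaranteed case, while a function that builds a named result and returns it depends on the compiler.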

Here is the animation that results from this physics-based simulation of a projectile subject to the Moon's gravitational force:

Mathematical Model of Particles with Mass, Size, and Multiple Forces

Much of physics-based simulation follows, or tries to follow, conservation laws, which we shall consider axioms of many of our mathematical models going forward. However, some of these conservation laws are actually theorems that themselves follow from Noether's Theorem about symmetries in space and time, but we will not go that deeply into theoretical physics in this tutorial.

Axiom (Law of Conservation of Mass). The total mass of a system is constant.

Axiom (Law of Conservation of Linear Momentum). The total linear momentum of a system is constant.

Axiom (Law of Conservation of Energy). The total energy of a system is constant.

We haven't defined energy here yet. In fact, its exact definition may vary from one system to another. However, conservation of mechanical energy will come in handy when we try to handle colliding particles. By "system" here we mean what is typically called an "isolated system," one that does not gain or lose any matter or energy. A conservation law we did not state here, since we're not using it just yet, is the law of conservation of angular momentum.

Theorem (Newton's Third Law of Motion). In a system consisting of two particles with mass, when the two particles collide with each other (assuming an instantaneous, elastic collision, i.e., with no loss of energy to deformation of a particle or to heat), the force applied by the first particle on the second particle (the action force) is equal in magnitude and opposite in direction to the force applied by the second particle on the first particle (the reaction force).

Proof: Let the mass of the first particle be m₁, the mass of the second particle be m₂, the velocity of the first particle before the collision be u₁, and the velocity of the second particle before the collision be u₂. Then the total momentum of the system is m₁u₁ + m₂u₂. Let the corresponding velocities of the particles immediately after the collision be u′₁ and u′₂. Then by the law of conservation of linear momentum, m₁u₁ + m₂u₂ = m₁u′₁ + m₂u′₂. Then m₁(u′₁ - u₁) = -m₂(u′₂ - u₂). Since we're assuming this is an instantaneous (but differentiable) change in momentum, we can rewrite this equation as just dp₁/dt = -dp₂/dt, where p₁ is the momentum of the first particle as a function of time and p₂ is the momentum of the second particle. But by Newton's Second Law, the instantaneous rate of change of momentum, p, for any particle with velocity u, acceleration a, and constant mass m, is dp/dt = d(mu)/dt = ma = f, where f is the force acting on the particle. So, dp₁/dt = f₁, where f₁ is the action force on the first particle, and dp₂/dt = f₂, where f₂ is the reaction force on the second particle, and the above equation shows f₁ = -f₂, i.e., the action and reaction are exact opposite vectors. ■

We'll use another related fact in our simulation.

Theorem (Impulse-Momentum Theorem). The change in momentum of each particle in a two-particle elastic collision scenario like that described above is equal to the integral of the force applied to the particle during the collision calculated over the time duration of the collision.

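Proof: Newton's Second Law in momentum form says f = dp/dt. Integrating both sides over the duration of the collision, from time t₀ to time t₁, gives p(t₁) - p(t₀) = ∫ f dt, with the integral taken from t₀ to t₁. ■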

In practice, we'll use this theorem to approximate an average impulse force that results from the change in momentum of a particle due to a collision that we assume will occur over the duration Δt of the simulation for any given time step. So yes, we will assume that the collision is not really instantaneous, but rather occurs during a single time step of our simulation, which is as instantaneous as things ever really get in computer simulations.

The laws of conservation of linear momentum and energy above (specifically kinetic energy in our example below which lacks gravity and thus potential energy) can be used to derive formulas for the change in momentum of each particle upon an elastic collision (no loss of energy or momentum) with another particle, which by the impulse force approximation mentioned above, will yield collision forces we can use to simulate a box of particles elastically colliding with each other.
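As a sketch of where those conservation laws lead, the function below computes collision forces for two touching particles, under the assumptions stated above: an elastic collision whose momentum exchange is spread over one time step Δt. Requiring that total linear momentum and kinetic energy both be conserved, with the exchange directed along the unit normal n between the particle centers, gives an exchanged momentum of magnitude J = 2m₁m₂/(m₁ + m₂) · ((u₁ - u₂) · n); the impulse-momentum theorem then gives an average force of magnitude J/Δt. The names Vec2, CollisionForces, and ElasticCollisionForces are hypothetical, and this 2D version is not the author's CollidingParticles.cpp.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { double x, y; };

double Dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }

struct CollisionForces { Vec2 on_first, on_second; };

// Impulse forces for an elastic collision between particle 1 (mass m1,
// center c1, velocity v1) and particle 2, spread over one time step dt.
// Zero forces are returned when the particles are already separating.
CollisionForces ElasticCollisionForces(double m1, Vec2 c1, Vec2 v1,
                                       double m2, Vec2 c2, Vec2 v2, double dt) {
    assert(m1 > 0.0 && m2 > 0.0 && dt > 0.0);
    const Vec2 d{c2.x - c1.x, c2.y - c1.y};
    const double len = std::sqrt(Dot(d, d));
    assert(len > 0.0);
    const Vec2 n{d.x / len, d.y / len};  // unit normal from particle 1 to 2
    const double approach = Dot(Vec2{v1.x - v2.x, v1.y - v2.y}, n);
    if (approach <= 0.0) return {{0.0, 0.0}, {0.0, 0.0}};
    // Momentum exchanged, from conservation of momentum and kinetic energy.
    const double J = 2.0 * m1 * m2 / (m1 + m2) * approach;
    // Impulse-momentum theorem averaged over one step: f = delta p / dt.
    const Vec2 f1{-J * n.x / dt, -J * n.y / dt};
    return {f1, {-f1.x, -f1.y}};  // Newton's third law: equal and opposite
}
```

For two equal masses colliding head-on with one at rest, applying these forces for one step makes the particles exchange velocities. For masses 2 and 1 approaching each other at velocities 1 and -1, the velocities become -1/3 and 5/3, and both the total momentum (1) and the total kinetic energy (1.5) are unchanged.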

Simulating Colliding Particles with Mass and Size

Now we shall implement a simulation of multiple particles, without gravity involved, bouncing around inside an imaginary box whose walls are the top, bottom, left, and right walls of our viewing frustum. The particles have a nonzero radius so they behave like balls; the sphere representing each particle is no longer just a visual aid. The particles can also collide, elastically, with each other.

The implementation structure builds on the previous example, but note that we had to restructure it a bit so that all forces are computed first, entirely outside of the Particle class, and only then do we update all the particle positions. Here is my code in a file called CollidingParticles.cpp.

Here are the first 15 seconds or so of a version of this simulation I created earlier that starts the simulation time at exactly 0.0 seconds instead of the current time from GLUT:

If you play with the parameters of this simulation, like changing the masses or initial velocities or positions of the particles, you may see some weird behaviors, like a particle getting stuck slightly dangling outside the box, or one particle getting "stuck" inside another particle. If you try to damp the collisions a bit, say, by having the impulse force be 0.99 times what we compute now, you'll see other bizarre behaviors, like the wall not applying enough force to keep a particle inside the box! Getting what should be a simple series of elastic collisions in an essentially 2D simulation (there is a third dimension; we're just not moving anything or applying any forces in that direction) to behave fully realistically is not as simple as it may seem!

Note the order of certain computations in the code above is important. In each time step, we must first zero out the force accumulators of each particle. Then, compute the forces on particles due to collisions with other particles and/or a wall of the viewing frustum. Once these forces have been applied to each particle, then we update the velocity followed by the position of each particle. In more complex future simulations, the order of certain computations will be important to ensure a more accurate simulation.
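The ordering described above can be sketched as a single time-step function. This minimal C++ sketch (the Ball type and function names are hypothetical, and the box is assumed to be centered at the origin) handles only wall bounces, modeled as impulse forces that reverse the outward velocity component over one step. Pairwise particle collision forces would be accumulated in the same phase, before any velocity or position changes.

```cpp
#include <cassert>
#include <vector>

// Hypothetical particle record for this sketch.
struct Ball {
    double mass, radius;
    double x, y, vx, vy;
    double fx = 0.0, fy = 0.0;  // force accumulator
};

// Box walls at x = +/-half_width and y = +/-half_height. A ball touching a wall
// while moving outward gets an impulse force that reverses that velocity
// component over one step: f = delta p / dt = -2 m v / dt.
void AccumulateWallForces(Ball& b, double half_width, double half_height, double dt) {
    const bool hits_x = (b.x + b.radius > half_width && b.vx > 0.0) ||
                        (b.x - b.radius < -half_width && b.vx < 0.0);
    const bool hits_y = (b.y + b.radius > half_height && b.vy > 0.0) ||
                        (b.y - b.radius < -half_height && b.vy < 0.0);
    if (hits_x) b.fx += -2.0 * b.mass * b.vx / dt;
    if (hits_y) b.fy += -2.0 * b.mass * b.vy / dt;
}

void Step(std::vector<Ball>& balls, double half_width, double half_height, double dt) {
    assert(dt > 0.0);
    // 1. Zero every force accumulator.
    for (Ball& b : balls) { b.fx = 0.0; b.fy = 0.0; }
    // 2. Accumulate all forces before anything moves. Pairwise particle
    //    collision forces would be added in this phase as well.
    for (Ball& b : balls) AccumulateWallForces(b, half_width, half_height, dt);
    // 3. Only then update each velocity, followed by each position.
    for (Ball& b : balls) {
        b.vx += dt * b.fx / b.mass;
        b.vy += dt * b.fy / b.mass;
        b.x += dt * b.vx;
        b.y += dt * b.vy;
    }
}
```

If step 3 ran inside the force loop instead, a particle that had already moved could change the forces computed for the particles after it in the same step, which is why the phases are kept separate.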

Fluid Mechanics

All the simulations we implemented above used Newtonian mechanics, which describes the motion of theoretical particles that follow Newton's laws of motion. Newtonian mechanics also models the dynamics of real objects that approximately behave like particles, such as planets orbiting around the Sun. But how would we apply Newtonian mechanics to fluids, which don't really look like particles at all?

It turns out, fluid mechanics is not that different from Newtonian mechanics! It's just that we have to adapt Newtonian mechanics to form a mathematical model of a fluid's properties. The key idea in Newtonian mechanics is F = ma: that is, the sum of all forces acting on a particle equals the amount of matter in the particle times the particle's acceleration. The exact same idea will apply to fluids!

Fluid Particles

In fluid mechanics, we model fluid mathematically as a collection of "fluid particles," also known as fluid "parcels" or "volume elements." The term "parcel" makes sense when you see diagrams of fluid particles being drawn as boxes (rectangular prisms or cubes) rather than the spheres you often see for Newtonian particles. Like Newtonian particles, fluid particles are also considered to be infinitely small in theory, occupying just as much space (i.e., none) as a zero-dimensional point in R³. However, we also assume that a fluid particle is large enough to contain enough molecules of fluid that it has properties that would normally only make sense for a finite-sized box of fluid, such as density and pressure. This might seem strange: how can a fluid particle be infinitely small, yet contain a ton of molecules of fluid? It turns out this is just a theoretical idea, but it's been shown to be an accurate enough approximation of actual fluid motion despite its strangeness. This strangeness is called the continuum assumption: even though we know a fluid is made up of discrete molecules, we assume a fluid is actually "continuous," in that functions of space and time like density and pressure are continuous and even differentiable, which would never be possible for any matter made up of purely discrete objects.

Newton's Momentum Equation

Fluid mechanics is really just about applying Newton's second law and the law of conservation of mass to fluid particles. Now, in theory we could try to simulate a fluid as just a bunch of Newtonian particles colliding against each other as they float through space. But, this makes it hard and inefficient to really model a fluid's behavior: after all, our computers cannot take the colliding particles simulation we did above and simply extend it to an infinite number of particles; computers only have finite memory and computing resources. Instead, we add some richness to the types of forces acting on fluid particles to upgrade them from just being Newtonian particles that only feel gravitational and collision forces. As we'll soon describe, fluid particles will still feel gravity, like Newtonian particles. Fluid particles will also feel forces due to something called pressure and something else called viscosity.

A fluid particle will still be subject to Newton's second law: F = ma. The sum of forces acting on the particle will equal its mass times its acceleration. The forces acting on fluid particles fall into two categories: external body forces, like those Newtonian particles feel, and internal forces, which are unique to fluid particles. Also, since momentum is p = mv, the time-derivative of momentum for a particle of constant mass is dp/dt = d/dt[mv] = m · dv/dt = ma = F. In other words, Newton's second law says that force is the time-derivative of momentum, and thus, we can think of Newton's second law as "Newton's momentum equation." You'll see soon why I mentioned this!

As described in the SIGGRAPH course notes put together by Dr. Rook Bridson and Dr. Matthias Müller-Fischer as well as Dr. Bridson's book on fluid simulation, fluid flow is modeled mathematically by two equations: the Navier-Stokes momentum equation (which is just Newton's momentum equation or Newton's second law, applied to fluid particles) and the incompressibility condition (which results from the law of conservation of mass, as we'll soon see). Let's build up those equations in steps so we can really understand them.

Gravitational Force

The forces acting on a fluid particle are split into two categories: external and internal. The external forces are often just gravity, but can also include things like the forces resulting from you holding a container of milk and shaking it. For our simulations in this tutorial, the only external force acting on a fluid particle will be gravitational force, which is just the particle's weight: mg, where m is the particle's mass and g is acceleration due to gravity.

Pressure Gradient Force

Okay, now let's focus on the internal forces. Imagine you're standing next to a bucket of water. You dip your hand into the water with your palm wide open and flat, pushing the water with your palm. When you push on something like this, we say you're "applying pressure" to it. If instead you were to take your hand out of the water and just run your fingertip across the top of the water, swiping its surface, any slight force you feel on your skin from the water is more like a rubbing force, which we call friction, or in the case of fluids, viscosity (gooiness or stickiness) of the water. The difference between applying pressure and feeling viscosity has to do with the direction in which force is applied to the fluid. To think of another example, if you squish an air-filled balloon, you're applying pressure to it, but if you rub the surface of the balloon against something, you're applying friction. In physics, these two orthogonal directions of applying force to materials are called "normal stress" ("normal" meaning "perpendicular" to some theoretical plane tangent to a fluid surface) and "shear stress" (from rubbing or scraping parallel to a fluid surface).

To describe these internal forces from pressure and viscosity, let's first assume we are given some functions of space and time to describe the fluid. Let's assume there is some function u(x, y, z, t) representing the velocity of the fluid at (x, y, z) ∈ R³ at time t ∈ R. u is called a vector field since it assigns a vector to each point in space. Let's assume the function u is at least twice differentiable in space (i.e., we can compute at least a second derivative, if not higher-order derivatives, of u with respect to x, y, and z) and at least once differentiable with respect to time. Let's also assume we have a function p(x, y, z, t) assigning a fluid pressure to each point in space at any given time and another function ρ(x, y, z, t) assigning a fluid density to each point in space at any given time. Let's assume these functions, which are "scalar fields" since they assign a real number to each point in space at any given time, are at least once-differentiable with respect to each spatial and time variable.

The force a fluid particle feels due to pressure is caused by the infinitesimal difference in pressure around it, also known as the pressure gradient, ∇p, which is just the vector of spatial partial derivatives of pressure, (∂p/∂x, ∂p/∂y, ∂p/∂z). Evaluating each partial derivative at the particle's (x, y, z) position yields an actual 3D vector indicating the direction of most rapid increase in pressure from the particle's viewpoint. ∇p is a vector field or function, so it doesn't evaluate to a single 3D vector until you give it a specific point in space at which it shall be evaluated. As described in the SIGGRAPH course notes put together by Dr. Rook Bridson and Dr. Matthias Müller-Fischer as well as Dr. Bridson's book on fluid simulation, the force due to a pressure gradient is always directed from high pressure to low pressure, i.e., -∇p. Pressure in physics is defined as force per unit area, and the gradient of pressure has units of pressure per unit distance since each partial derivative is the limit of a pressure divided by a distance, so the pressure gradient has units of force per unit area per unit distance, which is force per unit volume. So, to get a force from the pressure gradient, we must multiply it by a volume in some way. Let's just pretend for now that we have a volume, V, for the fluid particle. Wait, isn't the volume of a particle zero? Well, yes and no, it's infinitesimal, but we'll be getting rid of this term soon, so don't worry. So, the pressure gradient force, the force a fluid particle feels due to fluid pressure differences, is represented by the vector field -V∇p. As we shall see later, the purpose of the pressure gradient force is to help the fluid conserve its mass by conserving its volume in any given region of space. The pressure gradient force models the internal forces particles place on each other by pushing directly on each other, like the normal stress we mentioned earlier. 
The pressure gradient force is kind of like the human tendency to move away from a crowded area into a more open space.
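Here is a minimal C++ sketch of the pressure gradient force, approximating each partial derivative with a central difference of spacing h; the function name and signature are hypothetical. In the usage check, a hydrostatic pressure field p = ρg(H - z) in water (ρ = 1000 kg/m³, g = 9.81 m/s², H = 2 m) pushes a parcel of volume 0.001 m³ upward with a force of about 9.81 N, exactly balancing its weight mg = ρVg.

```cpp
#include <cassert>
#include <functional>

struct Vec3 { double x, y, z; };

// Approximates the pressure gradient force -V * grad p at (x, y, z) using
// central differences with spacing h. p can be any pressure field.
Vec3 PressureGradientForce(const std::function<double(double, double, double)>& p,
                           double x, double y, double z, double volume, double h) {
    assert(h > 0.0 && volume > 0.0);
    const double dpdx = (p(x + h, y, z) - p(x - h, y, z)) / (2.0 * h);
    const double dpdy = (p(x, y + h, z) - p(x, y - h, z)) / (2.0 * h);
    const double dpdz = (p(x, y, z + h) - p(x, y, z - h)) / (2.0 * h);
    // Minus sign: the force points from high pressure toward low pressure.
    return {-volume * dpdx, -volume * dpdy, -volume * dpdz};
}
```

Because pressure grows with depth in this example, ∇p points downward and -V∇p points upward: this is buoyancy.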

Viscous Force

The other internal fluid force is the force due to viscosity, or viscous force, which is proportional to the Laplacian of the fluid velocity: the divergence of the gradient of the fluid velocity, ∇ · ∇u, often written as ∇²u. As explained in the SIGGRAPH course notes put together by Dr. Rook Bridson and Dr. Matthias Müller-Fischer as well as Dr. Bridson's book on fluid simulation, the Laplacian of the fluid velocity is a vector field that measures how far the velocity at a given fluid particle's location is from the average velocity of the fluid around it. The viscous force term is typically written as Vμ∇²u, with V again being the weird concept of particle volume and with μ being known as the coefficient of dynamic viscosity. This viscous force measures the force due to friction between fluid particles as they swim past each other, like the shear stress we mentioned earlier. I think of the viscous force term as the "keeping up with the Joneses" term, since viscosity is like the human tendency to keep up with your peers, but not go too much slower or too much faster than them.
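On a grid with spacing h, the standard five-point finite-difference Laplacian makes the "compared with the average of the neighbors" interpretation explicit: it equals 4/h² times the difference between the average of the four neighboring values and the center value. The sketch below (hypothetical names, 2D for brevity) handles one scalar field, so for a velocity field you would apply it to each component separately.

```cpp
#include <cassert>
#include <vector>

// A scalar field (e.g. one velocity component) on an nx-by-ny grid with spacing h.
struct Grid2D {
    int nx, ny;
    double h;
    std::vector<double> values;  // row-major: values[j * nx + i]
    double At(int i, int j) const { return values[j * nx + i]; }
};

// Five-point Laplacian at an interior point (i, j):
//   (sum of 4 neighbors - 4 * center) / h^2 = 4 / h^2 * (neighbor average - center)
double Laplacian(const Grid2D& g, int i, int j) {
    assert(i > 0 && i < g.nx - 1 && j > 0 && j < g.ny - 1);
    const double neighbor_average =
        0.25 * (g.At(i + 1, j) + g.At(i - 1, j) + g.At(i, j + 1) + g.At(i, j - 1));
    return 4.0 / (g.h * g.h) * (neighbor_average - g.At(i, j));
}
```

For f = x² + y², whose exact Laplacian is 4 everywhere, this returns 4 at any interior grid point; for a field that is constant across its neighbors, it returns 0, meaning no viscous force.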

Newton's Second Law for a Fluid Particle

So, a fluid particle's mass times its acceleration gives us the sum of the three forces we mentioned above: gravitational force, pressure gradient force, and viscous force! This gives us the equivalent of Newton's second law, or Newton's momentum equation, applied to fluids:

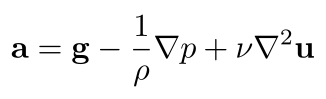

We call this the Navier-Stokes momentum equation. It says that for any fluid particle in our model, its mass times its acceleration equals its weight, plus its pressure gradient force (the minus sign is because the pressure gradient vector points from low to high pressure, while the pressure gradient force pushes particles from high to low pressure), plus its viscous force. Now, we mentioned that volume is a strange quantity to apply to fluid particles. Let's divide the whole equation by the particle's mass, which we assume is strictly positive (i.e., we're not dividing by zero!), and note that density is ρ = m/V, and thus V/m is 1/ρ:
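Written out term by term, starting from the form described in words above, ma = mg - V∇p + Vμ∇²u, and using V/m = 1/ρ, the division gives:

$$
m\,\mathbf{a} = m\,\mathbf{g} - V\,\nabla p + V\mu\,\nabla^{2}\mathbf{u}
\quad\Longrightarrow\quad
\mathbf{a} = \mathbf{g} - \frac{1}{\rho}\,\nabla p + \frac{\mu}{\rho}\,\nabla^{2}\mathbf{u}
$$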

Let's also replace μ/ρ with a new symbol, known as the coefficient of kinematic viscosity, ν (the Greek letter "nu"):

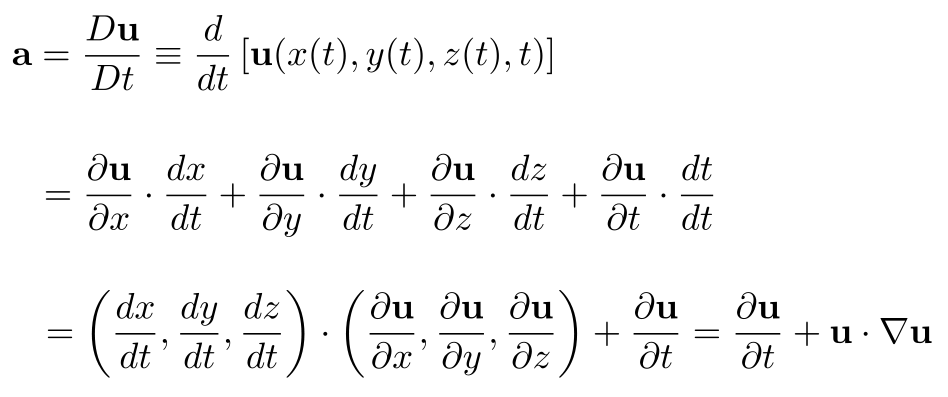

Unsteadiness & Advection

Now, it may seem like we're done, but to actually compute fluid flow, we need fluid velocity, not acceleration. Well, that's easy, isn't acceleration just the time-derivative of velocity? Sure, but there's an important detail relating a fluid particle's acceleration to its velocity that we need to handle: the concept of advection. In a nutshell, advection just means "moving" and carrying some quantity with you as you move. The term actually has a more specific meaning in fluid dynamics and vector calculus. Dr. Bridson and Dr. Müller-Fischer's course notes and Dr. Matthew Juniper's fluid dynamics notes both explain this really well. But here's a brief description.

The temperature at any point in Earth's atmosphere can change for many reasons. On a sunny day, solar radiation from the Sun can get absorbed by the ground and then transferred to the air above it, which then increases the temperature of air at any point in that area. But then, a cold front could plow through, cooling the air at any point in that area. These are two very different mechanisms for changing the temperature at any given point in the atmosphere. The first is an external heat source causing a general increase in the temperature of all air parcels in some area, even if none of the air is moving. The second is the air itself, carrying cooler temperatures with it and pushing the warmer air out of the way. This second mechanism is called advection: it's when cooler air displaces warmer air, changing the temperature at a fixed location in space simply by air moving around. The first mechanism is called unsteadiness--a general change in the temperature field that occurs even if no air ever moved anywhere. Unsteadiness is your shirt changing color due to the lighting around you changing, while you sit perfectly still. Advection is you wearing a bright red shirt and changing the spatial distribution of redness in the world by running around with that bright attire. Unsteadiness is change of a quantity (like temperature or color) at a fixed point in space without motion; advection is change of that quantity at a fixed point in space solely due to motion.

Well, any field can technically be advected, including the fluid velocity itself! You can imagine that if the water in a lake was sitting perfectly still, a boat's propeller could start spinning and push the water to change its velocity at any specific point around the propeller. This is "unsteadiness" since the fluid velocity at a specific point changed due to some external velocity-changing mechanism (the propeller in this case). On the other hand, if some fluid particles already were moving faster than others, then at a specific point in space, the overall flow of the water would carry faster particles into some areas and slower particles into other areas. This is advection: a change in the water velocity at a given point in space due simply to the fluid's own motion carrying fluid particles of various speeds around, and not because some external agent was accelerating the fluid at that point.
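A tiny 1D illustration of advection, using the semi-Lagrangian technique described in Bridson and Müller-Fischer's course notes as a swapped-in example (this tutorial's PIC/FLIP solver will advect quantities with particles instead): each grid point takes the value found where its air was Δt ago. The function name and parameters are hypothetical. No heating or cooling term is applied, so any change at a fixed grid point is due purely to motion.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Temperature samples T[i] at positions x_i = i * h, carried by a constant
// wind speed c. Semi-Lagrangian step: the new value at x_i is the old value
// at the departure point x_i - c * dt, linearly interpolated and clamped to
// the ends of the grid.
std::vector<double> AdvectSemiLagrangian(const std::vector<double>& T,
                                         double h, double c, double dt) {
    assert(T.size() >= 2 && h > 0.0);
    const int n = static_cast<int>(T.size());
    std::vector<double> result(T.size());
    for (int i = 0; i < n; ++i) {
        // Departure point measured in grid cells, clamped to [0, n - 1].
        const double s = std::clamp((i * h - c * dt) / h, 0.0, n - 1.0);
        const int i0 = std::min(static_cast<int>(s), n - 2);  // s >= 0, so this floors
        const double w = s - i0;                              // weight in [0, 1]
        result[i] = (1.0 - w) * T[i0] + w * T[i0 + 1];
    }
    return result;
}
```

With T = {0, 0, 10, 0, 0}, h = 1, and a wind moving one cell per step (c = 1, Δt = 1), the warm spot moves from grid point 2 to grid point 3, so the temperature at the fixed point 2 drops from 10 to 0 without any heating or cooling. With c = 0, nothing changes.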

Mathematically, we can start by just saying, acceleration is the time-derivative of the velocity of the fluid. Since fluid velocity is a function of x, y, z, and t, and since the first three of these variables are also functions of time (i.e., the coordinates of a moving fluid particle), we apply the chain rule to get that the overall derivative of fluid velocity, often referred to as the total or material derivative, Du/Dt, is:
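Writing the chain rule out explicitly, where u = (u, v, w) and the particle's coordinates change at the fluid velocity (dx/dt = u, dy/dt = v, dz/dt = w):

$$
\frac{D\mathbf{u}}{Dt}
= \frac{\partial \mathbf{u}}{\partial t}
+ \frac{\partial \mathbf{u}}{\partial x}\frac{dx}{dt}
+ \frac{\partial \mathbf{u}}{\partial y}\frac{dy}{dt}
+ \frac{\partial \mathbf{u}}{\partial z}\frac{dz}{dt}
= \frac{\partial \mathbf{u}}{\partial t}
+ \underbrace{u\,\frac{\partial \mathbf{u}}{\partial x}
+ v\,\frac{\partial \mathbf{u}}{\partial y}
+ w\,\frac{\partial \mathbf{u}}{\partial z}}_{(\mathbf{u}\cdot\nabla)\mathbf{u}}
$$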

These terms are precisely what we talked about above: the first term, ∂u/∂t, is the unsteadiness term, describing local changes to the fluid velocity at a fixed point independent of the fluid's motion, and the second term, (u · ∇)u, is the advection term, describing the change in the fluid velocity at a given point due solely to the fluid's motion, i.e., to the fluid velocity, which is the instantaneous rate of change of the fluid particle's position (x, y, z).

Final Form of the Navier-Stokes Momentum Equation

Replacing a in our most recent version of the Navier-Stokes momentum equation with this unsteadiness term plus advection term gives us the final form of the Navier-Stokes momentum equation we'll use for simulation:

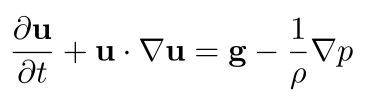

In computer graphics, it's common to assume zero viscosity, i.e., to remove the viscous force term from the Navier-Stokes momentum equation. That gives us the inviscid approximation to the Navier-Stokes momentum equation, called the Euler momentum equation.

In this tutorial, we'll stick with this inviscid approximation of the Navier-Stokes momentum equation.

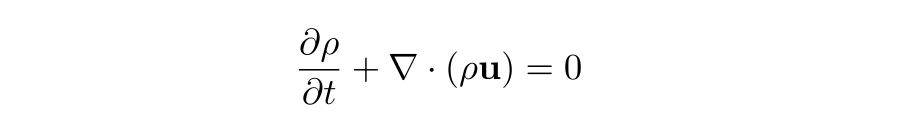

Mass Conservation & Incompressibility

To derive an equation called the equation of mass continuity, Dr. Matthew Juniper's fluid dynamics notes approximate the mass flux, ρu, across the faces of a small box-shaped parcel of fluid by the first-order terms of its Taylor series, and assume that fluid mass is conserved within any arbitrary volume of fluid (an application of the law of conservation of mass we stated earlier as a fundamental assumption of physics). Alternatively, the same equation can be derived using the Divergence Theorem:

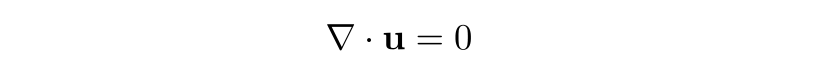

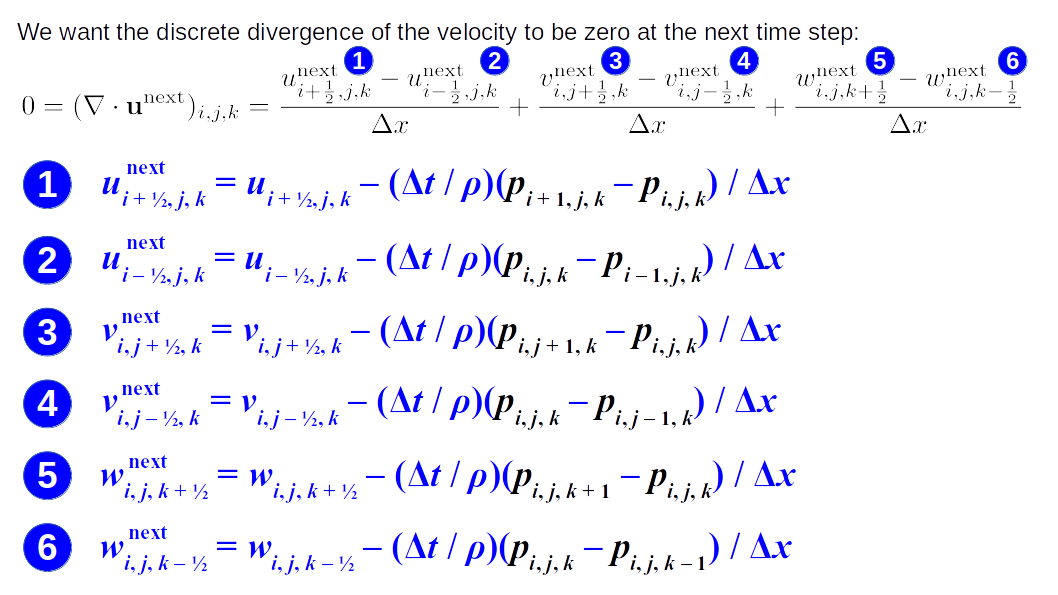

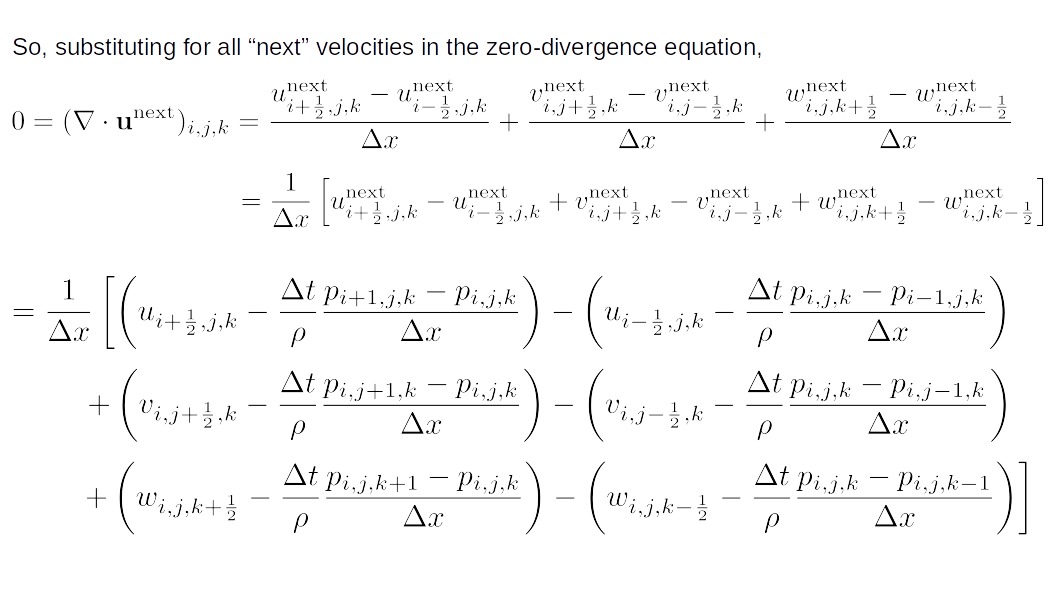

Following the derivation here, and assuming that the density of each fluid parcel stays constant as the parcel flows with the fluid, i.e., Dρ/Dt = 0, we obtain the incompressibility condition:
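One way to see this step in symbols, assuming the mass continuity equation in its standard form ∂ρ/∂t + ∇ · (ρu) = 0: expand the divergence with the product rule, recognize the material derivative of density, and use Dρ/Dt = 0 with ρ > 0.

$$
0 = \frac{\partial \rho}{\partial t} + \nabla\cdot(\rho\,\mathbf{u})
  = \underbrace{\frac{\partial \rho}{\partial t} + \mathbf{u}\cdot\nabla\rho}_{D\rho/Dt \,=\, 0} + \rho\,\nabla\cdot\mathbf{u}
\quad\Longrightarrow\quad
\nabla\cdot\mathbf{u} = 0
$$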

The Navier-Stokes momentum equation taken together with the incompressibility condition (which itself is just the law of mass conservation with constant material density) are often simply called the Navier-Stokes equations. In our case, since we're neglecting viscosity, the Euler momentum equation (the inviscid Navier-Stokes momentum equation) together with the incompressibility condition are called the Euler equations.

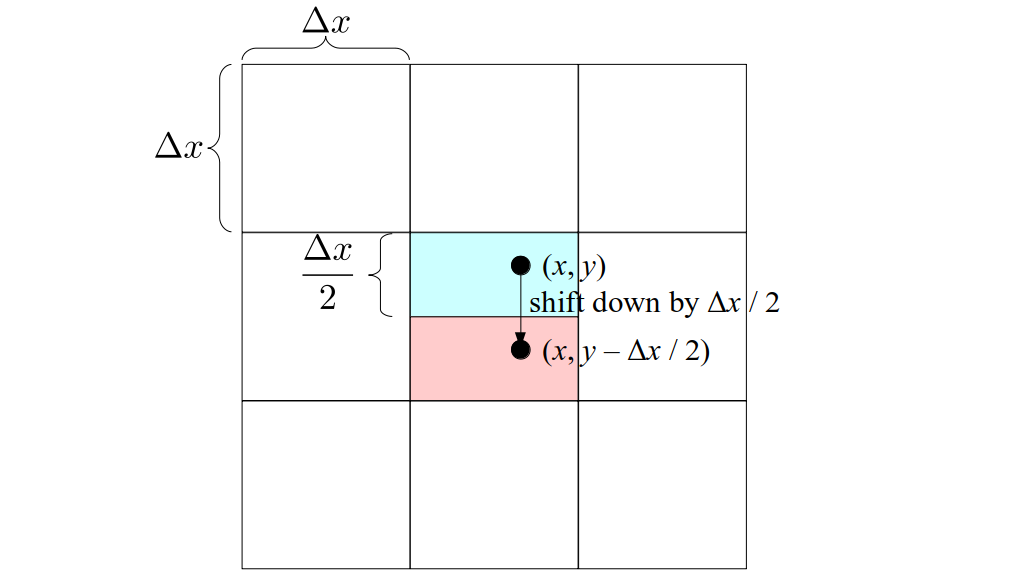

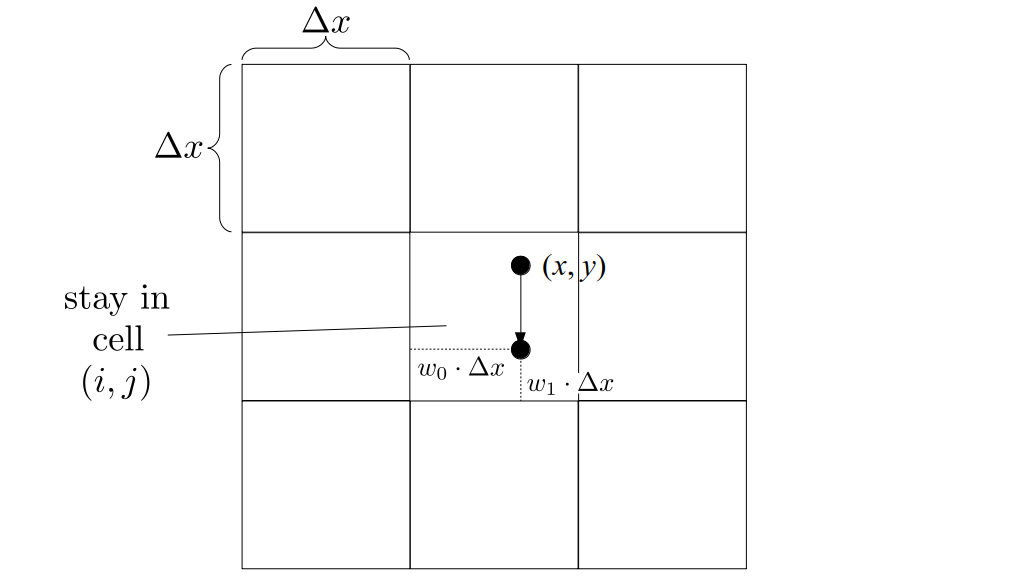
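Written out, the step from the continuity equation to the incompressibility condition uses the product rule, ∇ · (ρu) = u · ∇ρ + ρ∇ · u, and the definition of the material derivative, Dρ/Dt = ∂ρ/∂t + u · ∇ρ. Here is that standard derivation (the notation may differ slightly from the images above):

$$
\begin{aligned}
0 &= \frac{\partial \rho}{\partial t} + \nabla \cdot (\rho \mathbf{u}) \\
  &= \frac{\partial \rho}{\partial t} + \mathbf{u} \cdot \nabla \rho + \rho \, \nabla \cdot \mathbf{u} \\
  &= \frac{D\rho}{Dt} + \rho \, \nabla \cdot \mathbf{u}, \\
\frac{D\rho}{Dt} = 0,\ \rho > 0 \quad &\Longrightarrow \quad \nabla \cdot \mathbf{u} = 0.
\end{aligned}
$$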

Splitting

As described in Bridson and Müller-Fischer's SIGGRAPH 2007 course notes and Robert Bridson's book on fluid simulation, we will use this equation to simulate a fluid by splitting it up into parts and handling each part separately. Overall, our strategy will be to start with an initial fluid velocity field, u(x, y, z, 0), that has zero divergence. We'll then keep computing new velocity fields at successive time steps, u(x, y, z, Δt), u(x, y, z, 2Δt), u(x, y, z, 3Δt), etc. Our goal is to keep each of these velocity fields as close to divergence-free as possible.

To do this, we will follow a procedure similar to that in Section 2.2 of Bridson and Müller-Fischer's SIGGRAPH 2007 course notes, with a few changes, such as keeping a constant time step size, Δt. Here is our procedure:

- Initialize the time t = 0.

- Start with a zero-divergence velocity field u(x, y, z, t).

- For the duration of the simulation:

  - Set u = Advect(u(x, y, z, t), Δt).

  - Set u += Δt g.

  - Set u = ProjectPressure(u, Δt) such that u has approximately zero divergence.

  - Set t += Δt.

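These steps integrate the inviscid Euler momentum equation one term at a time: the advection step accounts for the advection term, the gravity step for the gravity term, and the pressure projection step for the pressure gradient term. Each step's change to u over the time step Δt contributes to the unsteadiness term, ∂u/∂t, i.e., the partial derivative of the fluid velocity with respect to time.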
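The procedure above can be sketched in C++ as a loop that applies each split step in turn. This is a minimal sketch, not the tutorial's implementation: the names Vec3, VelocityStep, and SimulateSplitting are hypothetical, the velocity field is represented as a flat list of sampled velocities, and the Advect and ProjectPressure steps are passed in as callbacks because we haven't implemented them yet.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical minimal 3D vector type for this sketch.
struct Vec3 {
  double x, y, z;
};

// Hypothetical signature for a split step that updates a sampled
// velocity field in place, given the time step size dt.
using VelocityStep = std::function<void(std::vector<Vec3>&, double)>;

// Advances the velocity samples u by numSteps constant time steps of size dt.
// The caller is responsible for starting with a zero-divergence field.
// Returns the final simulation time.
double SimulateSplitting(std::vector<Vec3>& u, double dt, std::size_t numSteps,
                         const Vec3& g, const VelocityStep& advect,
                         const VelocityStep& projectPressure) {
  double t = 0.0;  // Initialize the time t = 0.
  for (std::size_t step = 0; step < numSteps; ++step) {
    advect(u, dt);  // u = Advect(u, dt)
    for (Vec3& ui : u) {  // u += dt * g
      ui.x += dt * g.x;
      ui.y += dt * g.y;
      ui.z += dt * g.z;
    }
    projectPressure(u, dt);  // make u approximately divergence-free
    t += dt;
  }
  return t;
}
```

Passing the steps in as callbacks keeps the splitting structure visible on its own; later, the placeholders would be replaced by real advection and pressure projection routines.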

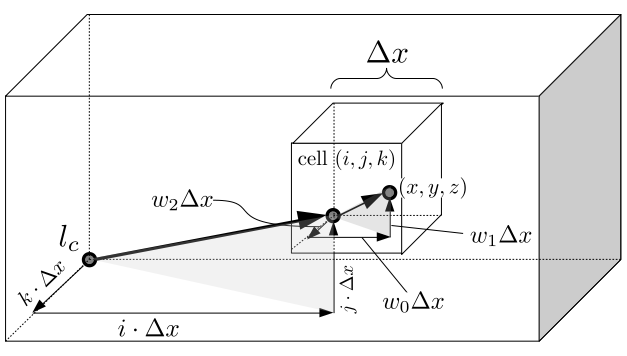

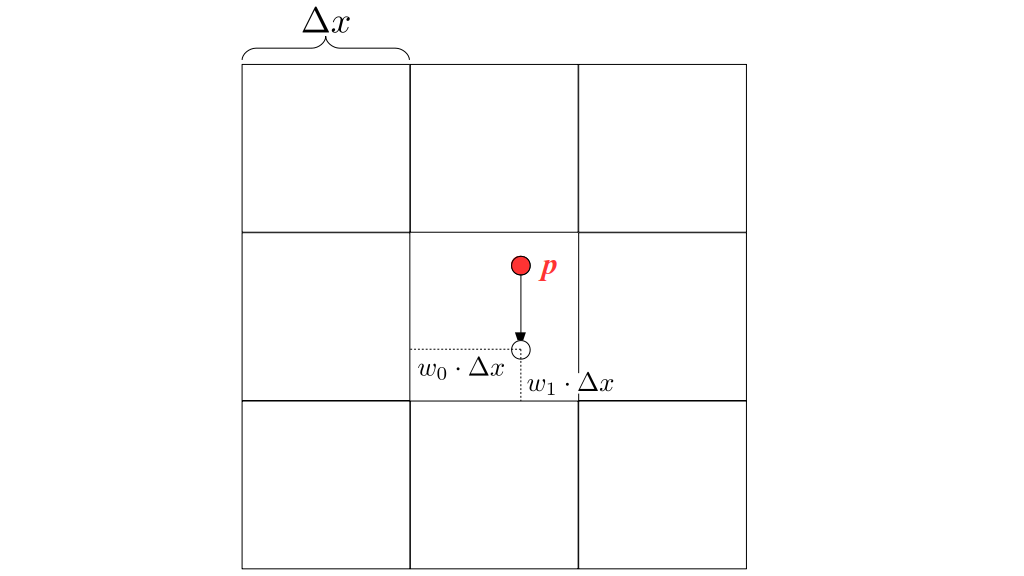

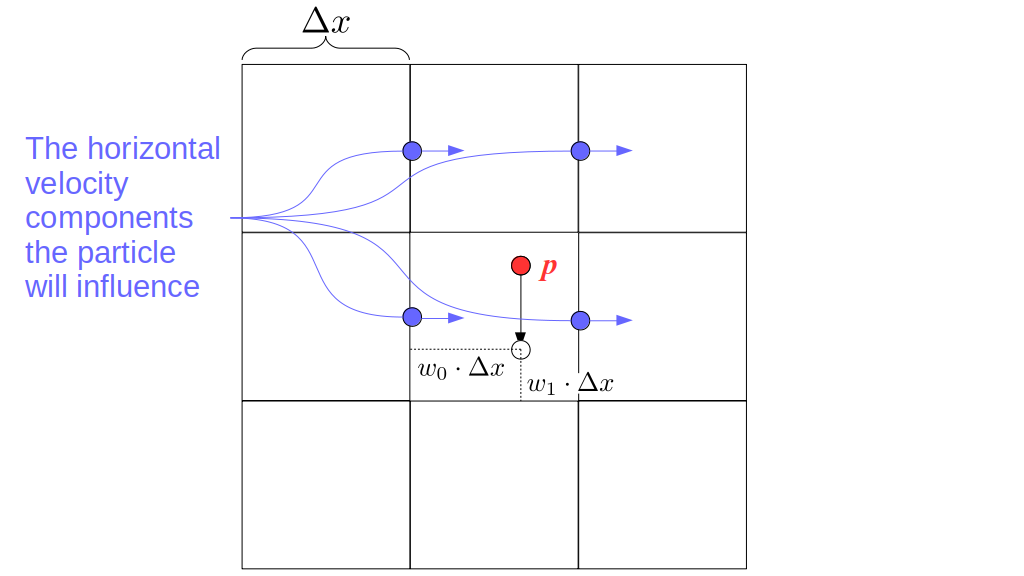

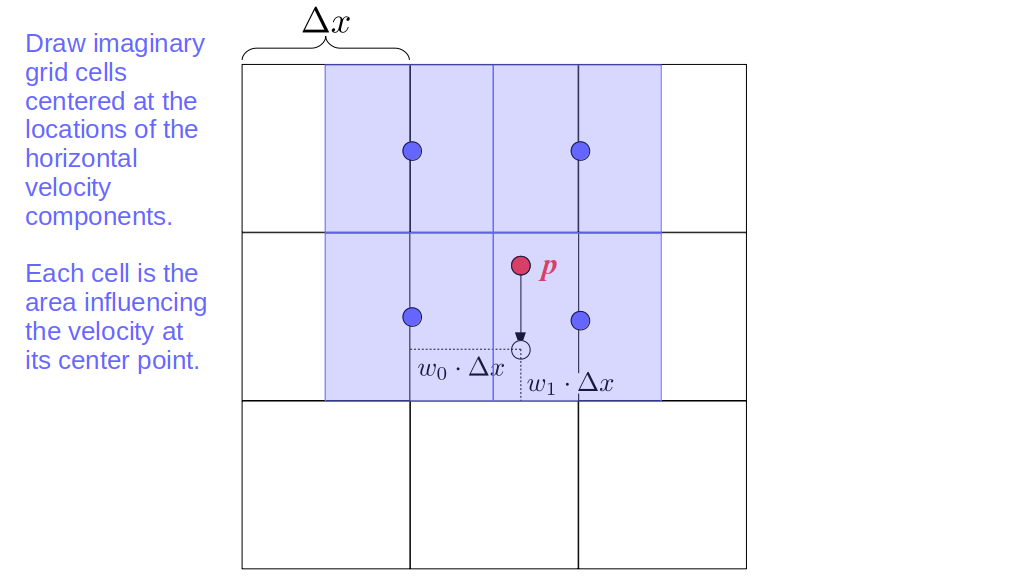

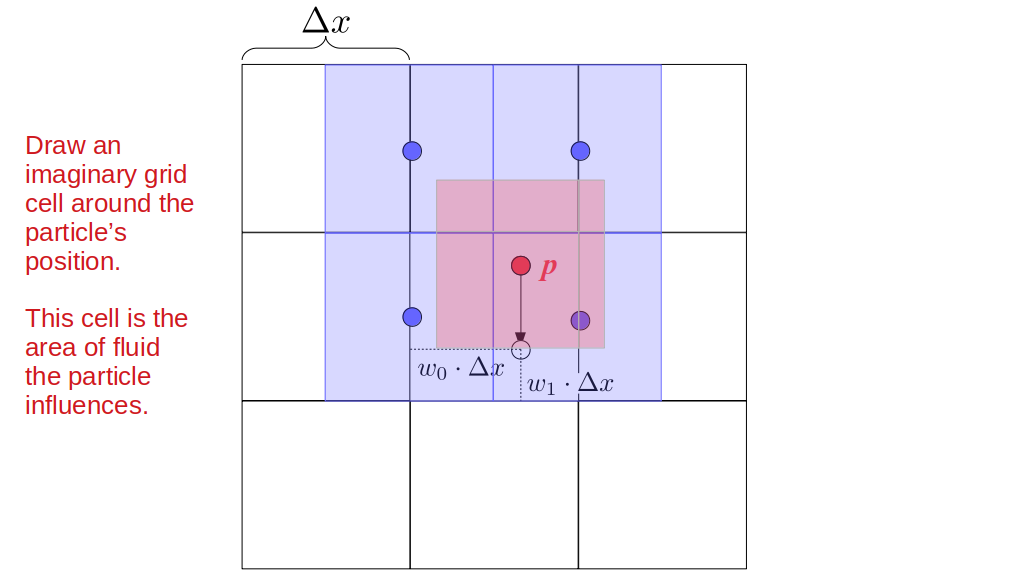

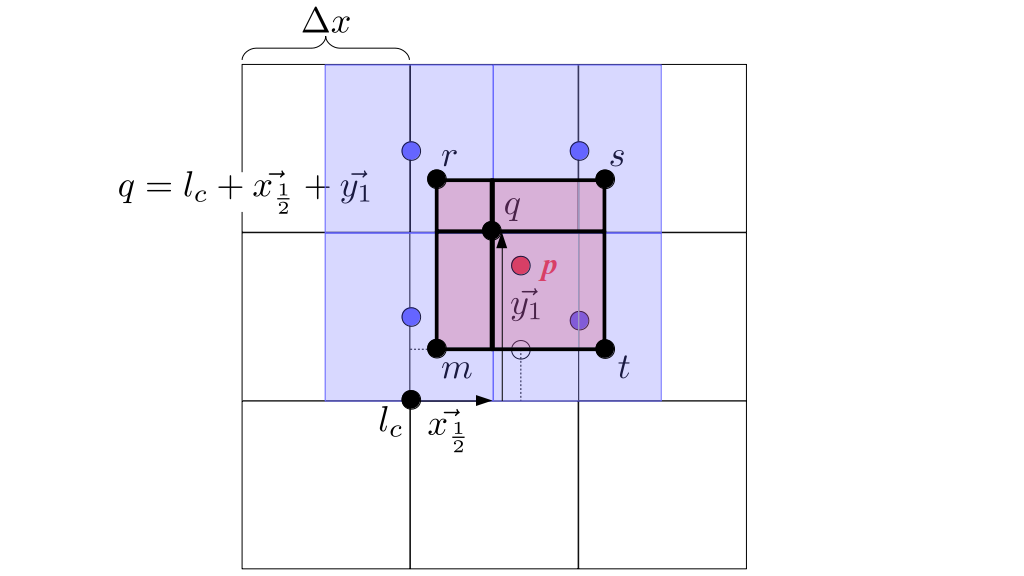

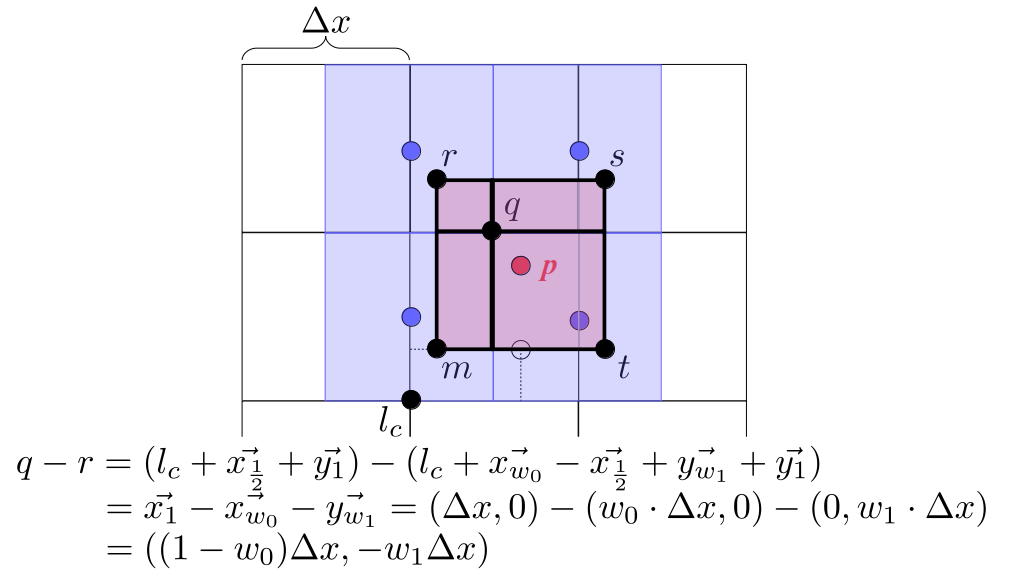

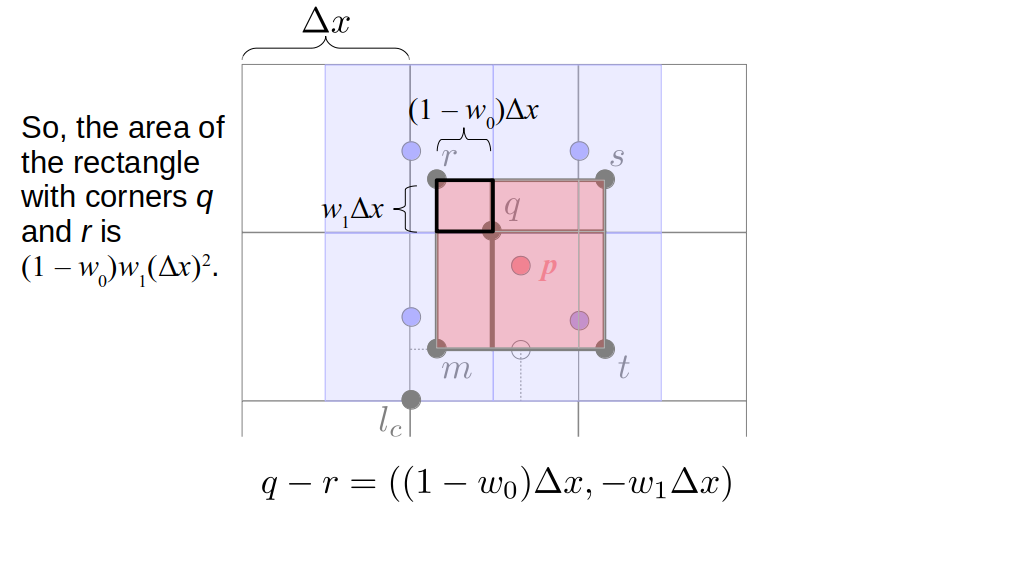

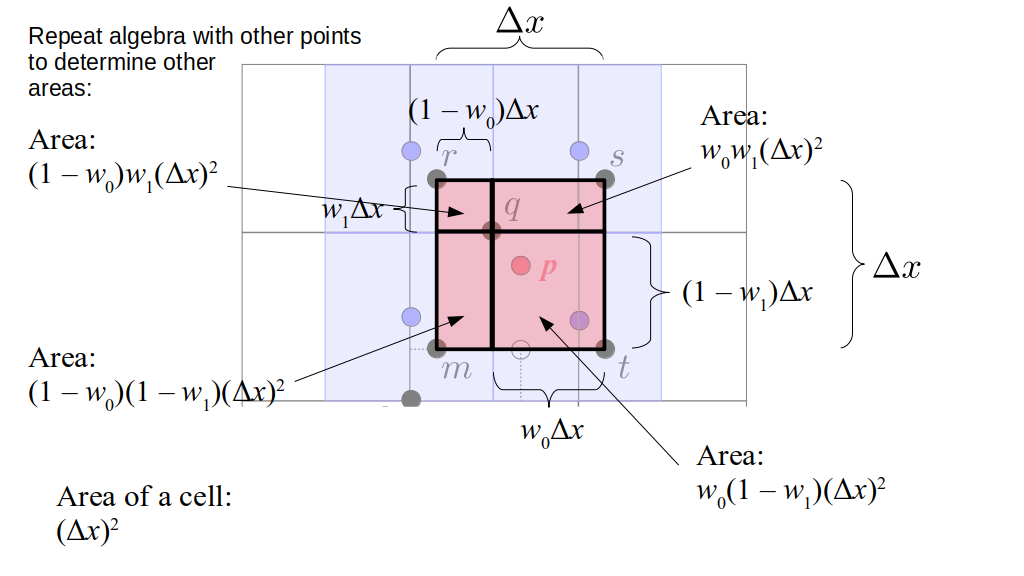

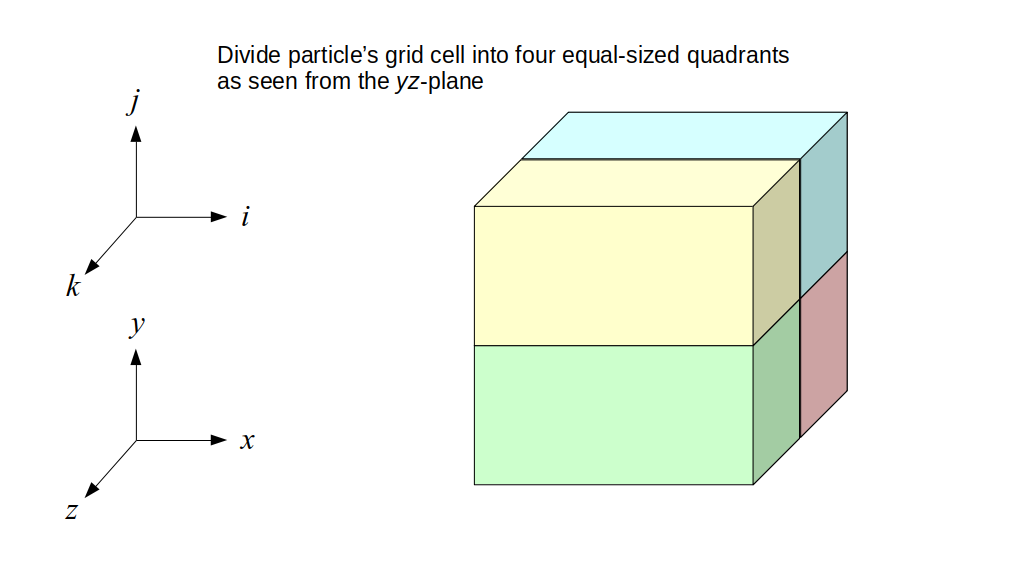

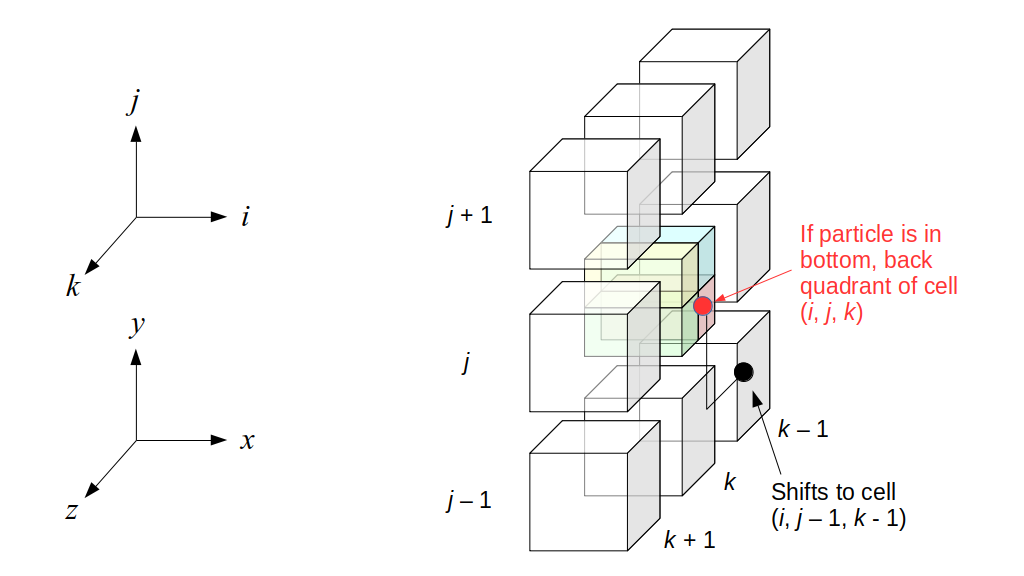

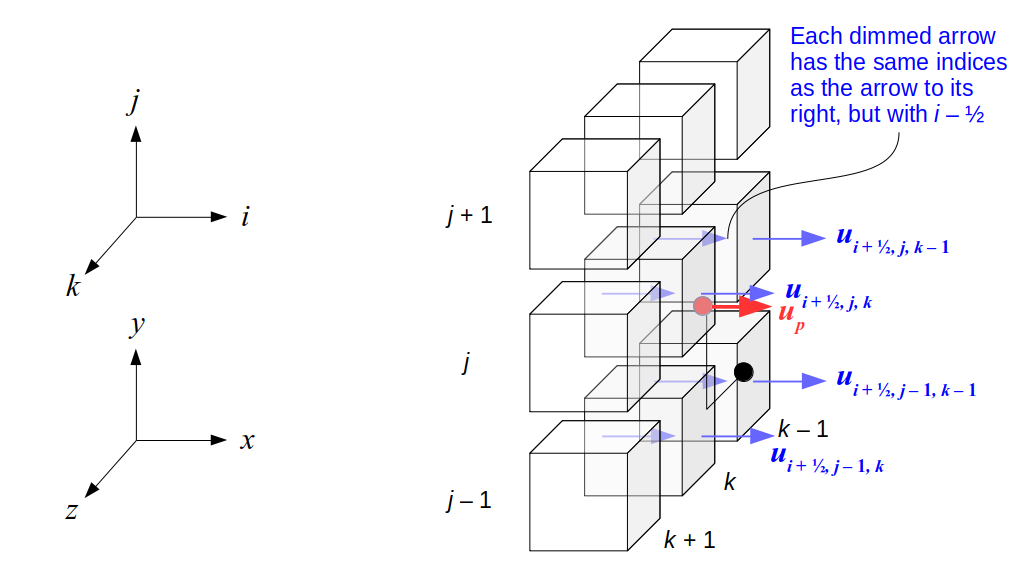

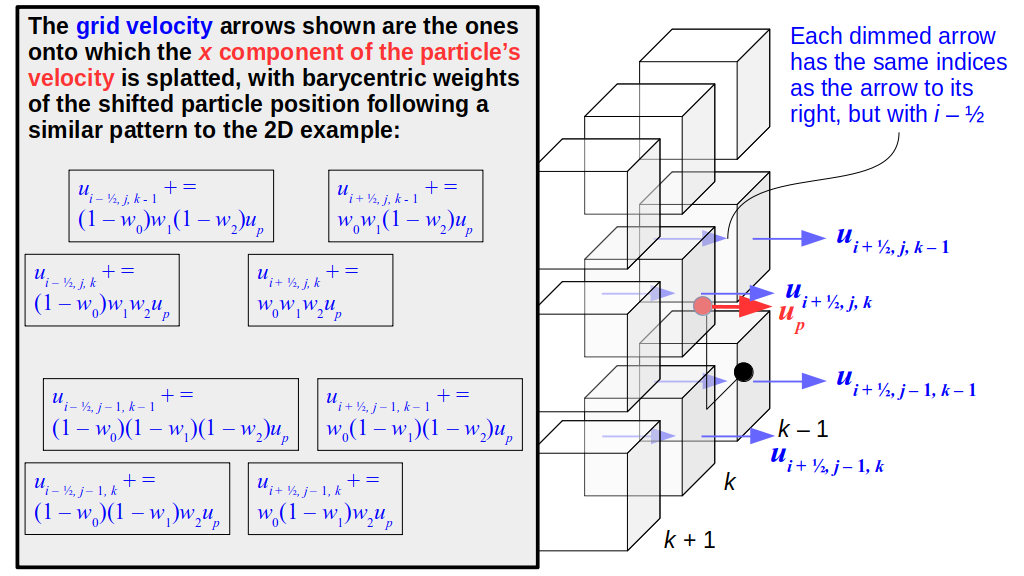

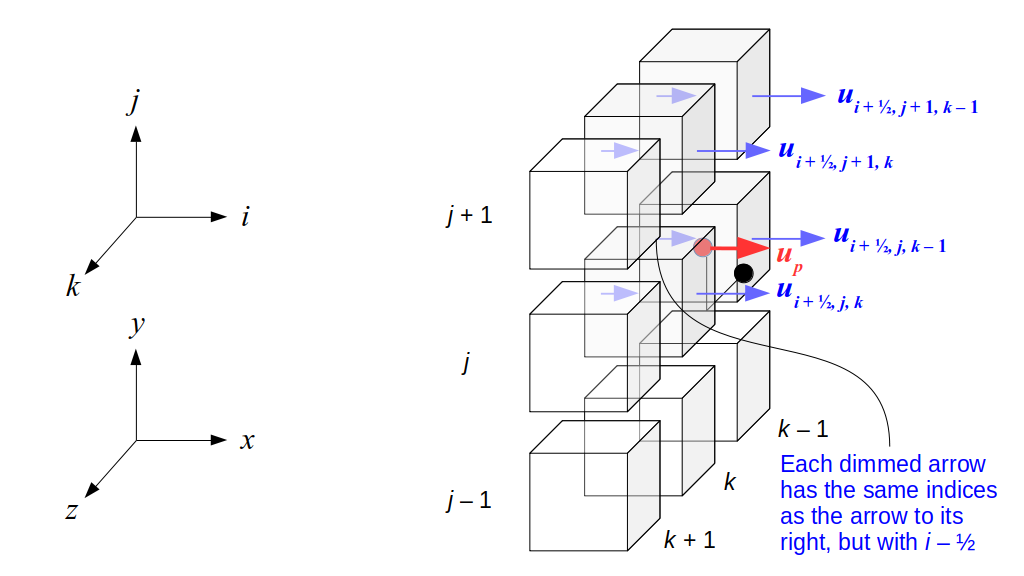

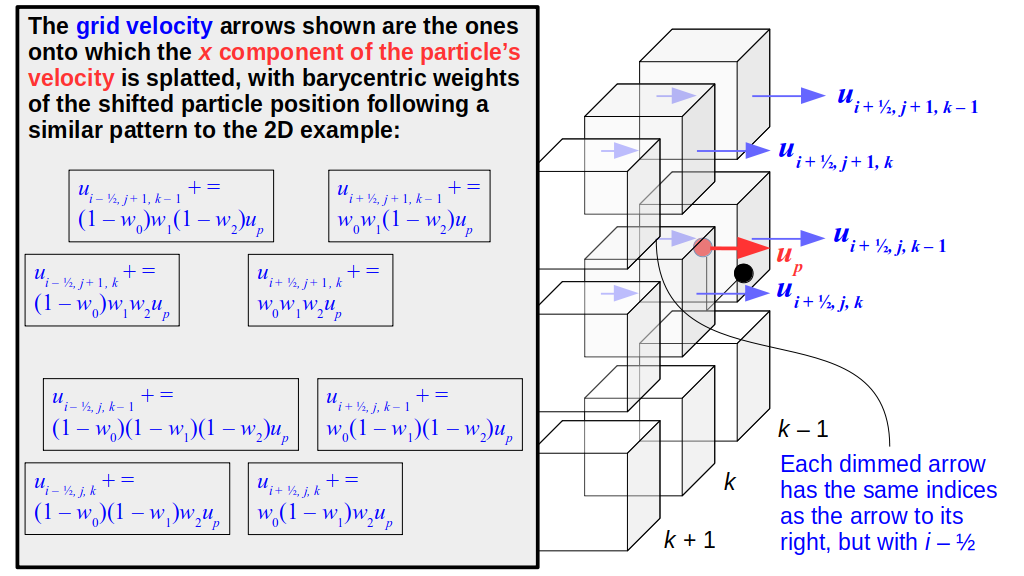

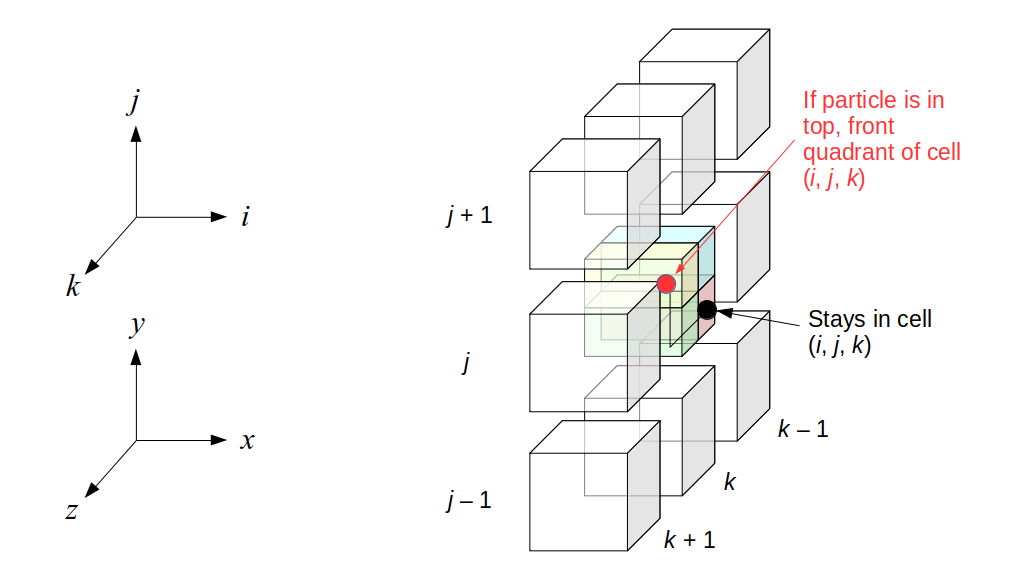

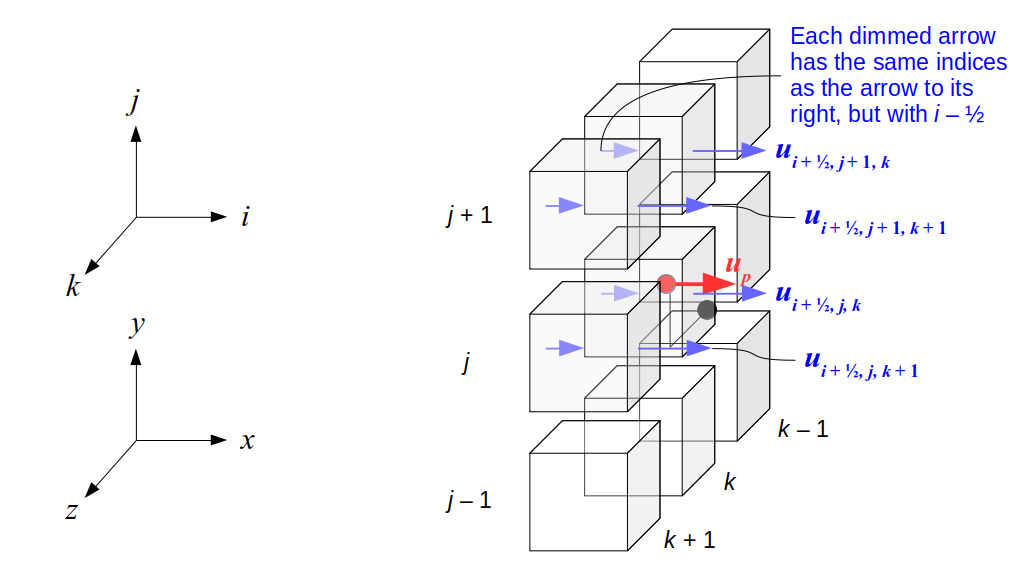

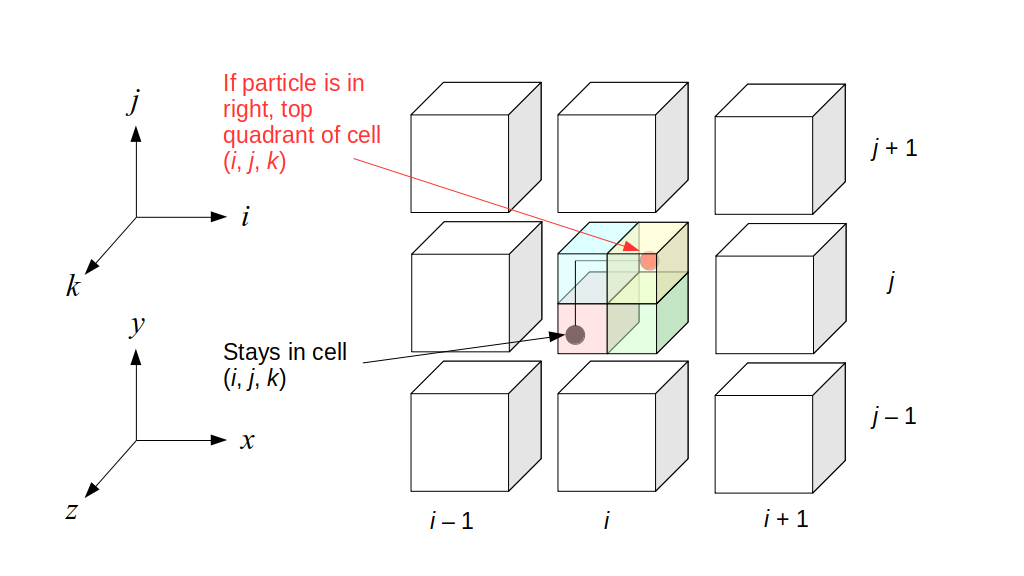

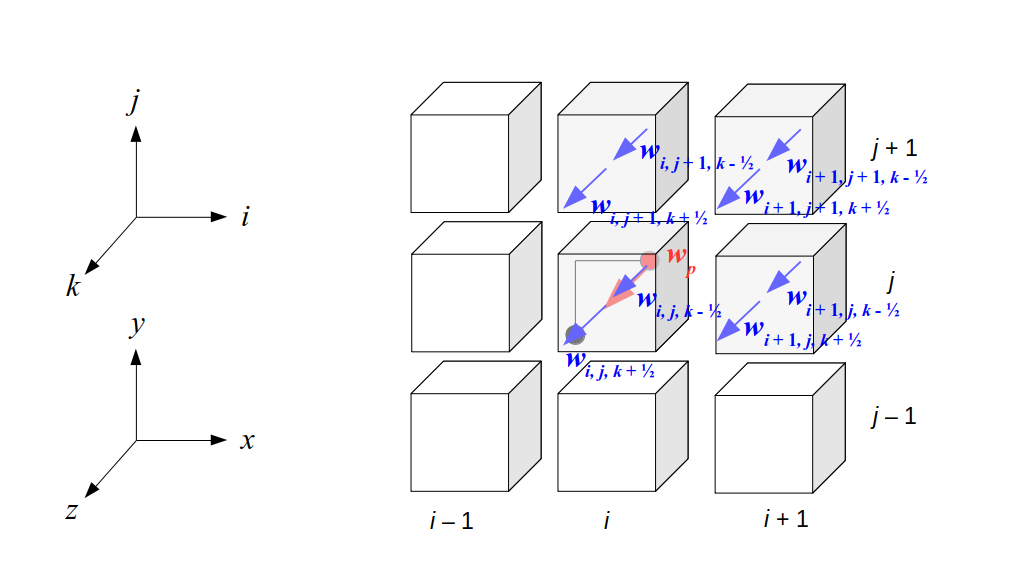

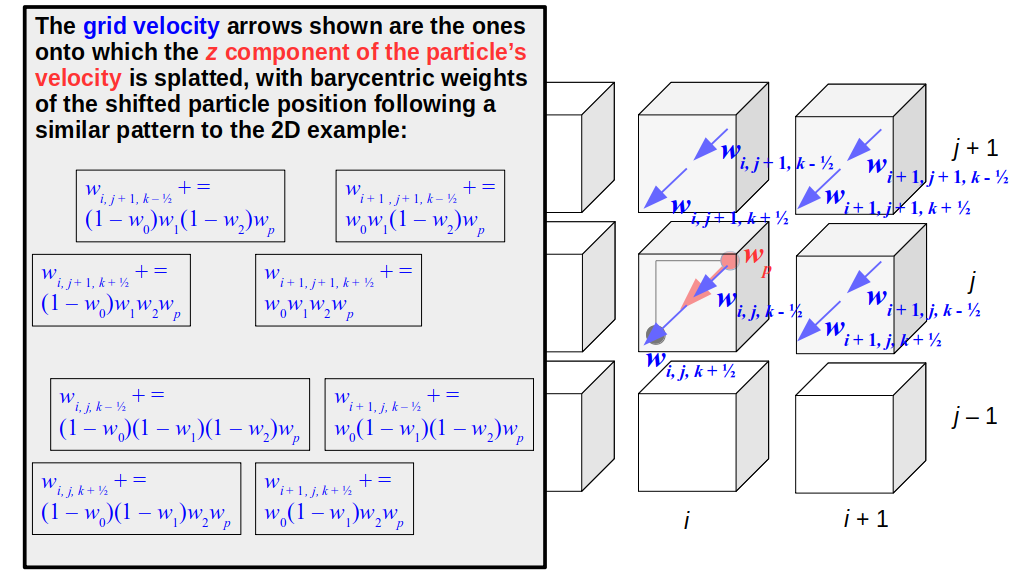

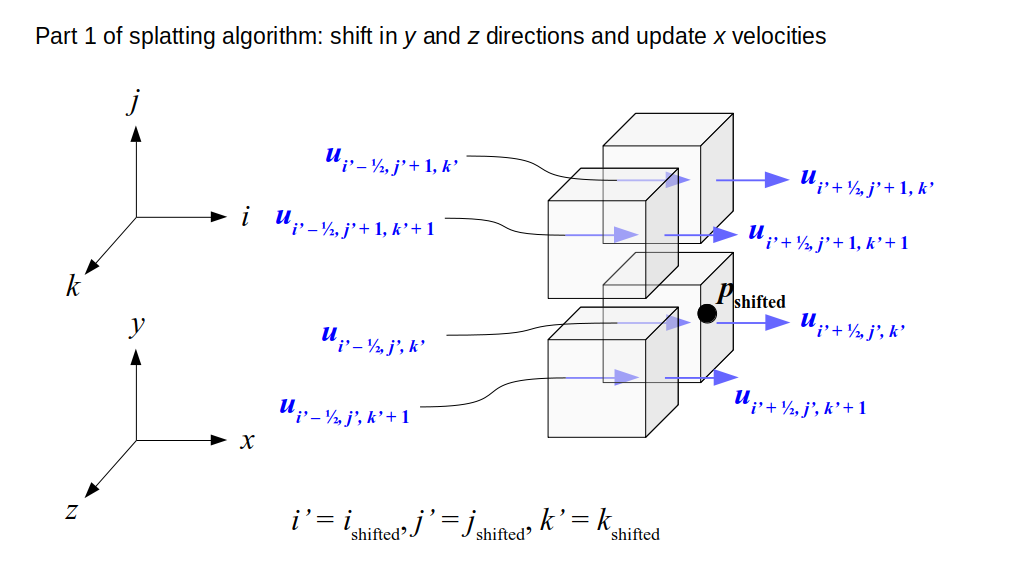

The next several sections will build up a complete implementation of a fluid simulator. First, we'll describe a data structure that stores the scalar and vector fields like pressure, density, and velocity in a digital form as a 3D staggered grid of data values covering a region of space containing our fluid(s) of interest. Then we'll explain how we transfer data from this grid to individual particles that carry position and velocity information with them. We'll then apply the steps above to contribute the effects of advection, gravity, and pressure to the fluid velocity values stored by our particles. We'll then show how we map particle data back to the staggered grid. This process will be repeated in every time step until we reach the end time of our simulation.

The Staggered Grid

Many fluid simulations, including ours, use a data structure called the staggered grid or the marker-and-cell (MAC) grid. The staggered grid samples each of the following four values at different points: fluid pressure and the x, y, and z components of fluid velocity. This will help us calculate derivatives of pressure and velocity for physics-based simulations of fluids. See Robert Bridson's excellent book on fluid simulation for details on the staggered grid data structure. An explanation of the staggered or MAC grid is in Section 2.4 of Bridson and Müller-Fischer's SIGGRAPH 2007 course notes.

Staggered Grid Structure

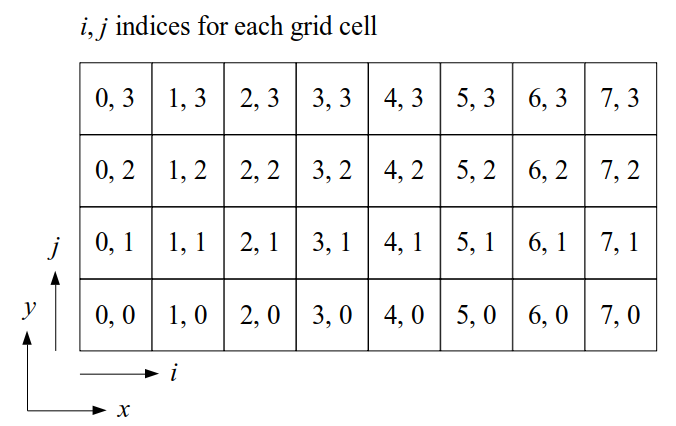

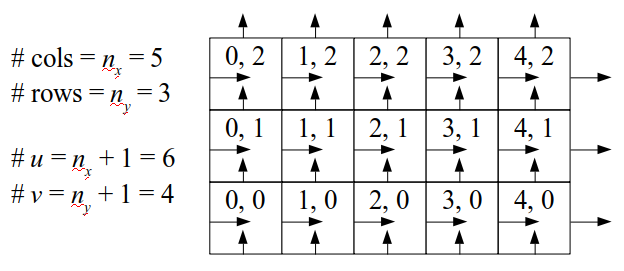

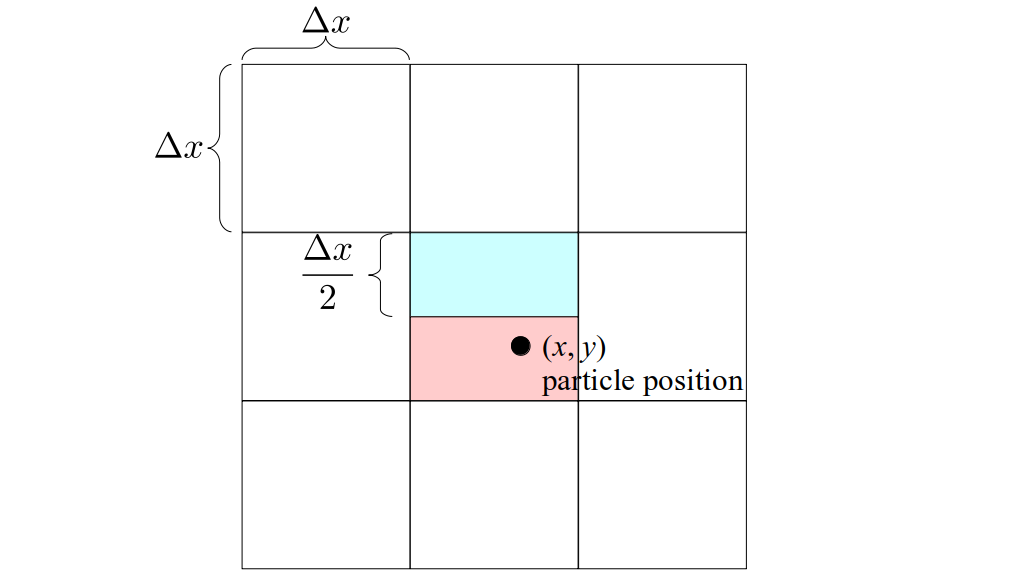

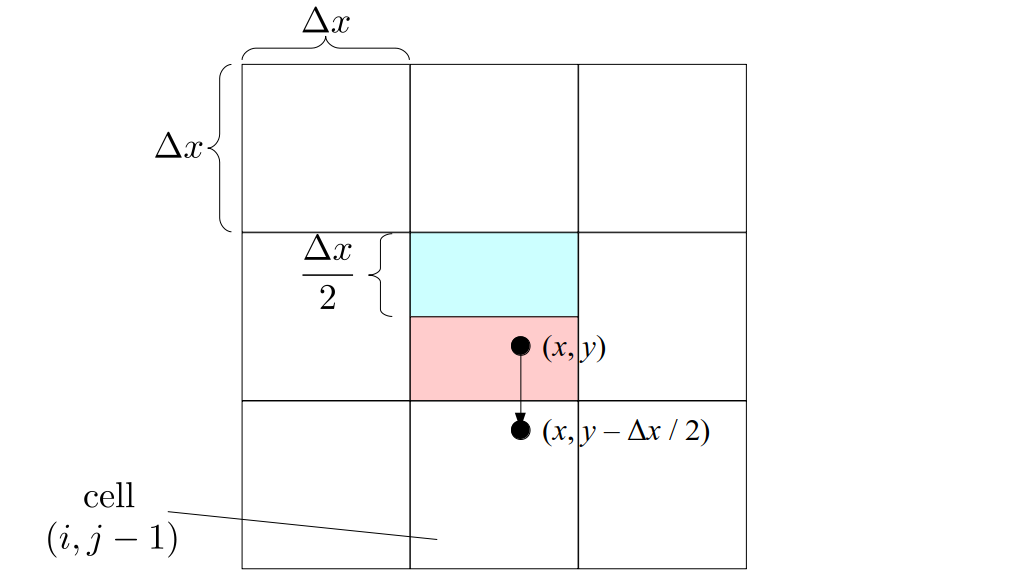

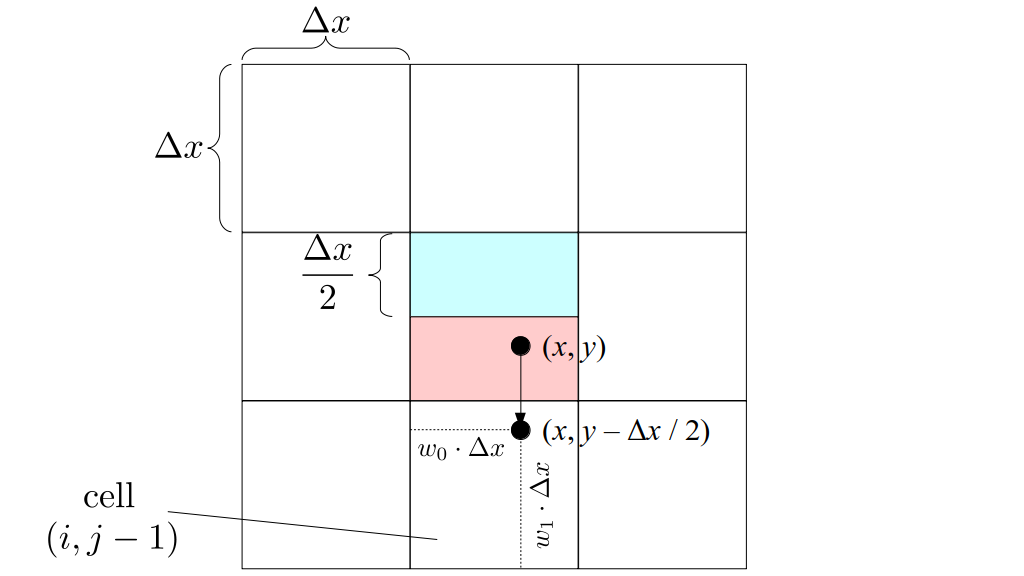

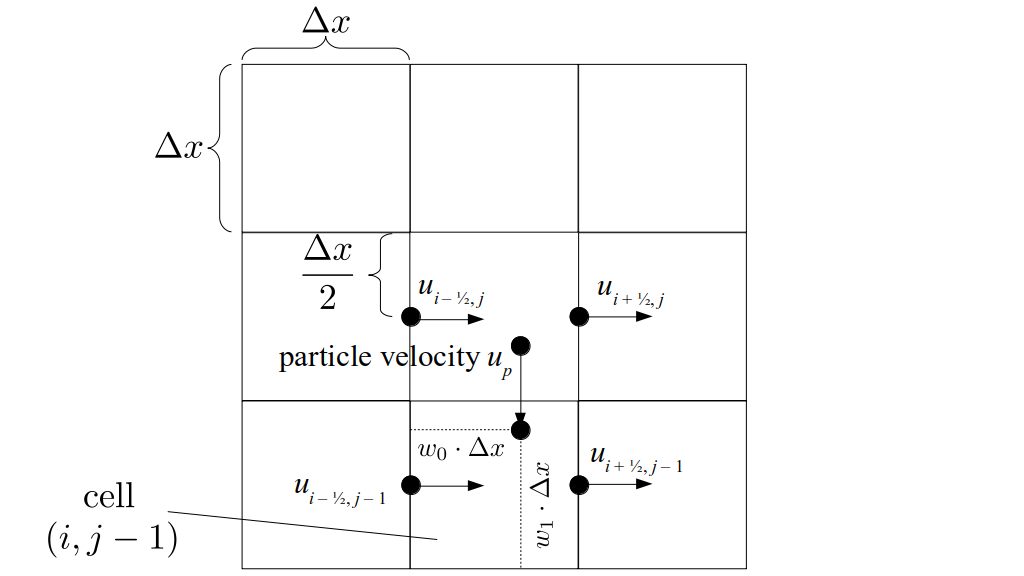

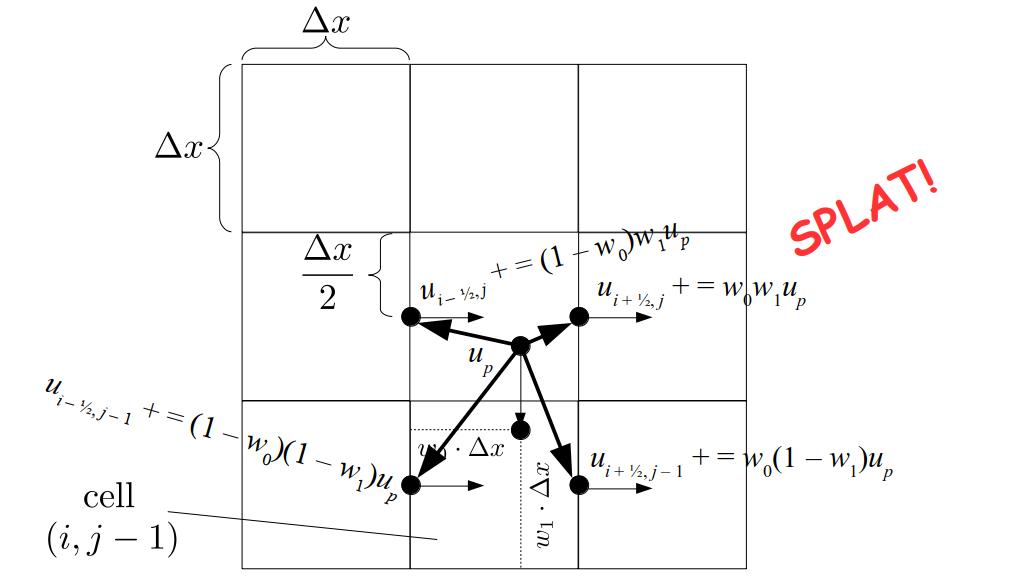

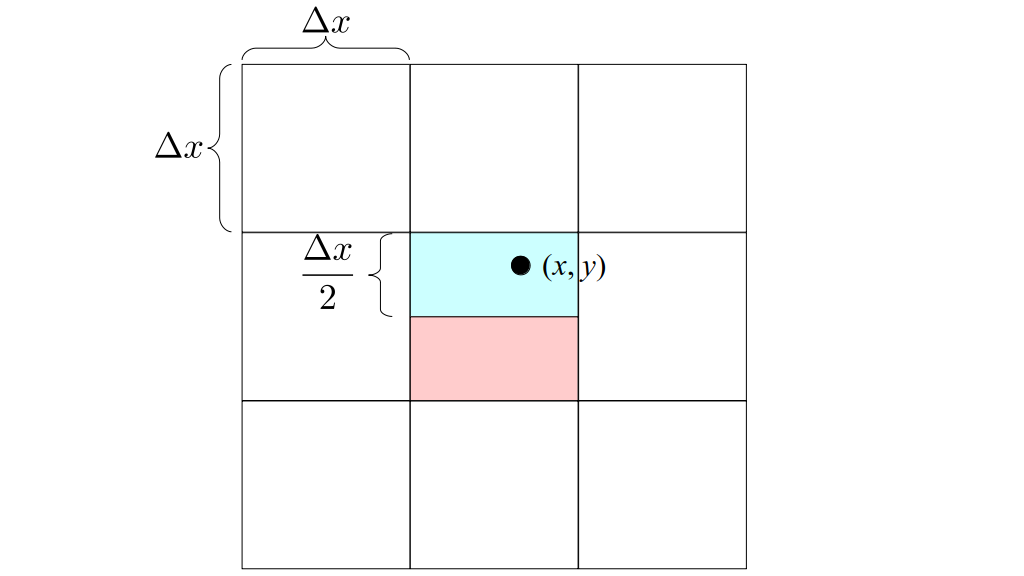

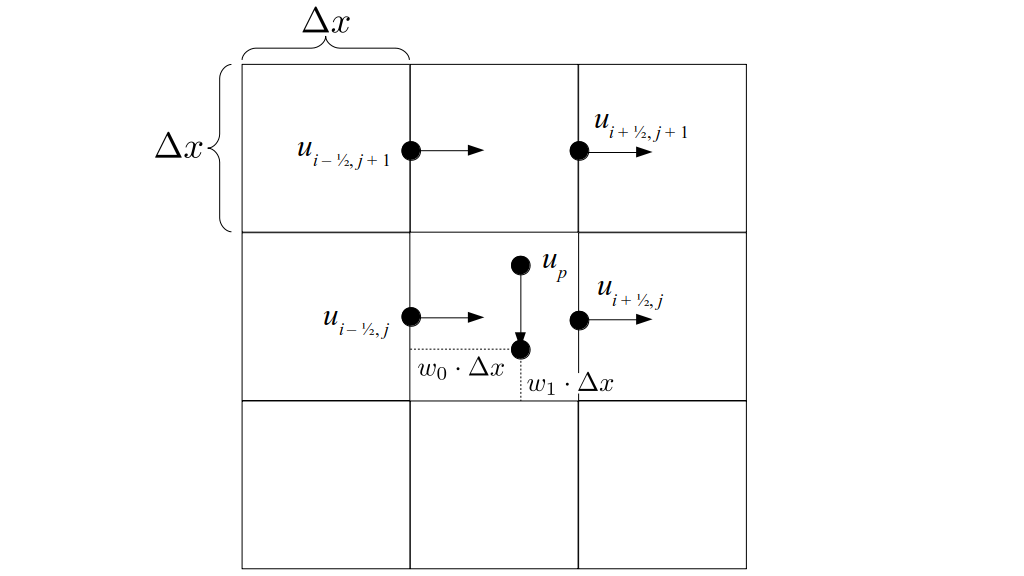

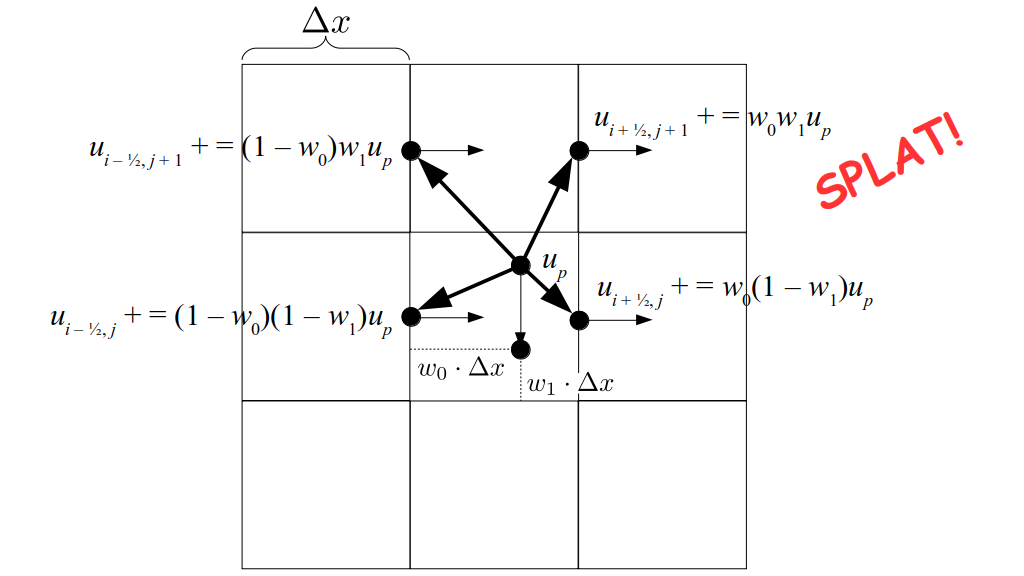

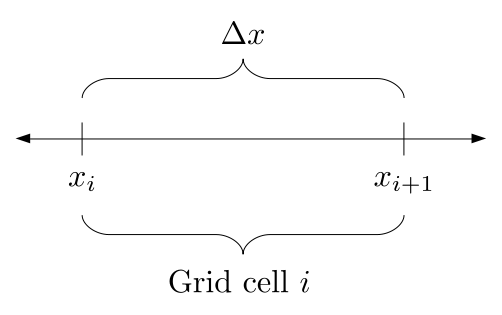

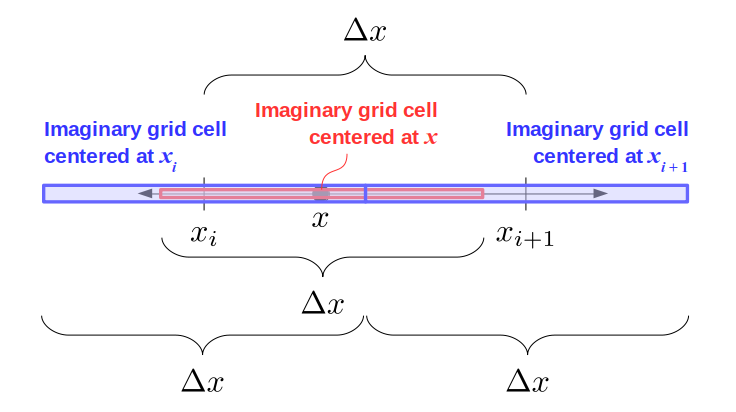

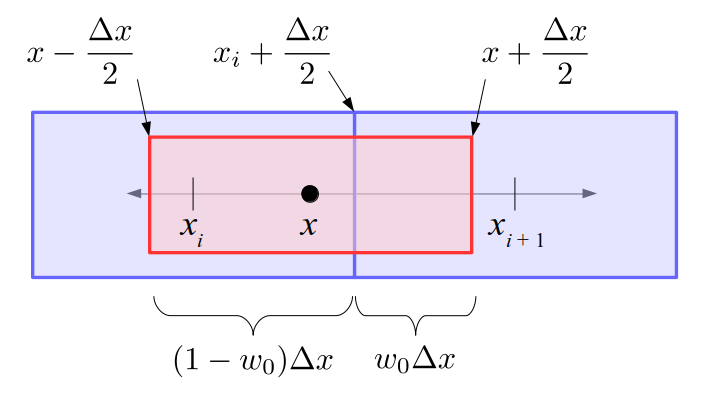

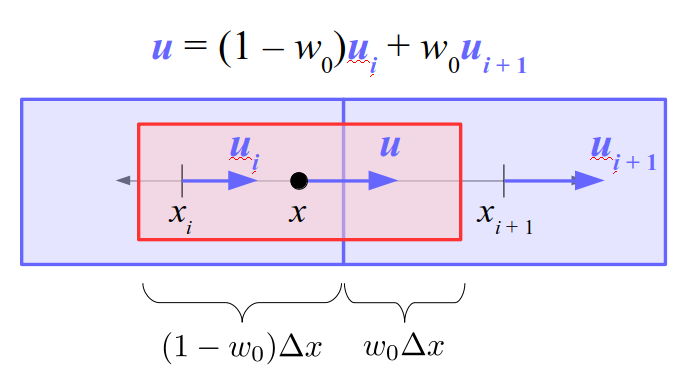

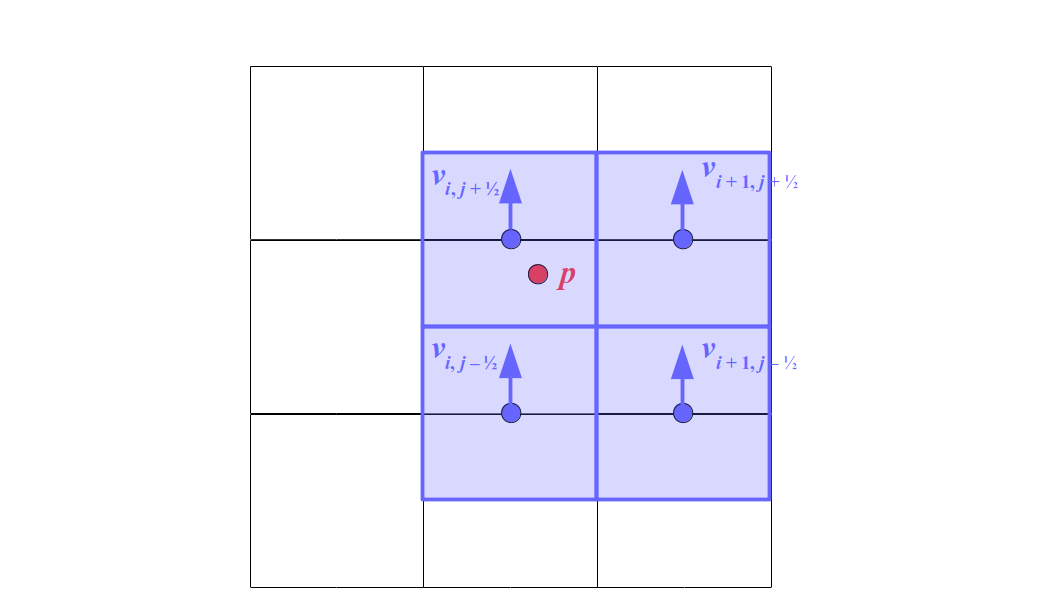

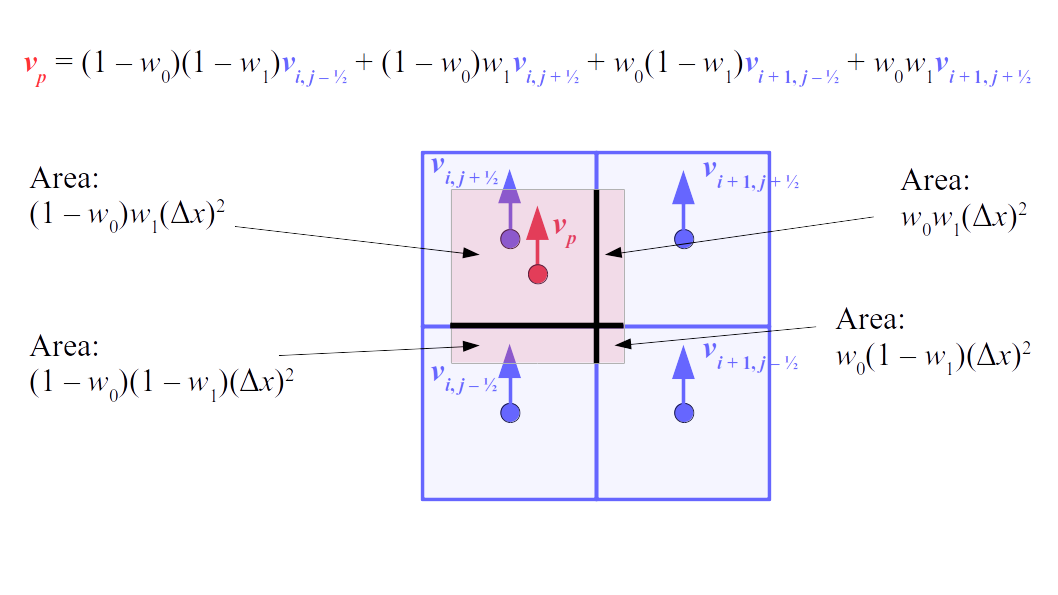

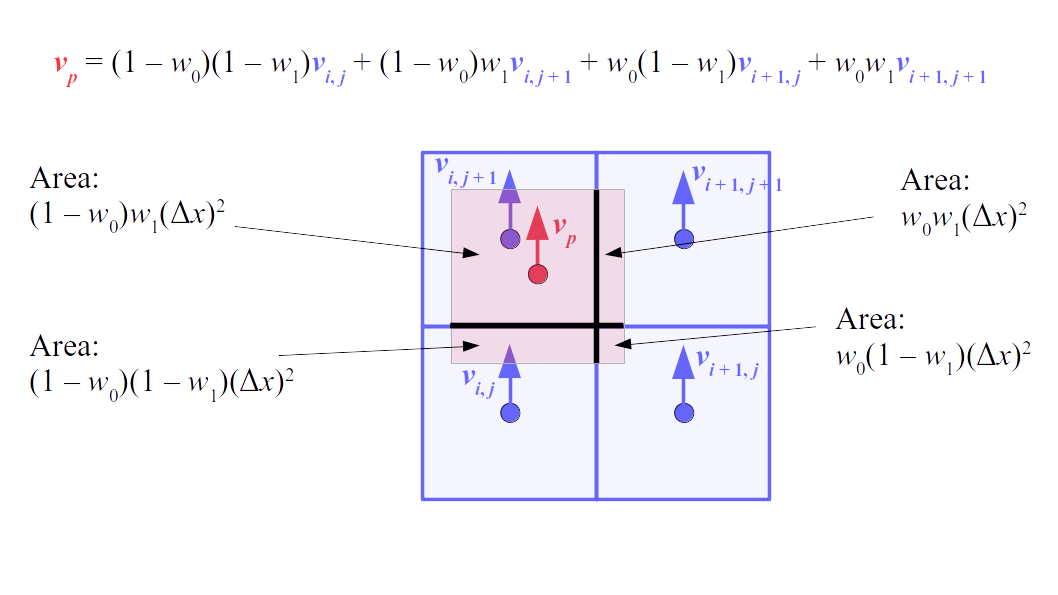

The staggered grid is a 2D or 3D grid covering the full region of space within which we simulate our fluid(s). We will label each grid cell of a 2D staggered grid with a column index, i, that counts cells along the x direction, and a row index, j, that counts cells along the y direction:

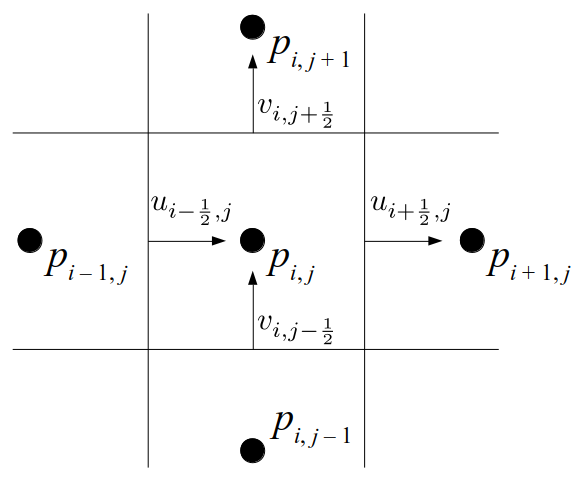

At the center of each grid cell with indices i and j, we will store a nonnegative fluid pressure value, $p_{i,j} \in \mathbb{R}$. At the center of each boundary between adjacent grid cells, we will store the component of the fluid velocity that's perpendicular to that boundary. That is, at the center of the boundary between grid cells (i, j) and (i + 1, j), we store the horizontal component, $u_{i+\frac{1}{2},j} \in \mathbb{R}$, of the fluid's velocity at that location. At the center of the boundary between grid cells (i, j) and (i, j + 1), we store the vertical component, $v_{i,j+\frac{1}{2}} \in \mathbb{R}$, of the fluid's velocity at that location:

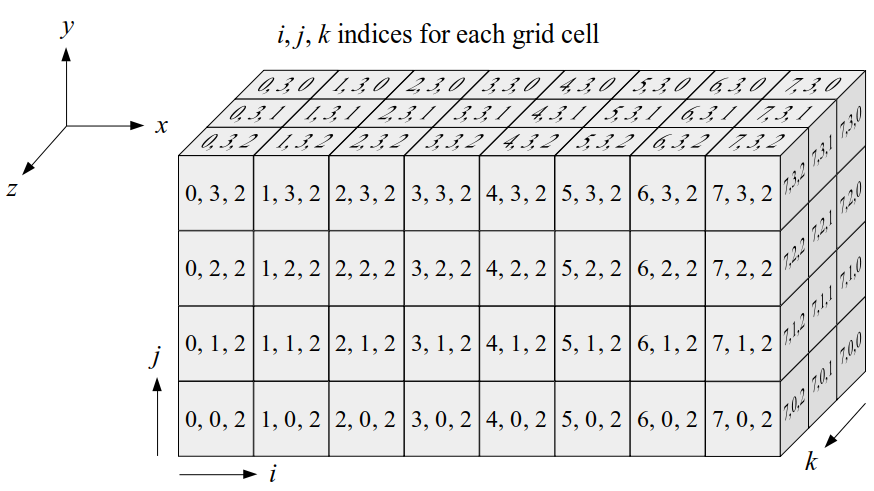

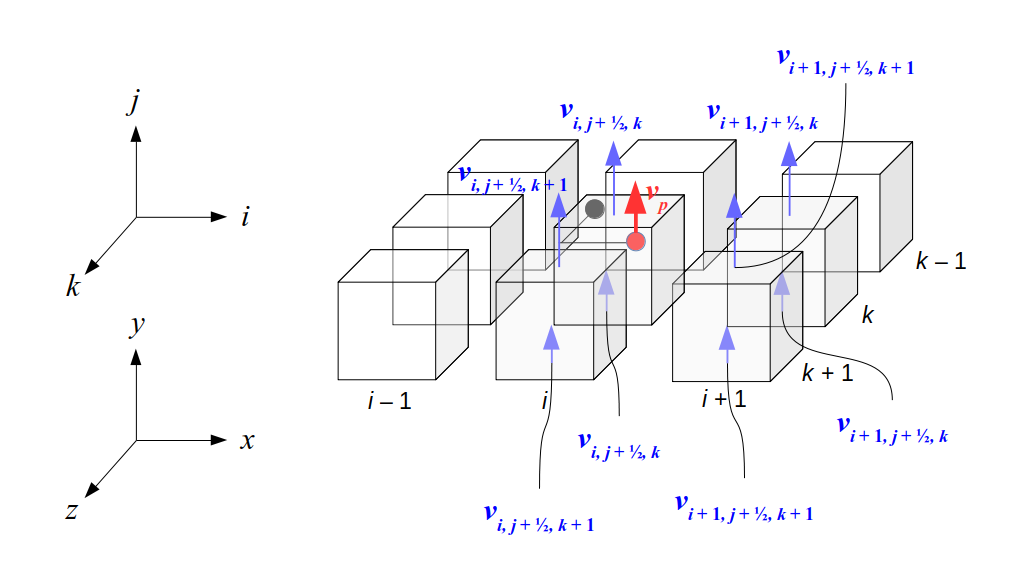

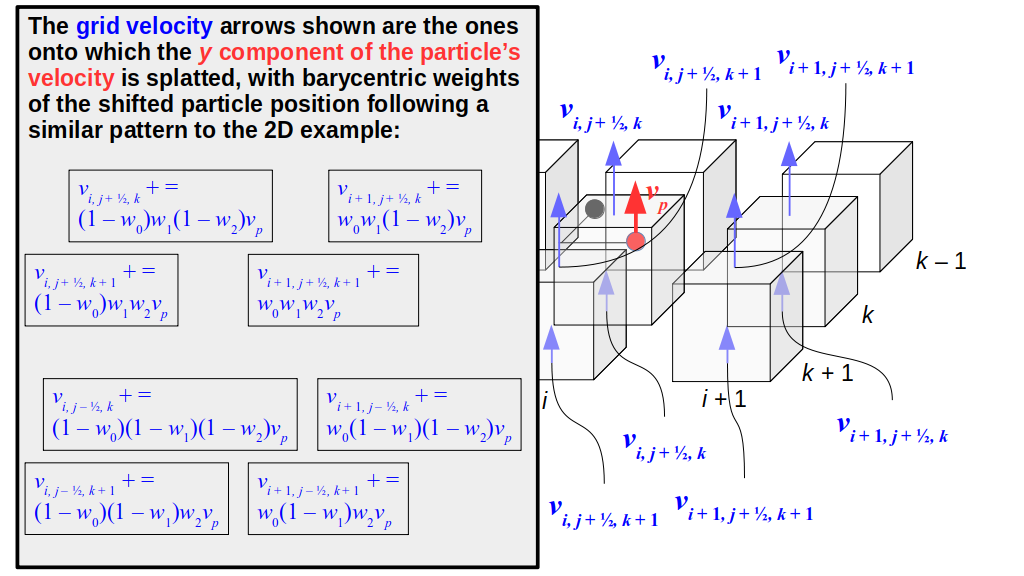

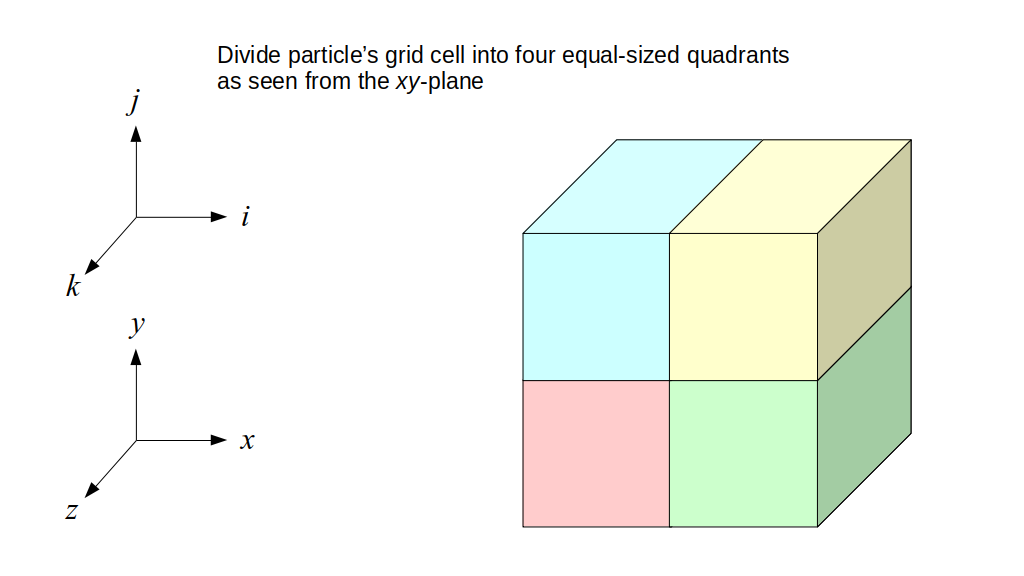

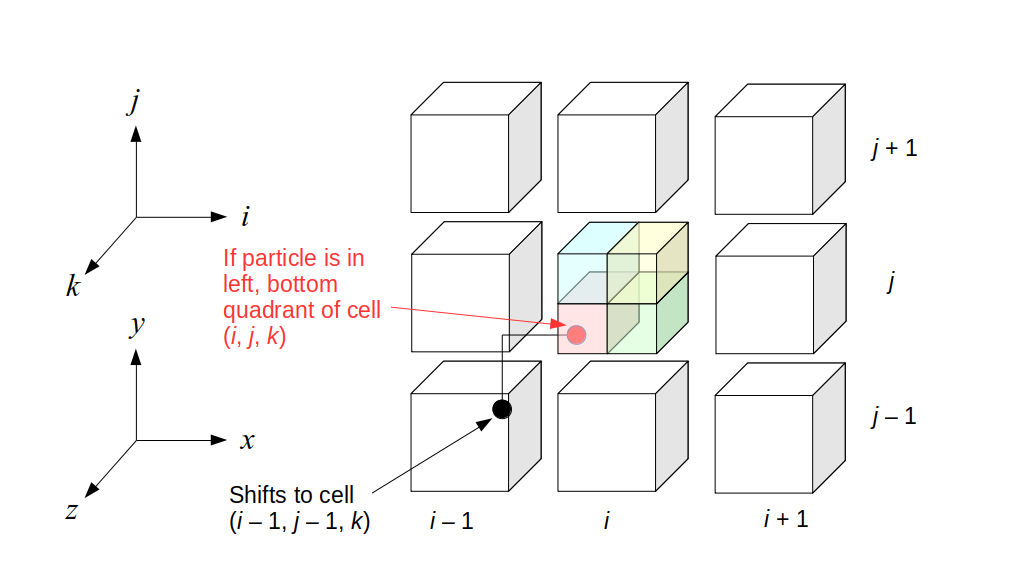

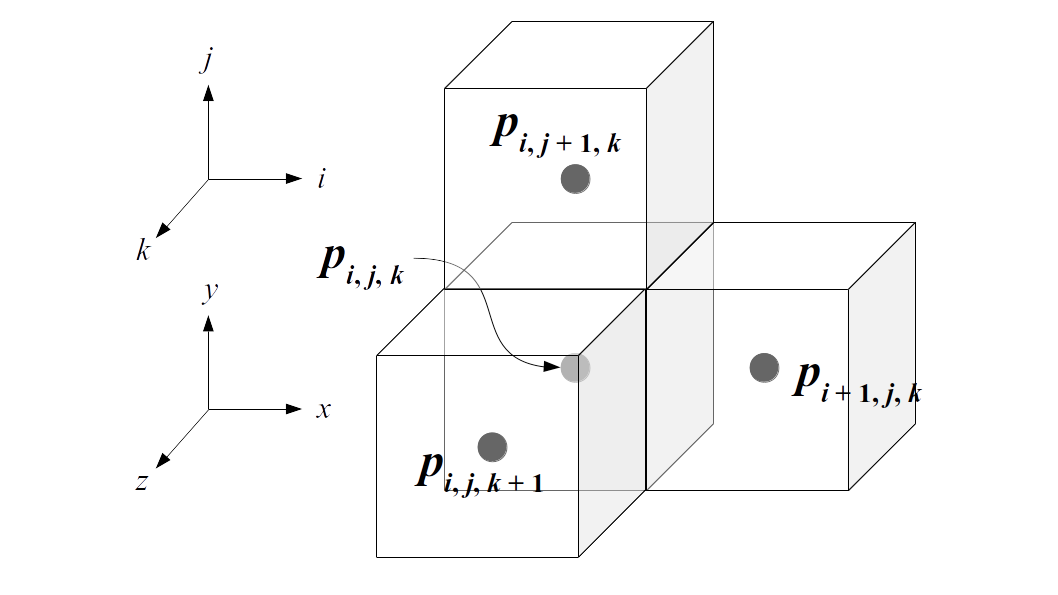

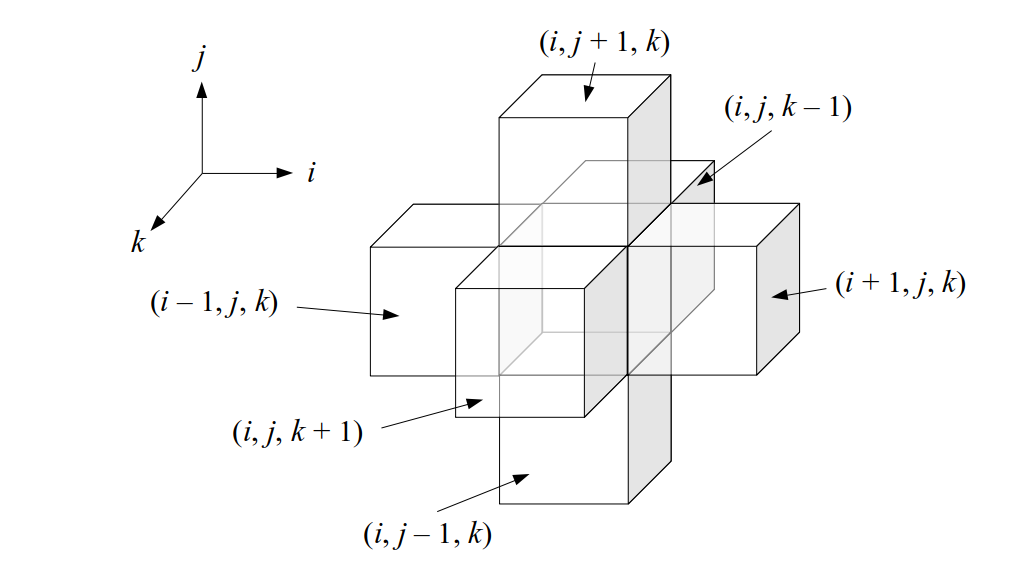
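To make these sample locations concrete, here is a small sketch that computes where each 2D value lives in space. It assumes (this is not stated above) square cells of width dx with the grid's corner at the origin, so that cell (i, j) covers [i dx, (i + 1) dx] ✕ [j dx, (j + 1) dx]; the names Point2, PressurePosition, UFacePosition, and VFacePosition are hypothetical.

```cpp
// Hypothetical 2D point type for this sketch.
struct Point2 {
  double x, y;
};

// Assumes square cells of width dx, with cell (i, j) covering
// [i*dx, (i+1)*dx] x [j*dx, (j+1)*dx].

// p_{i,j} is sampled at the center of cell (i, j).
Point2 PressurePosition(int i, int j, double dx) {
  return {(i + 0.5) * dx, (j + 0.5) * dx};
}

// u_{i+1/2,j} is sampled at the center of the face shared by cells (i, j) and (i+1, j).
Point2 UFacePosition(int i, int j, double dx) {
  return {(i + 1.0) * dx, (j + 0.5) * dx};
}

// v_{i,j+1/2} is sampled at the center of the face shared by cells (i, j) and (i, j+1).
Point2 VFacePosition(int i, int j, double dx) {
  return {(i + 0.5) * dx, (j + 1.0) * dx};
}
```

For example, with dx = 0.5, $p_{0,0}$ sits at (0.25, 0.25), $p_{1,0}$ at (0.75, 0.25), and $u_{\frac{1}{2},0}$ exactly halfway between them at (0.5, 0.25). That halfway placement is what makes a centered difference of pressure across each face natural.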

We will label each grid cell of a 3D staggered grid with an index i along the x direction, index j along the y direction, and index k along the z direction:

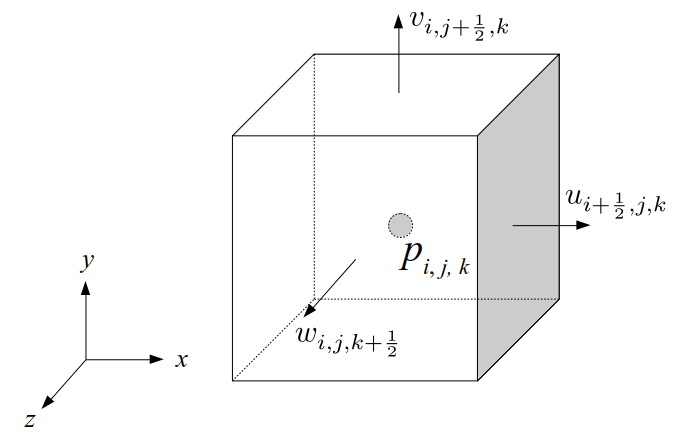

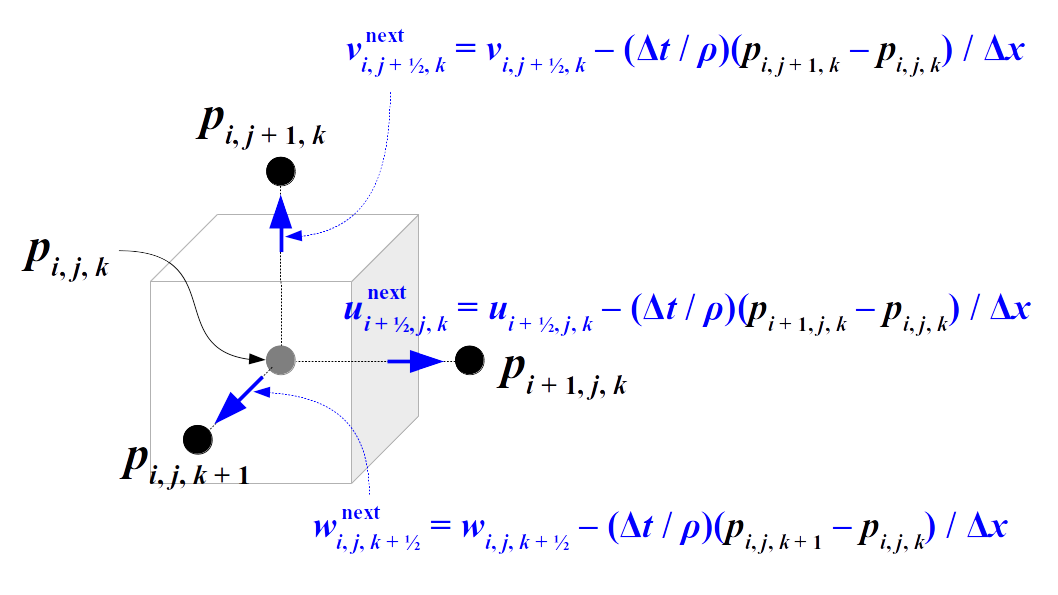

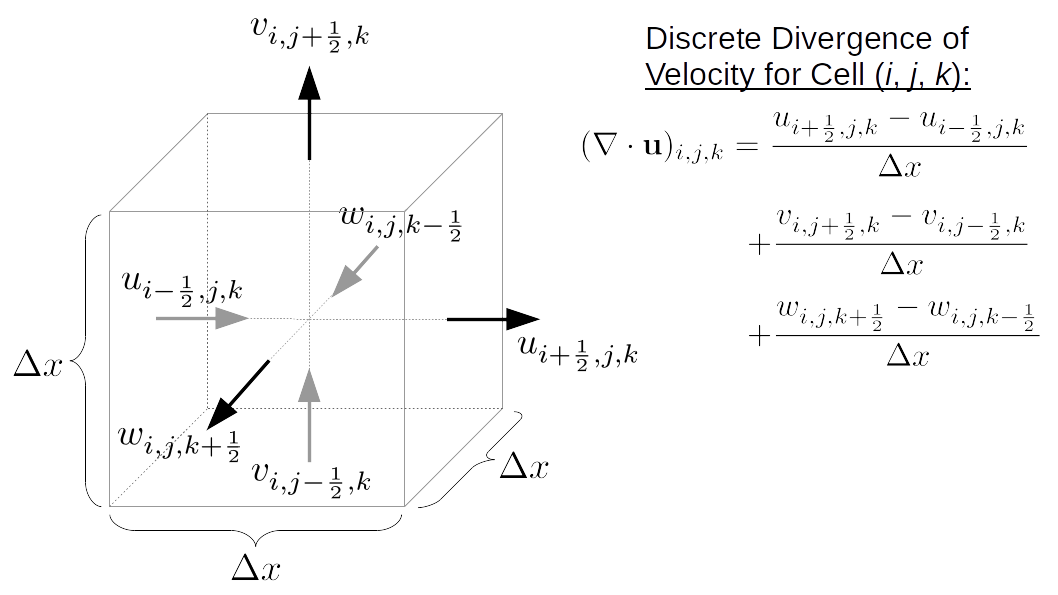

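Like the 2D staggered grid, the 3D staggered grid will store a fluid pressure value, $p_{i,j,k}$, at the center of each grid cell with indices i, j, k. At the center of each boundary face between adjacent grid cells, the 3D grid will also store the component of the fluid velocity perpendicular to that face: $u_{i+\frac{1}{2},j,k}$ between cells (i, j, k) and (i + 1, j, k), $v_{i,j+\frac{1}{2},k}$ between cells (i, j, k) and (i, j + 1, k), and $w_{i,j,k+\frac{1}{2}}$ between cells (i, j, k) and (i, j, k + 1).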

Note that for both the 2D and 3D staggered grids, the velocity components are not components of a single 2D or 3D velocity vector! Instead, we are storing different components of the fluid velocity at entirely different locations in space.

Storing Staggered Grid Data

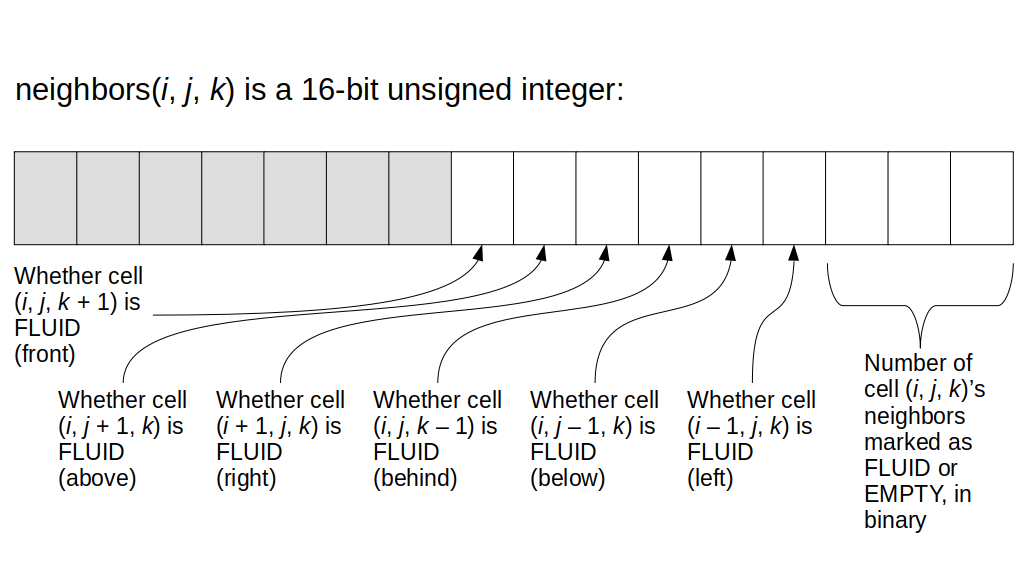

We will focus on implementing the 3D staggered grid, although we'll start with a 2D grid description since it's much easier to understand the main concepts and then extend to 3D than to see the concepts for the first time in 3D. As you can see, we need to store a variety of values in some kind of 3D array. For example, we need to store the fluid pressure for any given grid cell with indices i, j, and k. We also need to store the u, v, and w values, i.e., the x, y, and z components of the fluid velocity at the appropriate grid cell boundaries. We will also need to store non-real-number values, e.g., whether each grid cell contains a solid or fluid or is empty.

We will begin by creating a class, Array3D, based on the class of the same name in Bargteil and Shinar's SIGGRAPH course. The Array3D class will be templated so we can use it to store different types of data, e.g., double values for real numbers like pressure and velocity and other data types for labeling grid cells as containing solid or fluid or being empty. But exactly how large should our 3D array be for each type of value? For example, the velocity components are defined at the grid cell boundaries rather than at the cells themselves and we do this weird indexing of them with "½" thrown in just to make life difficult?! Let's do a quick illustration to make sure we count this properly.

Assuming we store velocities not only between grid cells but really on all faces of grid cells, i.e., including the boundary of the entire grid, we will actually end up storing differently-sized arrays for different quantities. If we define nx as the number of columns of our grid, i.e., i ∈ {0, 1, ..., nx - 1}, and define ny as the number of rows in the grid, i.e., j ∈ {0, 1, ..., ny - 1}, then it's easy to see from the diagram above of a 2D grid that the number of horizontal velocity components we store is (nx + 1) ✕ ny, while the number of vertical velocity components we store is nx ✕ (ny + 1). Meanwhile, the number of pressure values we store is just one per grid cell, i.e., nx ✕ ny. Any array of labels we store for each grid cell would also be of that size, nx ✕ ny.

Extending this to 3D, the i and j indices have the same range as above, and with nz representing the depth (number of grid cells in the z direction) of the grid, k ∈ {0, 1, ..., nz - 1}. I hope I have convinced you that the 3D array of pressure values would be a nx ✕ ny ✕ nz array. The 3D array of horizontal velocity components would be a (nx + 1) ✕ ny ✕ nz array, the vertical components would be a nx ✕ (ny + 1) ✕ nz array, and the depth (z direction) components would be a nx ✕ ny ✕ (nz + 1) array.
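As a worked example, here are the array dimensions and total value counts for a grid with nx = 3, ny = 4, and nz = 5:

$$
\begin{aligned}
p &: \; n_x \times n_y \times n_z = 3 \times 4 \times 5 \quad (60 \text{ values}) \\
u &: \; (n_x + 1) \times n_y \times n_z = 4 \times 4 \times 5 \quad (80 \text{ values}) \\
v &: \; n_x \times (n_y + 1) \times n_z = 3 \times 5 \times 5 \quad (75 \text{ values}) \\
w &: \; n_x \times n_y \times (n_z + 1) = 3 \times 4 \times 6 \quad (72 \text{ values})
\end{aligned}
$$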

First, we'll create a basic 3D array class that can take on any reasonable nonnegative integer value (up to the range covered by the type std::size_t in C++) for each of its three dimensions. The Array3D class will be templated so we can store values of any data type inside it. We will start with just a constructor, an accessor ("getter") function that allows us to look up a value at a particular i, j, k index in the array without modifying it, and a mutator ("setter") function that does the same thing as the accessor but allows us to change the value if we want. We'll also add accessor ("getter") functions that return the dimensions, nx, ny, and nz, of the array. Note that all of this code will be implemented in a single header file, Array3D.h (as is typical for templated classes, there is no associated .cpp file). I put this into a new subdirectory of our code called incremental0. The next several files of C++ code we create will also go into this same subdirectory. We could dump everything into one directory, but this way we can isolate the different stages of our code development into smaller pieces.

Note we declared the copy constructor and assignment operator as private without implementing them; this prevents accidental misuse of any default copy constructor or assignment operator by any code outside of this class (e.g., code that instantiates and uses this class), reducing the chance of unintended bugs when using this class. We also require that nx_, ny_, and nz_ are constants: they get initialized upon construction of an Array3D object and will never change during the life of the object. The object's data is deleted upon destruction. To test this class and demonstrate how to use it, we create a short file, Array3DTest.cpp, also in the incremental0 directory.
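The Array3D.h listing itself is not reproduced in this section, so here is a minimal sketch consistent with the description above. The details are assumptions rather than the tutorial's exact code: operator() serves as both the const accessor and the non-const mutator, and the values live in one flat array with i varying fastest.

```cpp
#include <cstddef>

// A minimal sketch of a templated 3D array like the Array3D class described
// above. The flat layout (i varies fastest) is an assumption of this sketch.
template <typename T>
class Array3D {
 public:
  Array3D(std::size_t nx, std::size_t ny, std::size_t nz)
      : nx_(nx), ny_(ny), nz_(nz), data_(new T[nx * ny * nz]()) {}

  ~Array3D() { delete[] data_; }  // The data is deleted upon destruction.

  // Accessor: read the value at (i, j, k) without modifying it.
  const T& operator()(std::size_t i, std::size_t j, std::size_t k) const {
    return data_[Index(i, j, k)];
  }

  // Mutator: returns a reference so the value at (i, j, k) can be changed.
  T& operator()(std::size_t i, std::size_t j, std::size_t k) {
    return data_[Index(i, j, k)];
  }

  std::size_t nx() const { return nx_; }
  std::size_t ny() const { return ny_; }
  std::size_t nz() const { return nz_; }

 private:
  // Declared but never defined: any attempt to copy an Array3D fails to build.
  Array3D(const Array3D&);
  Array3D& operator=(const Array3D&);

  // Flattens (i, j, k) into one offset; this sketch does no bounds checking.
  std::size_t Index(std::size_t i, std::size_t j, std::size_t k) const {
    return i + nx_ * (j + ny_ * k);
  }

  const std::size_t nx_, ny_, nz_;  // Fixed for the lifetime of the object.
  T* data_;
};
```

With this sketch, a test in the spirit of Array3DTest.cpp would construct Array3D<int> table(3, 4, 5), set table(0, 0, 0) = 7 and table(1, 3, 4) = 12, and then read both values back. In C++11 and later, the same copy protection is usually written as Array3D(const Array3D&) = delete;.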

To compile and run the test program, Array3DTest.cpp (which includes Array3D.h), we issue these two commands while we are in the incremental0 directory:

g++ Array3DTest.cpp -o Array3DTest

./Array3DTest

This results in the following output:

Array is 3 x 4 x 5.

table(0, 0, 0) = 7

table(1, 3, 4) = 12

This is what we expected! We created a 3 ✕ 4 ✕ 5 array of integers, assigned 7 to the value at index 0, 0, 0, assigned 12 to the value at index 1, 3, 4, and then looked up the values at those two indices and retrieved them, verifying that our mutator and accessor functions seem to be doing what we intended. To clean up after running this test program, I recommend:

rm Array3DTest

Let's leave the Array3D class implementation aside now that basic functionality is in place. We'll revisit how to change or add to this class as needed when we implement a fluid simulation algorithm.

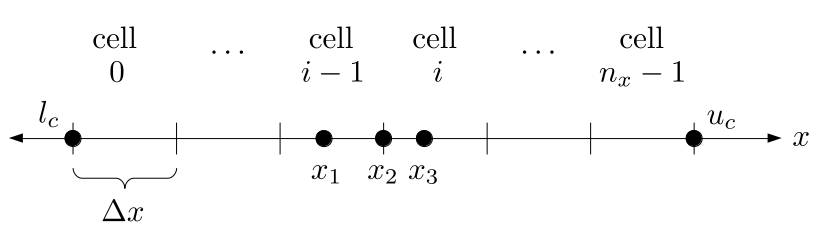

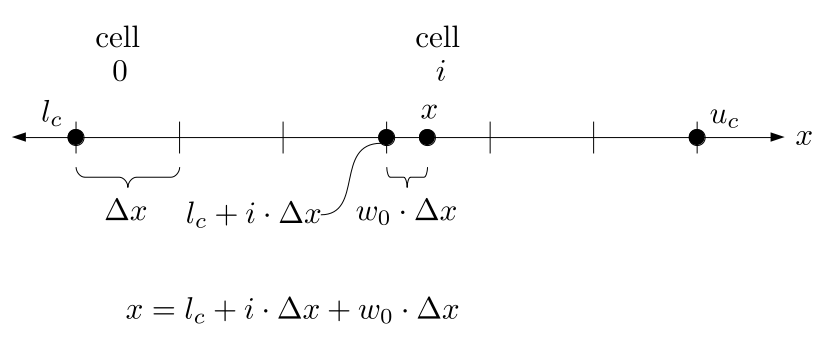

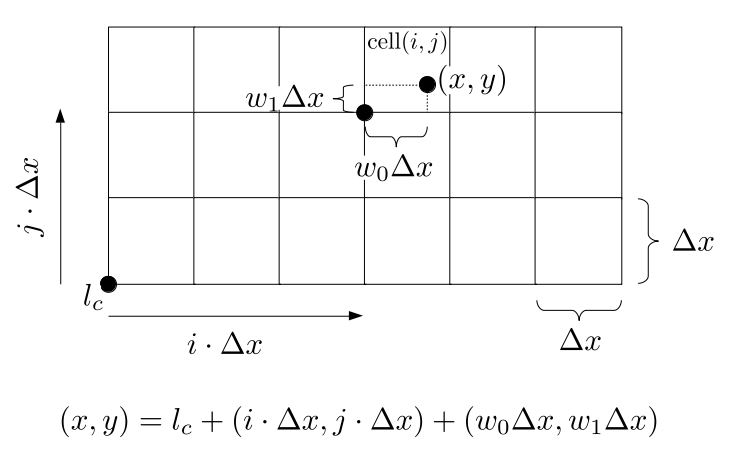

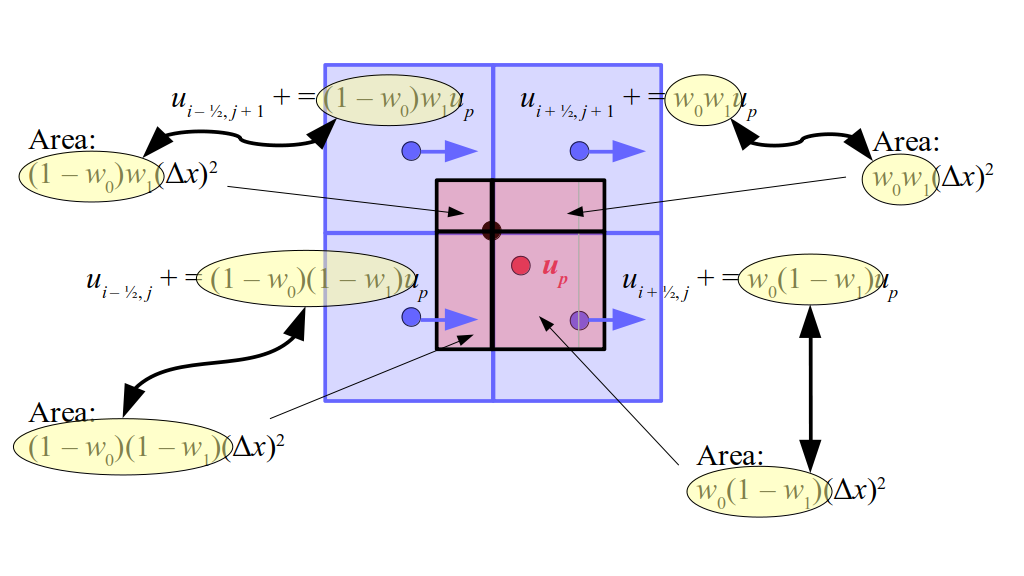

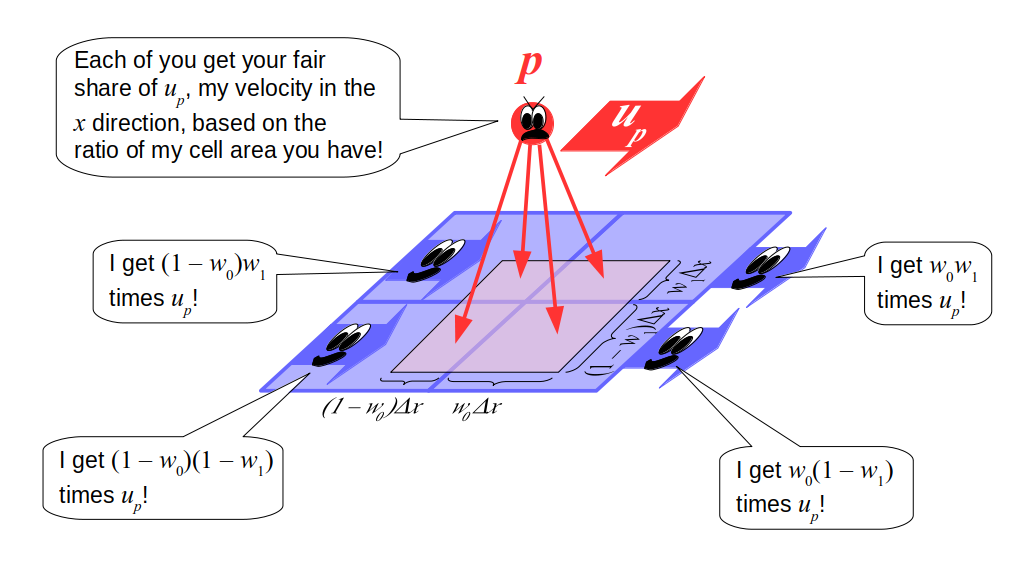

But how do we represent the velocity component arrays using the Array3D data structure? Array3D only accepts integer indices in its accessor and mutator functions, yet every index we use to refer to a velocity component in the staggered grid has ½ in one of its three positions. We use the convention Bridson and Müller-Fischer describe (see, for example, Slide 46 of this presentation). Recall that the horizontal components, u, of fluid velocity will be stored in a 3D array with dimensions (nx + 1) ✕ ny ✕ nz. The 3D array for u values in the 3D staggered grid can have $u(i, j, k) = u_{i-\frac{1}{2},j,k}$ for all values of i from 0 through (nx + 1) - 1 = nx. So $u(0, 0, 0) = u_{-\frac{1}{2},0,0}$, $u(1, 0, 0) = u_{\frac{1}{2},0,0}$, and $u(n_x, 0, 0) = u_{n_x-\frac{1}{2},0,0}$. Going from i = 0 through i = nx gives a total of nx + 1 u values in each row of the grid, one for each cell face perpendicular to the x direction in that row. Similarly, for all values of j from 0 through ny, $v(i, j, k) = v_{i,j-\frac{1}{2},k}$, and for all values of k from 0 through nz, $w(i, j, k) = w_{i,j,k-\frac{1}{2}}$. In contrast, a 3D array to store fluid pressure values just has the same dimensions as the grid itself: for i ranging from 0 through nx - 1, j ranging from 0 through ny - 1, and k ranging from 0 through nz - 1, $p(i, j, k) = p_{i,j,k}$.
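This index convention pays off when taking derivatives, which is part of why the staggered grid helps us calculate derivatives. The sketch below computes the discrete divergence of one grid cell: the two u faces of cell (i, j, k) are u(i, j, k) and u(i + 1, j, k), so ∂u/∂x becomes a centered difference across the cell, and likewise for v and w. This is the quantity the pressure projection step tries to drive toward zero. The names MacVelocities and CellDivergence, the cubic cells of width dx, and the flat std::vector storage with i varying fastest are all assumptions of this sketch.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical minimal storage for the face-centered velocity components,
// using u(i, j, k) = u_{i-1/2,j,k}, v(i, j, k) = v_{i,j-1/2,k}, w(i, j, k) = w_{i,j,k-1/2}.
struct MacVelocities {
  std::size_t nx, ny, nz;      // number of grid cells in each direction
  std::vector<double> u, v, w; // flat storage for each component array

  MacVelocities(std::size_t numX, std::size_t numY, std::size_t numZ)
      : nx(numX), ny(numY), nz(numZ),
        u((numX + 1) * numY * numZ, 0.0),
        v(numX * (numY + 1) * numZ, 0.0),
        w(numX * numY * (numZ + 1), 0.0) {}

  // Each component array has its own dimensions, so each has its own flattening.
  double& U(std::size_t i, std::size_t j, std::size_t k) {
    return u[i + (nx + 1) * (j + ny * k)];  // (nx + 1) x ny x nz
  }
  double& V(std::size_t i, std::size_t j, std::size_t k) {
    return v[i + nx * (j + (ny + 1) * k)];  // nx x (ny + 1) x nz
  }
  double& W(std::size_t i, std::size_t j, std::size_t k) {
    return w[i + nx * (j + ny * k)];        // nx x ny x (nz + 1)
  }
};

// Discrete divergence of cell (i, j, k) for cubic cells of width dx:
// each term differences the two opposite faces of the cell.
double CellDivergence(MacVelocities& vel, std::size_t i, std::size_t j,
                      std::size_t k, double dx) {
  return (vel.U(i + 1, j, k) - vel.U(i, j, k)) / dx +
         (vel.V(i, j + 1, k) - vel.V(i, j, k)) / dx +
         (vel.W(i, j, k + 1) - vel.W(i, j, k)) / dx;
}
```

For example, on a 2 ✕ 2 ✕ 2 grid with dx = 0.5, setting only u(1, 0, 0) = 1 (the face between cells 0 and 1 along x) gives a divergence of 2 in cell (0, 0, 0), where fluid leaves, and -2 in cell (1, 0, 0), where it enters.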

The Staggered Grid Data Structure

Now we can put together a basic staggered grid class that contains 3D arrays, as described above, to store pressure values and velocity component values. Here are the header file, StaggeredGrid.h, and the implementation file, StaggeredGrid.cpp. We also create a small test program in another file, StaggeredGridTest.cpp. All of these files are also in my incremental0 directory.

We compile this with the following command:

g++ StaggeredGrid.cpp StaggeredGridTest.cpp

Note that both the implementation file, StaggeredGrid.cpp, and the test program file, StaggeredGridTest.cpp, must be compiled together. If you only compile the test program, you will get undefined reference errors because the linker won't be able to find the implementations of the constructor and destructor that are in StaggeredGrid.cpp. Next, run the resulting program (g++ names it a.out by default, since we didn't pass -o):

./a.out

The output should look like:

Created StaggeredGrid:

- p array is 3 x 4 x 5

- u array is 4 x 4 x 5

- v array is 3 x 5 x 5

- w array is 3 x 4 x 6

This matches what we expected: the original grid is 3 ✕ 4 ✕ 5. The u array has one more slice in the x direction than the original grid, so it's 4 ✕ 4 ✕ 5. Similarly, the v and w arrays each have exactly one dimension with one more slice than the original grid: the y dimension for v and the z dimension for w.
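The StaggeredGrid.h and StaggeredGrid.cpp listings are not reproduced in this section. The following is a minimal, self-contained sketch of the same idea, not the tutorial's code: Grid3D is a hypothetical stand-in for Array3D<double>, and the hypothetical Describe() member returns the same lines as the test program output above.

```cpp
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for Array3D<double>: dimensions plus flat storage.
struct Grid3D {
  std::size_t nx, ny, nz;
  std::vector<double> data;

  Grid3D(std::size_t x, std::size_t y, std::size_t z)
      : nx(x), ny(y), nz(z), data(x * y * z, 0.0) {}

  // Formats the dimensions as "nx x ny x nz".
  std::string Dims() const {
    std::ostringstream out;
    out << nx << " x " << ny << " x " << nz;
    return out.str();
  }
};

// A minimal staggered grid sketch: pressure at cell centers and one
// component array per set of faces, each with one extra slice along its axis.
class StaggeredGrid {
 public:
  StaggeredGrid(std::size_t nx, std::size_t ny, std::size_t nz)
      : p_(nx, ny, nz), u_(nx + 1, ny, nz), v_(nx, ny + 1, nz), w_(nx, ny, nz + 1) {}

  // Summarizes the array sizes in the same format as the output above.
  std::string Describe() const {
    return "Created StaggeredGrid:\n- p array is " + p_.Dims() +
           "\n- u array is " + u_.Dims() +
           "\n- v array is " + v_.Dims() +
           "\n- w array is " + w_.Dims() + "\n";
  }

 private:
  Grid3D p_, u_, v_, w_;
};
```

Constructing StaggeredGrid grid(3, 4, 5) and printing grid.Describe() would produce the same four array sizes as the test program above.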

Grids vs. Particles for Fluid Simulation

We haven't fully clarified why we use a staggered grid for fluid simulation other than something about better derivative-taking. This will get clearer as we flesh out the complete algorithm we'll be implementing for fluid simulation. Critical to our approach is using not only this staggered grid, but also particles in the spirit of what we did earlier when simulating Newtonian mechanics using spheres. What is this madness? We use particles and grids? To do what exactly?

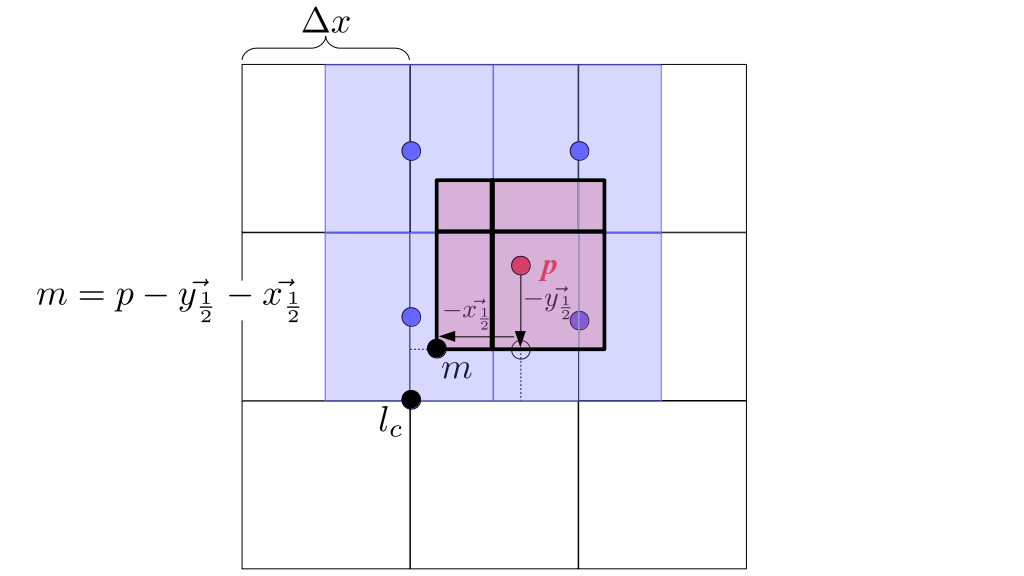

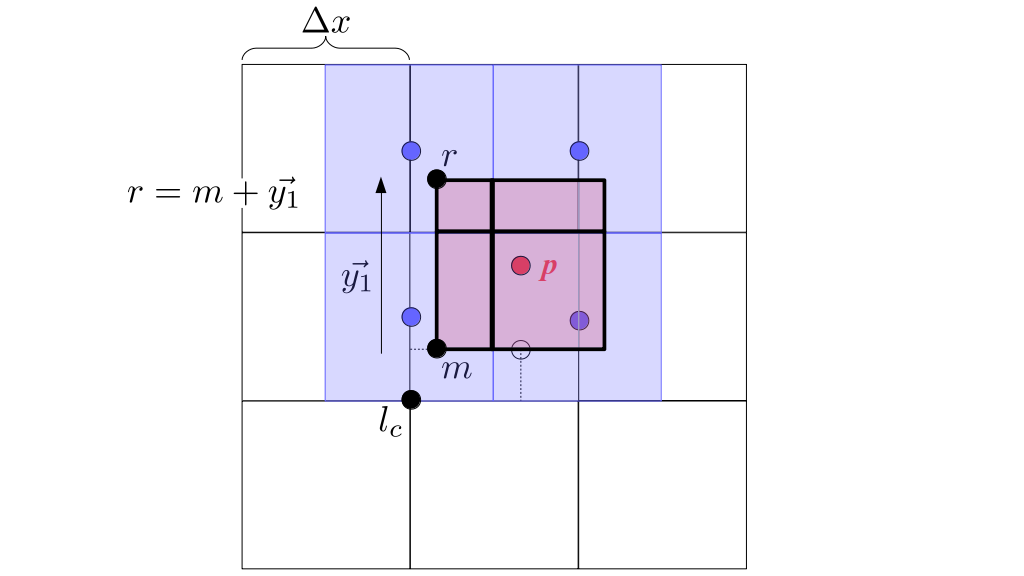

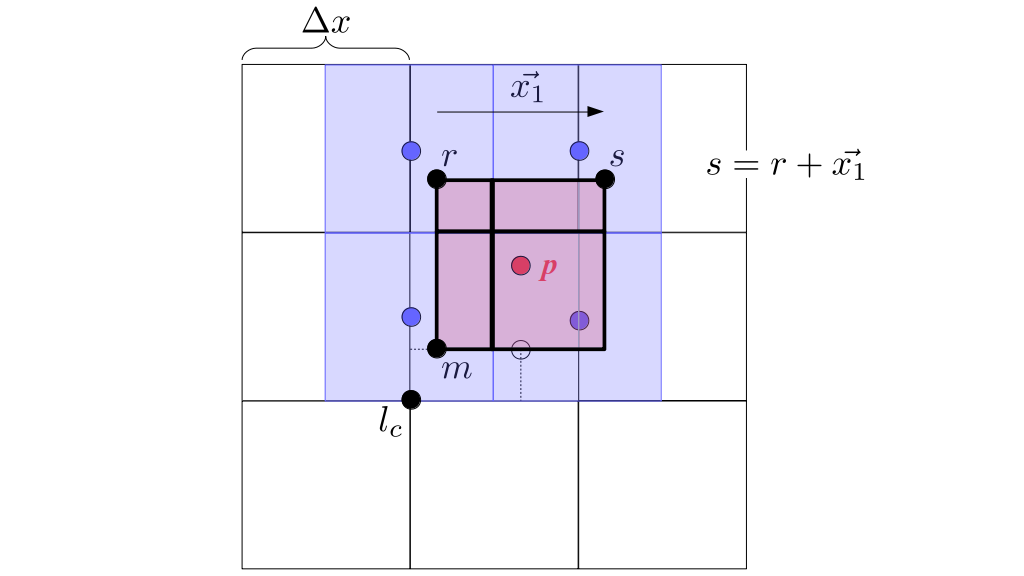

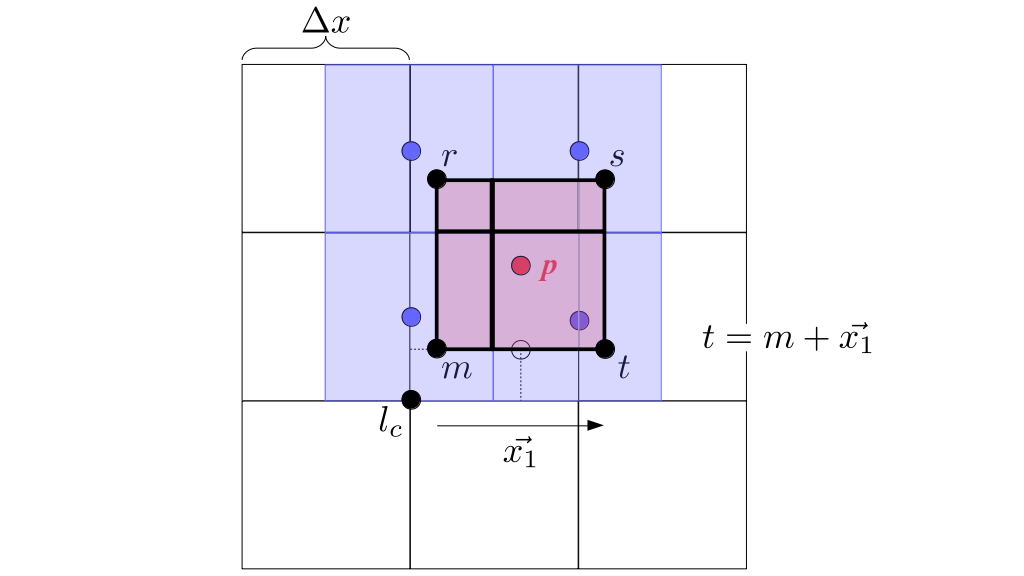

Let's back up a little more before we continue onto more details of our simulation approach. How do you use a computer to simulate the physics of fluids? As we've seen earlier, we can simulate individual particles that feel forces, bounce off of the walls of the viewing frustum, and bump into each other. But it's also common to use grids to simulate fluids: instead of tracking individual particles, you can keep track of fluid properties like velocity and pressure at specific, fixed points on a grid in space.